Artificial Intelligence

Tectonic Salesforce Implementation Partner

Artificial intelligence (AI) is the ability of computational systems to perform tasks traditionally associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. As a branch of computer science, AI focuses on developing software and methodologies that enable machines to interpret their environment, learn from data, and take actions to optimize outcomes. These intelligent systems, often referred to as AIs, can operate autonomously or assist humans in various domains.

AI is widely used in high-profile applications, including advanced web search engines (e.g., Google Search), recommendation systems (e.g., YouTube, Amazon, Netflix), virtual assistants (e.g., Siri, Google Assistant, Alexa), autonomous vehicles (e.g., Waymo), generative and creative tools (e.g., ChatGPT, AI-generated art), and strategy game analysis (e.g., chess and Go). However, many AI-driven technologies become so commonplace that they are no longer recognized as AI. As one expert observed, once something becomes useful and common enough, it stops being labeled AI.

AI research spans multiple subfields, each focused on specific objectives and methodologies. Key areas include machine learning, reasoning, knowledge representation, planning, natural language processing, perception, and robotics. One of AI’s long-term ambitions is to achieve general intelligence—the ability to perform any intellectual task at a human level or beyond. To reach these goals, AI researchers leverage techniques such as search algorithms, mathematical optimization, formal logic, artificial neural networks, and statistical methods. AI also draws insights from psychology, linguistics, philosophy, neuroscience, and other disciplines.

Established as an academic field in 1956, AI has gone through repeated cycles of optimism followed by periods of disappointment and reduced funding, known as AI winters. A major breakthrough came in 2012, when deep learning significantly outperformed earlier AI methods, reigniting investment and enthusiasm. The pace of advancement accelerated further after 2017 with the introduction of the transformer architecture, leading to the AI boom of the early 2020s. The rise of generative AI in this period brought powerful new capabilities but also raised concerns about unintended consequences, ethical implications, and long-term risks. As AI continues to evolve, discussions of regulation and policy increasingly focus on ensuring its responsible development and societal benefit.

AI Goals and Capabilities

The general problem of replicating or creating intelligence has been broken into specialized subproblems. Researchers focus on developing specific traits and abilities that an intelligent system is expected to display. The following areas represent key challenges and active research directions within AI.

Reasoning and Problem-Solving

Early AI research focused on algorithms that mimic human step-by-step reasoning, such as solving puzzles or making logical deductions. By the late 20th century, methods incorporating probability and economics allowed AI to handle uncertainty and incomplete information. However, many reasoning algorithms struggle with “combinatorial explosion,” where complexity increases exponentially with problem size. Unlike early AI models, human cognition often relies on fast, intuitive judgments rather than strict logical deduction. Developing AI that can reason both accurately and efficiently remains an ongoing challenge.

Knowledge Representation

AI systems require structured knowledge to interpret the world, make decisions, and answer questions. Knowledge representation involves organizing facts, concepts, relationships, and rules within a given domain. Ontologies define objects, properties, and relationships, enabling AI to perform content-based retrieval, scene interpretation, decision support, and knowledge discovery.

Challenges in this area include capturing vast amounts of commonsense knowledge—information humans assume without explicit reasoning—and acquiring knowledge in a format AI can effectively process. Because much human knowledge is implicit rather than explicitly stated, AI struggles with representing and utilizing this “sub-symbolic” knowledge.

Planning and Decision-Making

AI systems, or “agents,” operate by perceiving their environment and taking actions toward a goal. In planning, an agent works with a predefined objective, while in decision-making, it assigns preferences to different outcomes. Decision-making often involves calculating “expected utility”—weighing possible outcomes based on probability and desirability.
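The expected-utility calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not a real decision system: the action names, probabilities, and utility values are all made-up assumptions chosen only to show the arithmetic.

```python
# Hypothetical actions: each maps to a list of (probability, utility) outcomes.
# The first outcome in each list is "it rains", the second "it stays dry".
actions = {
    "take_umbrella": [(0.3, 8.0), (0.7, 6.0)],
    "leave_umbrella": [(0.3, 0.0), (0.7, 10.0)],
}


def expected_utility(outcomes):
    """Sum of probability-weighted utilities for one action."""
    return sum(p * u for p, u in outcomes)


def best_action(actions):
    """Return the action whose expected utility is highest."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


for name, outcomes in actions.items():
    print(name, expected_utility(outcomes))
print("chosen:", best_action(actions))
```

With these assumed numbers, leaving the umbrella scores 7.0 against 6.6 for taking it, so the agent leaves it behind; a higher rain probability would flip the choice.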

In deterministic settings, AI can predict the consequences of actions precisely. However, in real-world scenarios with uncertainty and incomplete information, AI must estimate outcomes and adapt dynamically. Markov decision processes model such environments by mapping probabilities and rewards to guide decision-making. Additionally, game theory helps AI navigate competitive or cooperative multi-agent scenarios.
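As a rough sketch of how a Markov decision process guides decisions, the Python below runs value iteration on a tiny two-state problem. The states, actions, transition probabilities, rewards, and discount factor are all hypothetical values invented for illustration.

```python
GAMMA = 0.9  # assumed discount factor for future rewards

# Hypothetical MDP: mdp[state][action] = [(probability, next_state, reward), ...]
mdp = {
    "low": {
        "wait": [(1.0, "low", 0.0)],
        "charge": [(0.8, "high", 0.0), (0.2, "low", 0.0)],
    },
    "high": {
        "wait": [(1.0, "high", 1.0)],
        "work": [(0.5, "high", 3.0), (0.5, "low", 3.0)],
    },
}


def q_value(values, outcomes):
    """Expected reward plus discounted value of the next state for one action."""
    return sum(p * (r + GAMMA * values[s2]) for p, s2, r in outcomes)


def value_iteration(mdp, sweeps=200):
    """Repeatedly back up state values until they settle."""
    values = {s: 0.0 for s in mdp}
    for _ in range(sweeps):
        values = {
            s: max(q_value(values, outs) for outs in mdp[s].values())
            for s in mdp
        }
    return values


def greedy_policy(mdp, values):
    """Pick, in each state, the action with the highest backed-up value."""
    return {
        s: max(mdp[s], key=lambda a: q_value(values, mdp[s][a]))
        for s in mdp
    }


values = value_iteration(mdp)
print(greedy_policy(mdp, values))
```

For this toy problem the resulting policy is to charge when in the low state and to work when in the high state, because working pays more than waiting even after accounting for the risk of dropping back to low.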

Learning

Machine learning (ML) enables AI systems to improve performance without explicit programming. Different approaches include:

- Unsupervised learning: Identifies patterns in unlabeled data without predefined categories.

- Supervised learning: Trains on labeled examples to classify data or predict values.

- Reinforcement learning: AI learns through trial and error, receiving rewards for desirable actions.

- Transfer learning: Applies knowledge from one task to another.

- Deep learning: Uses artificial neural networks inspired by the human brain to process complex data.

Computational learning theory assesses learning algorithms by criteria such as computational complexity, sample complexity (how much data is required), and other notions of optimization.

Natural Language Processing (NLP)

NLP enables AI to understand, interpret, and generate human language. Tasks include speech recognition, machine translation, sentiment analysis, and question answering. Early NLP relied on linguistic rules and syntax-based models, but modern advancements, such as word embeddings and transformers, have revolutionized language processing.

Generative pre-trained transformer (GPT) models, first released in 2018, were generating coherent text by 2019. By 2023, these models achieved near-human performance on standardized tests, including the bar exam, SAT, and GRE.

Perception

Machine perception allows AI to interpret sensory input, such as images, sounds, and environmental signals. Computer vision enables image recognition, facial recognition, and object tracking, while speech recognition deciphers spoken language. AI-driven perception technologies are critical in fields like robotics, autonomous vehicles, and security.

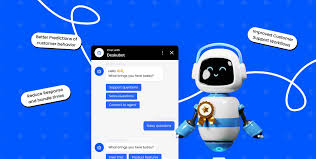

Social Intelligence and Affective Computing

AI systems are increasingly designed to understand and simulate human emotions. Affective computing enables AI to detect moods, interpret sentiment, and engage in natural interactions. Some virtual assistants use humor and conversational cues to enhance human-computer interactions. However, users may mistakenly overestimate an AI’s intelligence based on these social cues.

Artificial General Intelligence (AGI)

While current AI excels at specific tasks, artificial general intelligence (AGI) would possess human-like adaptability, enabling it to solve a broad range of problems across different domains. AGI remains a long-term goal, requiring advancements in reasoning, learning, perception, and decision-making to achieve human-level intelligence.

Search and Optimization in AI

AI solves complex problems by intelligently searching through possible solutions. There are two main types of search: state space search and local search.

State Space Search

State space search navigates through a tree of possible states to find a goal state. For example, planning algorithms explore goals and subgoals to determine a path to a target, a method called means-ends analysis.

However, exhaustive searches are often impractical because the number of possibilities grows exponentially, making searches too slow or infeasible. To address this, AI uses heuristics—rules of thumb that prioritize more promising choices.
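To make the role of heuristics concrete, here is a small sketch of A* search, one common heuristic search algorithm (the text above does not name a specific one). The grid layout is a made-up example, and the Manhattan distance serves as the rule of thumb that steers the search toward the goal.

```python
import heapq

# Hypothetical 4x4 grid: S = start, G = goal, # = wall, . = free cell.
GRID = ["S.#.", "..#.", "....", "##.G"]


def find(ch):
    """Return the (row, col) of the first cell containing ch."""
    for r, row in enumerate(GRID):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(ch)


def manhattan(a, b):
    """Heuristic: grid distance ignoring walls (never overestimates)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def neighbors(cell):
    """Yield the free cells directly above, below, left, and right."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]) and GRID[nr][nc] != "#":
            yield (nr, nc)


def a_star(start, goal):
    """Return a shortest path from start to goal as a list of cells, or None."""
    frontier = [(manhattan(start, goal), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > cost[cell]:
            continue  # stale queue entry; a cheaper route was already found
        for nxt in neighbors(cell):
            new_g = g + 1
            if new_g < cost.get(nxt, float("inf")):
                cost[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(frontier, (new_g + manhattan(nxt, goal), new_g, nxt))
    return None


print(a_star(find("S"), find("G")))
```

The priority queue always expands the cell whose cost so far plus estimated remaining distance is smallest, so promising cells are explored first instead of every possibility.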

In adversarial settings, such as game-playing AI (e.g., chess or Go), adversarial search is used. This involves evaluating possible moves and countermoves to find a winning strategy.
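Chess and Go are far too large for a short example, so the sketch below applies minimax, a basic form of adversarial search, to a much simpler game chosen purely for illustration: players alternately remove one to three stones from a pile, and whoever takes the last stone wins.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def minimax(stones, maximizing):
    """Return +1 if the maximizing player wins with best play, else -1."""
    if stones == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    scores = [
        minimax(stones - take, not maximizing)
        for take in (1, 2, 3)
        if take <= stones
    ]
    return max(scores) if maximizing else min(scores)


def best_move(stones):
    """Number of stones the maximizing player should take."""
    moves = [take for take in (1, 2, 3) if take <= stones]
    return max(moves, key=lambda take: minimax(stones - take, False))


print(minimax(4, True))  # -1: a pile of four is lost for the player to move
print(best_move(5))      # 1: taking one stone leaves the opponent with four
```

The function looks ahead through every move and countermove, assuming the opponent always picks the reply that is worst for the maximizing player. Real game engines add depth limits, evaluation functions, and pruning on top of this idea.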

Local Search

Local search uses mathematical optimization techniques to refine an initial guess incrementally.

- Gradient descent optimizes numerical parameters by adjusting them step by step to reduce a loss function. It is widely used to train neural networks, with the required gradients computed by backpropagation.

- Evolutionary computation improves candidate solutions by simulating natural selection—mutating and recombining solutions and selecting the best ones.

- Swarm intelligence algorithms, like particle swarm optimization (inspired by bird flocking) and ant colony optimization (modeled after ant trails), coordinate distributed search efforts.
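Of the local search methods listed above, gradient descent is the easiest to show in a few lines. The sketch below minimizes a simple two-parameter loss function; the loss, starting point, learning rate, and step count are assumptions picked for illustration rather than values from any real model.

```python
def loss(x, y):
    """Hypothetical bowl-shaped loss with its minimum at (3, -1)."""
    return (x - 3.0) ** 2 + (y + 1.0) ** 2


def gradient(x, y):
    """Partial derivatives of the loss with respect to x and y."""
    return 2.0 * (x - 3.0), 2.0 * (y + 1.0)


def gradient_descent(x, y, learning_rate=0.1, steps=100):
    """Start from an initial guess and repeatedly step against the gradient."""
    for _ in range(steps):
        gx, gy = gradient(x, y)
        x -= learning_rate * gx
        y -= learning_rate * gy
    return x, y


x, y = gradient_descent(0.0, 0.0)
print(round(x, 4), round(y, 4), loss(x, y))
```

Each step shrinks the distance to the minimum by a constant factor here, so the initial guess of (0, 0) ends up very close to (3, -1). Neural network training follows the same loop with many more parameters.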

Logic in AI

AI relies on formal logic for reasoning and knowledge representation.

- Propositional logic operates on true/false statements using logical connectives (e.g., “and”, “or”, “not”).

- Predicate logic extends this by handling objects, predicates, and relationships, using quantifiers such as “every” and “some” (e.g., “Every X is a Y”).

AI uses deductive reasoning to derive conclusions from premises. Problem-solving in AI often involves searching for a proof tree, where each node represents a logical step toward a solution.

- Horn clause logic, used in Prolog, enables efficient reasoning.

- Fuzzy logic assigns degrees of truth (from 0 to 1) to handle statements that are vague or only partially true.

- Non-monotonic logic allows AI to reason with incomplete information, updating conclusions as new data becomes available.
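Deductive reasoning over Horn clauses can be sketched with a simple forward-chaining loop. Note the assumptions: the rules and facts below are invented, the clauses are propositional (no variables), and the loop works forward from facts, whereas Prolog itself reasons backward from a query.

```python
# Hypothetical Horn clauses: (set of premises, conclusion).
rules = [
    ({"rain"}, "wet_ground"),
    ({"wet_ground", "cold"}, "ice"),
    ({"ice"}, "slippery"),
]


def forward_chain(facts, rules):
    """Apply rules until no new conclusion can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"rain", "cold"}, rules)))
```

Starting from "rain" and "cold", the loop derives "wet_ground", then "ice", then "slippery". Each derived fact corresponds to one step in a proof of the final conclusion.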

Probabilistic Methods for Uncertain Reasoning

AI frequently operates under uncertainty. Probabilistic models help AI make informed decisions:

- Bayesian networks enable AI to reason, learn, plan, and perceive by updating beliefs based on new evidence.

- Hidden Markov models (HMMs) and Kalman filters process time-dependent data for applications like speech recognition and robotics.

- Markov decision processes (MDPs) and game theory support AI decision-making in uncertain environments.
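The belief updating that underlies Bayesian networks rests on Bayes' rule, which the sketch below applies to a single hypothesis. The prior and likelihood values are hypothetical numbers for a made-up diagnostic test, not figures from any study.

```python
def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Posterior probability of a hypothesis after seeing one piece of evidence."""
    evidence = prior * likelihood_if_true + (1.0 - prior) * likelihood_if_false
    return prior * likelihood_if_true / evidence


# Assumed numbers: 1% prior, 90% true-positive rate, 5% false-positive rate.
after_one = bayes_update(0.01, 0.9, 0.05)
# A second, independent positive result uses the first posterior as its prior.
after_two = bayes_update(after_one, 0.9, 0.05)
print(round(after_one, 3), round(after_two, 3))
```

Even a fairly accurate test leaves the hypothesis unlikely after one positive result because the prior is so low; a second positive result raises the belief substantially. A Bayesian network chains many such updates across related variables.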

Machine Learning and Classification

AI applications commonly involve classifiers, which recognize patterns in data. Supervised learning fine-tunes classifiers using labeled examples.

Popular classifiers include:

- Decision trees – Simple and widely used in symbolic machine learning.

- K-nearest neighbor (KNN) – A traditional method for analogical AI.

- Support vector machines (SVMs) – Replaced KNN in the 1990s for better pattern separation.

- Naïve Bayes classifier – Popular due to its scalability and reportedly widely used at Google.

- Neural networks – Used extensively in modern AI classification tasks.
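As an illustration of how a classifier learns from labeled examples, here is a minimal k-nearest-neighbor sketch. The training points and labels are invented, and the example uses only the standard library rather than a machine learning package.

```python
import math
from collections import Counter

# Hypothetical labeled examples: ((feature_1, feature_2), label).
training = [
    ((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.0), "A"),
    ((6.0, 5.0), "B"), ((7.0, 7.0), "B"), ((6.5, 6.0), "B"),
]


def knn_classify(point, training, k=3):
    """Label a point by majority vote among its k closest training examples."""
    nearest = sorted(training, key=lambda item: math.dist(point, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_classify((1.2, 1.5), training))  # A
print(knn_classify((6.0, 6.0), training))  # B
```

The classifier stores the examples and decides by analogy: a new point receives the label that is most common among the stored points closest to it.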

Artificial Neural Networks and Deep Learning

Artificial neural networks (ANNs) consist of interconnected nodes (neurons) that process and recognize patterns.

- Feedforward neural networks pass signals in one direction.

- Recurrent neural networks (RNNs) use feedback loops to handle sequential data.

- Convolutional neural networks (CNNs) enhance pattern recognition in images.
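A feedforward network can be shown at its smallest scale with two layers of threshold neurons. In this sketch the weights are set by hand (an assumption made for clarity; real networks learn their weights from data) so that the network computes the XOR of two binary inputs.

```python
def step(x):
    """Threshold activation: the neuron fires (1) when its input is positive."""
    return 1 if x > 0 else 0


def layer(inputs, weights, biases):
    """Compute one layer: each neuron takes a weighted sum of inputs plus a bias."""
    return [
        step(sum(w * i for w, i in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hand-picked weights: hidden neuron 1 acts as OR, hidden neuron 2 as AND.
HIDDEN_W, HIDDEN_B = [[1, 1], [1, 1]], [-0.5, -1.5]
# The output neuron fires when OR is on and AND is off.
OUT_W, OUT_B = [[1, -1]], [-0.5]


def xor_net(a, b):
    """Pass signals forward through the hidden layer, then the output layer."""
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUT_W, OUT_B)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

Signals flow in one direction only, from inputs to hidden neurons to the output. A single threshold neuron cannot compute XOR, which is why the hidden layer is needed.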

Deep learning utilizes multiple layers of neurons to extract hierarchical features, making it highly effective in computer vision, speech recognition, and natural language processing. Its success is driven by increased computational power (GPUs) and large datasets like ImageNet.

Generative AI and GPT Models

Generative pre-trained transformers (GPTs) are large language models (LLMs) that generate text by repeatedly predicting the next token (a word, part of a word, or punctuation mark) from the preceding context. These models are pre-trained on vast amounts of text data and then typically refined with reinforcement learning from human feedback (RLHF) to improve accuracy and reduce misinformation.
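The idea of generating text by predicting what comes next can be illustrated with a toy model that simply counts which word follows which. This is emphatically not how a GPT works internally (there is no neural network or transformer here, and the training text is a made-up sentence pair); it only shows the prediction step in its simplest form.

```python
from collections import Counter, defaultdict

# Hypothetical training text, split into word-level tokens.
corpus = "the cat sat on the mat . the cat ate the fish .".split()


def train_bigrams(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of a token, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = train_bigrams(corpus)
print(predict_next(counts, "the"))  # cat
print(predict_next(counts, "sat"))  # on
```

A large language model replaces the count table with a neural network that conditions on a long context rather than a single preceding word, but it is likewise trained to score likely continuations.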

Current GPT-style models and services include Gemini (formerly Bard), ChatGPT, Grok, Claude, Copilot, and LLaMA. Multimodal models of this kind can process images, video, and audio alongside text.

AI Hardware and Software

AI advancements are driven by specialized hardware and software:

- GPUs (graphics processing units) with AI-specific enhancements have overtaken CPUs for machine learning tasks.

- Programming languages like Python dominate AI development, replacing older languages like Prolog.

- Moore’s Law (transistor counts doubling roughly every 18 to 24 months) and Huang’s Law (rapid GPU improvements) fuel AI’s accelerating progress.

AI and Machine Learning Applications

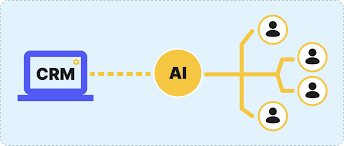

AI and machine learning have become integral to many essential technologies of the 2020s, powering search engines (Google Search), online advertising (AdSense, Facebook), recommendation systems (Netflix, YouTube, Amazon), and internet traffic management. They also drive virtual assistants (Siri, Alexa), autonomous vehicles (drones, ADAS, self-driving cars), automatic language translation (Google Translate, Microsoft Translator), facial recognition (Apple’s Face ID, Google’s FaceNet), and image labeling (Facebook, TikTok, Apple’s iPhoto). The deployment of AI in organizations is often overseen by a Chief Automation Officer (CAO).

AI in Healthcare

AI is transforming medicine and medical research, improving diagnostics, treatment, and patient outcomes. Ethically, under the Hippocratic Oath, medical professionals may be compelled to use AI when it can enhance accuracy in diagnosis and care. AI-driven big data analysis is advancing research in fields like organoid and tissue engineering. It also helps address disparities in research funding and accelerates biomedical discoveries.

Key breakthroughs include AlphaFold 2’s rapid protein structure predictions (2021), AI-guided discovery of antibiotics for drug-resistant bacteria (2023), and machine learning applications in Parkinson’s disease research (2024), which dramatically sped up drug screening while reducing costs.

AI in Gaming

Since the 1950s, AI has been central to game development and competition. Milestones include:

- 1997: IBM’s Deep Blue defeated chess champion Garry Kasparov.

- 2011: IBM Watson won Jeopardy! against top champions.

- 2016-2017: AlphaGo defeated top-ranked Go players, including Lee Sedol and Ke Jie.

- 2019: DeepMind’s AlphaStar reached grandmaster level in StarCraft II.

- 2021: AI outperformed human champions in Gran Turismo.

- 2024: Google DeepMind’s SIMA autonomously played nine open-world video games and executed tasks based on natural language instructions.

AI in Mathematics

Large language models like GPT-4, Gemini, and Claude are increasingly used in mathematics, though they may generate incorrect solutions. Enhancements like supervised fine-tuning and reasoning-step training have improved accuracy.

Recent advancements include:

- Qwen2-Math (Alibaba, 2024): Achieved top accuracy on competition math problems.

- rStar-Math (Microsoft, 2025): Used Monte Carlo tree search and step-by-step reasoning to solve 90% of MATH benchmark problems.

- AlphaTensor, AlphaGeometry, AlphaProof (DeepMind): Specialized AI models for theorem proving and advanced problem-solving.

Some AI models serve as educational tools, while others contribute to topological deep learning and mathematical discoveries.

AI in Finance

AI is widely deployed in finance, from retail banking to automated investment advising. However, experts note that AI’s impact may primarily streamline operations and reduce jobs rather than drive groundbreaking financial innovation.

AI in Military Applications

AI enhances military capabilities in command, control, communications, and logistics. Key applications include threat detection, target acquisition, cyber operations, and autonomous combat systems. AI-driven strategies have been implemented in conflicts in Iraq, Syria, Israel, and Ukraine.

Generative AI

Generative AI (GenAI) creates text, images, video, and other media using deep learning models. Advances in transformer-based neural networks have driven an AI boom, leading to innovations such as:

- Chatbots: ChatGPT, Copilot, Gemini, LLaMA

- Text-to-Image: Stable Diffusion, Midjourney, DALL·E

- Text-to-Video: Sora

Generative AI is reshaping industries, including software development, marketing, healthcare, and entertainment. However, concerns persist regarding cybercrime, deepfakes, job displacement, and intellectual property rights.

AI Agents

AI agents autonomously perceive environments, make decisions, and execute tasks in fields such as virtual assistance, robotics, gaming, and self-driving vehicles. Many incorporate machine learning to refine performance over time, optimizing efficiency within computational and operational constraints.

Industry-Specific AI Applications

AI is deployed across countless industries, solving specialized challenges:

- Disaster Management: AI analyzes GPS and social media data for evacuation planning and real-time updates.

- Agriculture: AI optimizes irrigation, pest control, yield prediction, and greenhouse automation.

- Astronomy: AI aids in exoplanet discovery, solar activity forecasting, and gravitational wave analysis.

- Politics: During India’s 2024 elections, AI-generated deepfakes and speech translations were used for voter engagement, with an estimated $50 million spent on AI-generated content.

AI’s transformative impact continues to expand, reshaping industries, research, and daily life.

Artificial Intelligence History

The study of mechanical or “formal” reasoning dates back to ancient philosophers and mathematicians. This exploration of logic laid the groundwork for Alan Turing’s theory of computation, which proposed that a machine manipulating simple symbols like “0” and “1” could simulate any form of mathematical reasoning. Alongside advances in cybernetics, information theory, and neurobiology, this idea spurred researchers to explore the possibility of building an “electronic brain.” Early milestones in artificial intelligence (AI) included McCulloch and Pitts’ 1943 model of “artificial neurons” and Turing’s influential 1950 paper Computing Machinery and Intelligence, which introduced the Turing test and argued that machine intelligence was plausible.

AI research formally emerged as a field in 1956 during a workshop at Dartmouth College. The participants became key figures in the field, leading research efforts in the 1960s. Their teams developed programs that astonished the public—machines were learning checkers strategies, solving algebraic word problems, proving logical theorems, and even communicating in English. By the late 1950s and early 1960s, AI research labs had been established at leading universities in the U.S. and the U.K.

During the 1960s and 1970s, researchers were optimistic that AI would soon achieve general intelligence. In 1965, Herbert Simon predicted that within two decades, machines would be capable of any task a human could perform. Marvin Minsky echoed this sentiment in 1967, claiming that AI would be largely solved within a generation. However, the challenges proved more formidable than expected. In 1974, funding for AI research was drastically reduced in both the U.S. and the U.K., following criticism from Sir James Lighthill and pressure from Congress to prioritize more practical projects. Additionally, the 1969 book Perceptrons by Minsky and Papert cast doubt on neural networks, leading to widespread skepticism about their potential. This period of disillusionment, known as the “AI winter,” made securing funding for AI projects difficult.

AI experienced a resurgence in the early 1980s, driven by the commercial success of expert systems—software that mimicked human expert reasoning. By 1985, the AI market exceeded a billion dollars. Inspired by Japan’s ambitious Fifth Generation Computer Project, the U.S. and U.K. governments renewed their support for academic AI research. However, the collapse of the Lisp Machine market in 1987 triggered another prolonged AI winter.

Until this point, most AI research had focused on symbolic representations of cognition, modeling intelligence with high-level symbols to represent concepts such as goals, beliefs, and logical facts. By the late 1980s, some researchers began questioning whether this symbolic approach could truly capture human cognition, particularly in areas like perception, robotics, learning, and pattern recognition. This led to a shift toward “sub-symbolic” methods. Rodney Brooks challenged the need for internal representations, focusing instead on engineering machines that could interact with their environments. Meanwhile, Judea Pearl and Lotfi Zadeh pioneered methods for reasoning under uncertainty. The most significant breakthrough, however, was the revival of connectionism—research into artificial neural networks—led by Geoffrey Hinton and others. In 1990, Yann LeCun demonstrated that convolutional neural networks (CNNs) could effectively recognize handwritten digits, setting the stage for modern deep learning.

AI regained credibility in the late 1990s and early 2000s through mathematically rigorous approaches and targeted problem-solving. This narrow focus allowed researchers to produce measurable results and collaborate with fields like statistics, economics, and applied mathematics. By 2000, AI-driven solutions were widely used, though often not labeled as “AI” due to the so-called “AI effect.” Some researchers, concerned that AI was straying from its goal of general intelligence, established the subfield of artificial general intelligence (AGI) around 2002. By the 2010s, AGI research had gained substantial funding.

The modern AI boom began in 2012, when deep learning started outperforming traditional methods on industry benchmarks. This success was fueled by advancements in hardware—such as faster processors, GPUs, and cloud computing—and access to vast datasets like ImageNet. As deep learning rapidly surpassed other techniques, interest and investment in AI skyrocketed. Between 2015 and 2019, machine learning research publications increased by 50%.

By the mid-2010s, concerns over fairness, ethics, and AI alignment became central topics in machine learning research. The field expanded dramatically, with funding surging and more researchers dedicating their careers to these issues. The alignment problem emerged as a critical area of academic study.

In the late 2010s and early 2020s, organizations pursuing AGI produced high-profile breakthroughs that captured global attention. In 2016, DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s top Go players, demonstrating the power of reinforcement learning. OpenAI’s GPT-3, released in 2020, showcased unprecedented natural language capabilities. The launch of ChatGPT on November 30, 2022, marked a turning point: it became the fastest-growing consumer software application in history at the time, with over 100 million users in two months. AI was now firmly embedded in public consciousness.

This surge in AI capabilities led to an investment frenzy. By 2022, annual AI investment in the U.S. alone reached approximately $50 billion, with AI specialists making up 20% of new computer science PhDs. Job postings related to AI numbered around 800,000 in the U.S. that year. By 2024, 22% of newly funded startups identified as AI companies, cementing AI’s role as a dominant force in technology and business.