Healthcare Cloud Marketplace

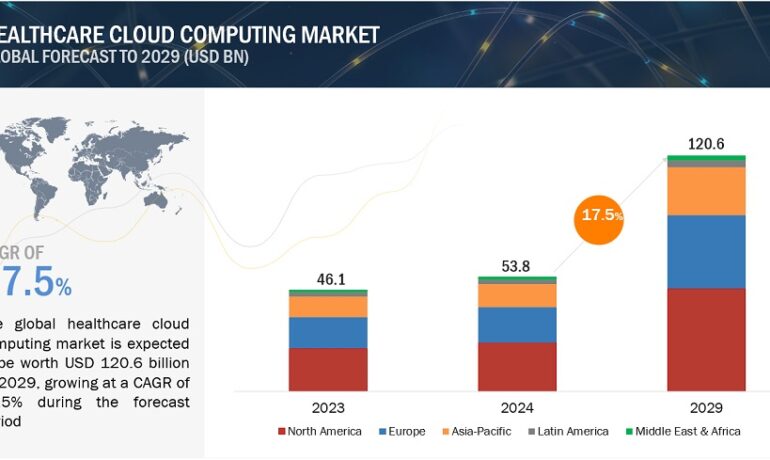

Healthcare Cloud Computing Market: A Comprehensive Overview and Future Outlook

Vantage Market Research Report: Insights into Healthcare Cloud Computing by 2030

WASHINGTON, D.C., February 6, 2024 /EINPresswire.com/ -- The global Healthcare Cloud Marketplace was valued at USD 38.25 billion in 2022 and is projected to grow at a compound annual growth rate (CAGR) of 18.2%