Salesforce Data Cloud Pioneer

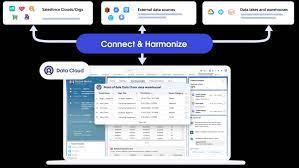

While many organizations are still building their data platforms, Salesforce Data Cloud Pioneer has made a significant leap forward. By seamlessly integrating metadata, Salesforce has transformed the modern data stack into a comprehensive application platform known as the Einstein 1 Platform. Led by Muralidhar Krishnaprasad, executive vice president of engineering at Salesforce, the Einstein