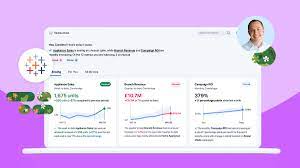

Tableau’s first generative AI assistant is now generally available.

Generative AI for Tableau brings data prep to the masses.

Earlier this month, Tableau launched its second platform update of 2024, announcing that its first two GenAI assistants would be available by the end of July, with a third set for release in August. The first of these, Einstein Copilot for Tableau Prep, became generally available on July 10.

Tableau initially unveiled its plans to develop generative AI capabilities in May 2023 with the introduction of Tableau Pulse and Tableau GPT. Pulse, an insight generator that monitors data for metric changes and uses natural language to alert users, became generally available in February. Tableau GPT, now renamed Einstein Copilot for Tableau, moved into beta testing in April.

Following Einstein Copilot for Tableau Prep, Einstein Copilot for Tableau Catalog is expected to be generally available before the end of July. Einstein Copilot for Tableau Web Authoring is set to follow by the end of August.

With these launches, Tableau joins other data management and analytics vendors, such as AWS, Domo, Microsoft, and MicroStrategy, that have already made generative AI assistants generally available. Others, including Qlik, dbt Labs, and Alteryx, have announced similar plans but have not yet moved their assistants out of preview.

Tableau’s generative AI capabilities are comparable to those of its competitors, according to Doug Henschen, an analyst at Constellation Research. In some areas, such as data cataloging, Tableau’s offerings are even more advanced.

“Tableau is going GA later than some of its competitors. But capabilities are pretty much in line with or more extensive than what you’re seeing from others,” Henschen said.

In addition to the generative AI assistants, Tableau 2024.2 includes features such as the ability to embed Pulse in applications.

Based in Seattle and a subsidiary of Salesforce, Tableau has long been a prominent analytics vendor.
Its first 2024 platform update highlighted the launch of Pulse, while the final 2023 update introduced new embedded analytics capabilities.

Generative AI assistants are proliferating because they can enable non-technical workers to work with data and make data experts more efficient.

Historically, the complexity of analytics platforms, which require coding skills and data literacy, has limited their widespread adoption. Studies indicate that only about one-quarter of employees regularly work with data.

Vendors have attempted to overcome this barrier by introducing natural language processing (NLP) and low-code/no-code features. However, NLP features have been limited by small vocabularies that require specific business phrasing, while low-code/no-code features support only basic tasks.

Generative AI has the potential to change this dynamic. Large language models (LLMs), such as those powering ChatGPT and Google Gemini, have extensive vocabularies and can interpret user intent, enabling true natural language interactions. That makes data exploration and analysis accessible to non-technical users and reduces coding requirements for data experts.

In response to these advances, many data management and analytics vendors, including Tableau, have made generative AI a focal point of their product development. Tech giants such as AWS, Google, and Microsoft, as well as specialized vendors, have invested heavily in the technology.

Einstein Copilot for Tableau Prep, now generally available, lets users describe a calculation in natural language, which the tool interprets to create the formula for a calculated field in Tableau Prep. Previously, writing such a formula required knowing the relevant objects, fields, and functions, along with their limitations.

Einstein Copilot for Tableau Catalog, set for release later this month, will enable users to add descriptions for data sources, workbooks, and tables with one click.
In August, Einstein Copilot for Tableau Web Authoring will allow users to explore data in natural language directly from Tableau Cloud Web Authoring, producing visualizations, formulating calculations, and suggesting follow-up questions.

Tableau’s generative AI assistants are designed to improve efficiency and productivity for both experts and generalists. The assistants streamline complex data modeling and predictive analysis, automate routine data prep tasks, and provide user-friendly interfaces for data visualization and analysis.

“Whether for an expert or someone just getting started, the goal of Einstein Copilot is to boost efficiency and productivity,” said Mike Leone, an analyst at TechTarget’s Enterprise Strategy Group.

According to Leone, because each planned assistant targets a different part of Tableau’s platform, each offers distinct value at a different stage of the data and AI lifecycle.

Henschen noted that the generative AI assistants for Tableau Web Authoring and Tableau Prep are similar to those other vendors are introducing. However, the generative AI assistant for data cataloging sets Tableau apart.

“Einstein Copilot for Tableau Catalog is unique to Tableau among analytics and BI vendors,” Henschen said. “But it’s similar to GenAI implementations being done by a few data catalog vendors.”

Beyond the generative AI assistants, Tableau’s latest update includes other new capabilities, among them embeddable Pulse and multi-fact relationships.

Of these non-Copilot capabilities, making Pulse embeddable is particularly significant, because bringing its generative AI capabilities into the applications where people already work will make them more effective.

“Embedding Pulse insights within day-to-day applications promises to open up new possibilities for making insights actionable for business users,” Henschen said.

Multi-fact relationships are also noteworthy. They enable users to relate datasets that share dimensions, which helps supply applications that require large amounts of high-quality data.
“Multi-fact relationships are a fascinating area where Tableau is really just getting started,” Leone said. “Providing ways to improve accuracy, insights, and context goes a long way in building trust in GenAI and reducing hallucinations.”

While Tableau has launched its first generative AI assistant and will soon release more, the vendor has not yet disclosed pricing for the Copilots and related features.

The generative AI assistants are available through a bundle named Tableau+, a premium Tableau Cloud offering introduced in June. In addition to the Copilots, Tableau+ includes advanced management capabilities, simplified data governance, data discovery features, and integration with Salesforce Data Cloud.

Generative AI is compute-intensive and costly, so it’s not surprising that Tableau customers will have to pay extra for these capabilities. Some vendors are offering generative AI capabilities for free to attract new users, but Henschen believes customers will eventually bear those costs.

“Customers will want to understand the cost implications of adding these new capabilities,”