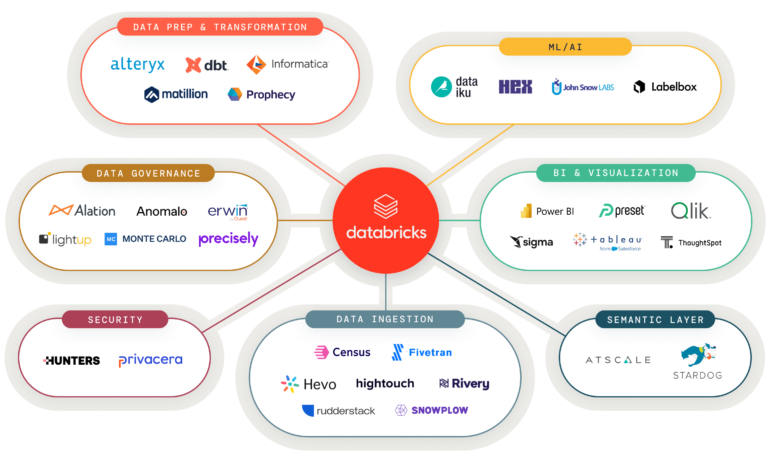

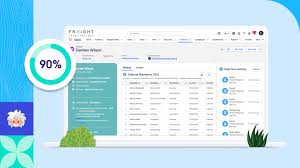

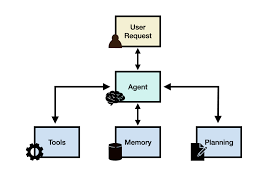

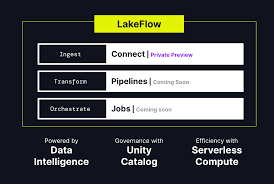

Uplimit Secures $11M in Series A Funding to Enhance AI-Powered Enterprise Learning

SAN FRANCISCO, July 24, 2024 /PRNewswire/ -- Uplimit, a leading provider of AI-powered enterprise learning solutions, has announced the successful completion of an $11M Series A funding round. This funding, led by Salesforce Ventures with participation from existing investors GSV Ventures, Greylock Partners, and Cowboy Ventures, as well as new investors Translink Capital, Workday Ventures, and Conviction, underscores the growing importance of effective employee upskilling in response to the rapid advancements in Generative AI technology.

“Helping employees stay ahead of technological advancements is now a critical priority for the organizations we serve,” said Claudine Emeott, Partner at Salesforce Ventures and Head of the Salesforce Ventures Impact Fund. “AI has the potential to equip both companies and individuals with the necessary skills to thrive, and Uplimit is at the forefront of integrating AI into education and training. We are excited to support their continued growth and look forward to seeing the significant impact they will have in the coming years.”

With this new funding, Uplimit plans to expand its enterprise platform offerings, aiming to provide comprehensive upskilling solutions to more organizations and employees. Traditional education systems often require extensive resources for content creation, personalized feedback, and support, which can hinder scalability.
While some scalable solutions exist, they often compromise on quality and outcomes. Uplimit is addressing this challenge with an innovative approach that combines scale and effectiveness. Their AI-driven platform enhances cohort management, learner support, and course authoring, enabling companies to deliver personalized learning experiences at scale. Uplimit’s recent introduction of AI-enabled role-play scenarios provides learners with immediate feedback, revolutionizing training and development for roles such as managers, support teams, and sales professionals.

“Quality education has historically been a scarce resource, but AI is changing that,” said Julia Stiglitz, CEO and Co-founder of Uplimit. “AI allows us to create and update educational content rapidly, ensuring that learners receive personalized experiences even in large-scale courses. This is crucial as the demand for new skills, driven by the rapid evolution of AI technologies, continues to grow. Uplimit provides the tools needed for employees to quickly grasp new skills, tailored to their current knowledge and needs.”

Uplimit has collaborated with a diverse range of companies, from Fortune 500 giants like GE Healthcare and Kraft Heinz to innovative startups such as Procore. Databricks, a leader in AI-powered data intelligence, was an early adopter of Uplimit’s platform for customer education. “We needed a learning platform that could scale to hundreds of thousands of learners while maintaining high levels of engagement and completion,” said Rochana Golani, VP of Learning and Enablement at Databricks. “Uplimit’s platform offers the perfect blend of real-time human instruction and personalized AI support, along with valuable peer interaction.
This approach is set to be transformative for many of our customers.”

The new funding will enable Uplimit to further enhance its enterprise and customer education offerings, expanding its AI capabilities to include advanced cohort management tools, rapid course feedback integration, interactive practice and assessment modules, and AI-powered course authoring.

Join us on August 14th for our launch event, where we will explore how this funding will accelerate our mission and demonstrate the impact our platform is having on enterprise learning.

About Uplimit

Uplimit is a comprehensive AI-driven learning platform designed to equip companies with the tools needed to train employees and customers in emerging skills. The platform leverages AI to scale learning programs effectively, offering features such as AI-powered learner support, generative AI for content creation, and live cohort management tools. This approach ensures high engagement and completion rates, significantly surpassing traditional online courses. Uplimit also offers a marketplace of advanced courses in AI, technology, and leadership, taught by industry experts. Founded by Julia Stiglitz, Sourabh Bajaj, and Jake Samuelson, Uplimit is backed by Salesforce Ventures, Greylock Partners, Cowboy Ventures, GSV Ventures, Conviction, Workday Ventures, and Translink Capital, with contributions from the co-founders of OpenAI and DeepMind. Notable customers include GE Healthcare, Kraft Heinz, and Databricks. Uplimit has been featured in leading industry publications such as ATD, Josh Bersin, and Fast Company.