It is Time to Implement Data Cloud

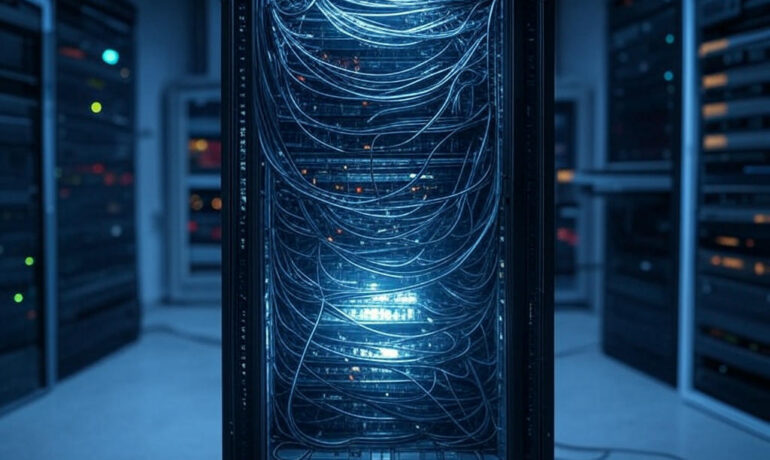

With incomplete data, your 360-degree customer view is limited, which often leads to multiple sales reps working on the same lead. Slow access to the right leads at the right time leads to missed opportunities and delayed closings. If your team cannot trust the data because of silos and inaccuracies, they avoid using it.

With Salesforce Data Cloud you can:

- Unified: Connect and harmonize data from all your Salesforce applications and external data systems, then activate your data with insights and automation across every customer touchpoint.
- Powerful: With Data Cloud and Agentforce, create the most intelligent agents possible, giving them access to the exact data they need to deliver any employee or customer experience.
- Secure: Securely connect your data to any large language model (LLM) without sacrificing data governance and security, thanks to the Einstein 1 Trust Layer.
- Open: Data Cloud is fully open and extensible. Bring your own data lake or model to reduce complexity and leverage what’s already been built, and share data out to popular destinations like Snowflake, Google Ads, or Meta Ads.

Salesforce Data Cloud is the only hyperscale data engine native to Salesforce. It is more than a customer data platform (CDP), and it goes beyond a data lake.

Your Agentforce journey begins with Data Cloud. Agents need the right data to work, and Data Cloud gives them exactly that. Thanks to the Einstein 1 Trust Layer, you can use any data in your organization with Agentforce safely and securely.

Datablazers are Salesforce community members who are passionate about driving business growth with data and AI powered by Data Cloud. Sign up to join a growing group of members who learn, connect, and grow with Data Cloud.

The path to AI success begins and ends with quality data.
Business, IT, and analytics decision makers with high data maturity were 2x more likely than low-maturity leaders to have the quality data needed to use AI effectively, according to our State of Data and Analytics report. “What’s data maturity?” you might wonder. Hang tight; we’ll explain in chapter 1 of this guide.

Data-leading companies also experience:

Your data strategy isn’t just important; it’s critical to getting you to the head of the market with new AI technology by your side. That’s why this Salesforce guide is based on recent industry findings and provides best practices to help your company get the most from your data.

In upcoming insights, Tectonic will focus on the 360-degree customer view with Salesforce Data Cloud. Stay tuned.