Salesforce Enhances Einstein 1 Platform with New Vector Database and AI Capabilities

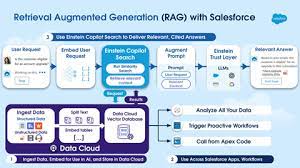

Salesforce (NYSE: CRM) has announced major updates to its Einstein 1 Platform, introducing the Data Cloud Vector Database and Einstein Copilot Search. These features aim to power AI, analytics, and automation by combining business data with large language models (LLMs) across the Einstein 1 Platform.

Unifying Business Data for Enhanced AI

The Data Cloud Vector Database will unify all business data, including unstructured data such as PDFs, emails, and transcripts, with CRM data. This will make AI prompts and Einstein Copilot more accurate and relevant while eliminating the need for expensive and complex fine-tuning of LLMs. Because it is built into the Einstein 1 Platform, the Data Cloud Vector Database lets every business application use unstructured data through workflows, analytics, and automation, improving decision-making and customer insights across Salesforce CRM applications.

Introducing Einstein Copilot Search

Einstein Copilot Search will provide advanced AI search capabilities, delivering precise answers from Data Cloud in a conversational AI experience. By interpreting and responding to complex queries with real-time data from multiple sources, it aims to boost productivity for all business users.

Key Features and Benefits

- Data Cloud Vector Database
- Einstein Copilot Search

Addressing the Data Challenge

With 90% of enterprise data existing in unstructured formats, accessing and using that data in business applications and AI models has been difficult. Forrester predicts that the volume of unstructured data managed by enterprises will double by 2024. Salesforce's new capabilities address this by helping businesses put their data to work, driving AI innovation and improved customer experiences.
Salesforce’s Vision

Rahul Auradkar, EVP and GM of Unified Data Services & Einstein, stated: “The Data Cloud Vector Database transforms all business data into valuable insights. This advancement, coupled with the power of LLMs, fosters a data-driven ecosystem where AI, CRM, automation, Einstein Copilot, and analytics turn data into actionable intelligence and drive innovation.”

Practical Applications

Customer Success Story

Shohreh Abedi, EVP at AAA – The Auto Club Group, highlighted the impact: “With Salesforce automation and AI, we’ve reduced response time for roadside events by 10% and manual service cases by 30%. Salesforce AI helps us deliver faster support and increased productivity.”

Salesforce’s new Data Cloud Vector Database and Einstein Copilot Search promise to revolutionize how businesses use their data, driving AI-powered innovation and improved customer experiences.