Salesforce Transforming Nonprofit Operations with AI

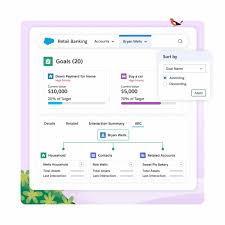

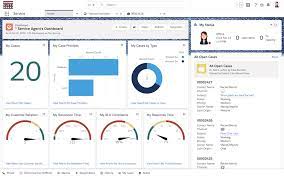

Salesforce Enhances Nonprofit Cloud with Generative AI Capabilities

On August 6, 2024, Salesforce announced that its Nonprofit Cloud is now equipped with generative AI capabilities powered by the Einstein 1 Platform. This integration represents the first time Salesforce’s Industry Cloud portfolio has incorporated the Einstein 1 Platform, signaling a broader commitment to embedding AI tools across its product offerings. The update aims to revolutionize nonprofit operations by providing AI-powered tools for personalized donor engagement, operational efficiency, and funding discovery. Key features include AI-generated fundraising proposals and program summaries, which provide concise insights into grant details, donor histories, and program outcomes.

Transforming Nonprofit Operations with AI

The integration of generative AI into Nonprofit Cloud aligns with Salesforce’s strategy to empower nonprofits to navigate challenges such as donor fatigue, increased operational costs, and rising service demands. Notable enhancements include:

Additionally, Salesforce launched Data Cloud for Nonprofits, enabling a unified, real-time view of donor, volunteer, and program data. This innovation breaks down data silos, empowering nonprofits to create tailored outreach strategies and assess program performance effectively.

Four Pillars of AI Success

Salesforce’s enhancements to Nonprofit Cloud embody its “four-pillar” approach to enterprise AI success:

Key Innovations in Nonprofit Cloud

Salesforce introduced three groundbreaking innovations to address nonprofit-specific challenges:

These features, coupled with Nonprofit Cloud Einstein 1 Edition (which bundles Nonprofit Cloud, Data Cloud, Einstein, Experience Cloud, and Slack), provide nonprofits with comprehensive tools to drive impact.

Nonprofit Adoption and Impact

Nonprofits are already experiencing the transformative potential of AI.
According to Salesforce’s Nonprofit Trends Report, organizations leveraging these AI tools have seen:

Julie Fleshman, CEO of the Pancreatic Cancer Action Network, shared her organization’s success with Nonprofit Cloud: “Salesforce has been instrumental in helping us connect patients with specialized healthcare providers and clinical trials, advancing our mission and saving valuable time.”

Nonprofit Cloud vs. NPSP

While Nonprofit Cloud offers a unified, scalable platform with AI-driven insights and advanced donor management tools, the Nonprofit Success Pack (NPSP) serves as a free, open-source solution for smaller organizations. Here’s a quick comparison:

| Feature | Nonprofit Cloud | NPSP |
| --- | --- | --- |
| Functionality | Comprehensive CRM with advanced tools | Free app with basic CRM functionality |
| Integration | Seamless with other Salesforce products | Requires additional configuration |
| Ease of Use | User-friendly and designed for nonprofits | May require technical expertise |
| Cost | Subscription-based | Free with optional paid add-ons |
| Scalability | Built for growing organizations | Requires customization for growth |
| Ideal Users | Large and mid-sized nonprofits | Small nonprofits |

Maximizing Fundraising with Nonprofit Cloud

Nonprofit Cloud offers nonprofits flexibility and efficiency in managing their fundraising efforts, helping them overcome challenges like donor fatigue and retention. Its advanced features include:

By leveraging these tools, nonprofits can improve engagement, strengthen donor relationships, and make data-driven decisions, ultimately amplifying their impact.

The Tectonic Role

Tectonic has been instrumental in implementing Salesforce Nonprofit Cloud for multiple organizations, ensuring they harness its full potential to optimize operations, engage donors, and achieve their missions. With Salesforce’s AI-driven enhancements and Tectonic’s expertise, nonprofits are poised to navigate challenges, unlock new opportunities, and amplify their societal impact.