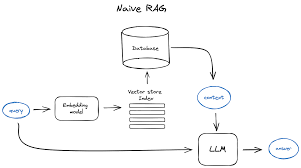

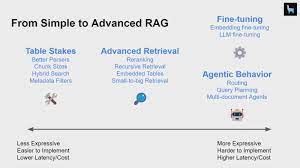

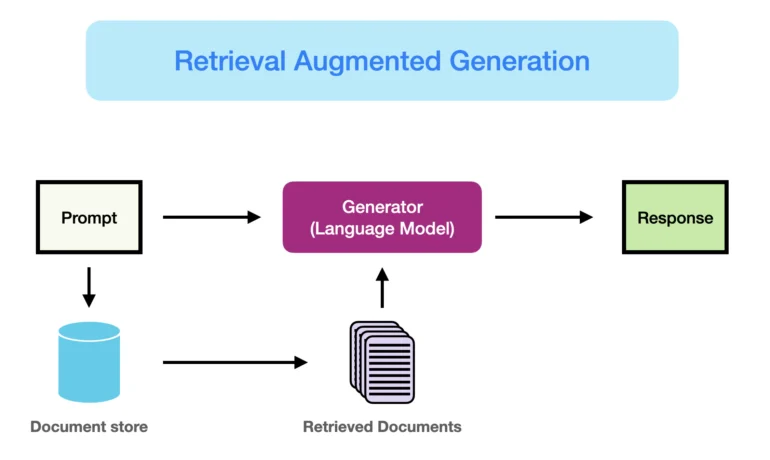

The first notable change in the field of language models is the significant expansion of context windows, together with falling token costs. For instance, Anthropic’s Claude has a context window of around 200,000 tokens, while recent reports indicate that Gemini’s context window can reach up to 10 million tokens. Under such circumstances, Retrieval-Augmented Generation (RAG) may no longer be necessary for many tasks, because all the required data fits within the context window. Several financial and analytical projects have already demonstrated that tasks can be solved without a vector database as intermediate storage. This trend of cheaper tokens and larger context windows is likely to continue, potentially reducing the need for external retrieval mechanisms around LLMs, although they remain relevant for the time being.

If the context window is still insufficient, summarization and context compression can help. LangChain, for example, offers a class called `ConversationSummaryMemory` for this purpose:

```python
# Legacy LangChain import paths; newer releases move OpenAI to langchain_openai.
from langchain.chains import ConversationChain
from langchain.llms import OpenAI
from langchain.memory import ConversationSummaryMemory

llm = OpenAI(temperature=0)
conversation_with_summary = ConversationChain(
    llm=llm,
    # The memory keeps a running summary instead of the full history.
    memory=ConversationSummaryMemory(llm=OpenAI()),
    verbose=True,
)
conversation_with_summary.predict(input="Hi, what's up?")
```

## Knowledge Graphs

As the volume of data continues to grow, navigating it efficiently becomes increasingly critical. In certain cases, understanding the structure and attributes of the data is essential for using it effectively. For example, if the data source is a company’s wiki, an LLM might not recognize a phone number as the company’s contact information unless the structure or metadata says so. Humans can infer meaning from conventions, such as a subdirectory named “Company Information,” but standard RAG may miss such connections.
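The wiki example above can be made concrete with a small sketch. It is only an illustration, not part of any framework: the `Chunk` class, the `find_by_section` helper, and the phone numbers are all hypothetical. The idea is that keeping the breadcrumb path alongside each chunk preserves the context a human would read off the page structure.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Chunk:
    """A piece of wiki text plus the breadcrumb path it was found under."""
    text: str
    path: Tuple[str, ...]  # e.g. ("Company Information", "Contacts")

    def with_context(self) -> str:
        """Prefix the text with its breadcrumb so the structure survives indexing."""
        return " > ".join(self.path) + ": " + self.text


def find_by_section(chunks: List[Chunk], section: str) -> List[Chunk]:
    """Return the chunks whose breadcrumb contains the given section name."""
    return [c for c in chunks if section in c.path]


# Illustrative data only: two phone numbers that look alike as raw text.
chunks = [
    Chunk("+1 555 0100", ("Company Information", "Contacts")),
    Chunk("+1 555 0199", ("Customers", "Acme Corp")),
]

print(chunks[0].with_context())
print([c.text for c in find_by_section(chunks, "Company Information")])
```

Without the path, both chunks are just phone numbers; with it, only the first one can be identified as the company’s own contact number.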
This challenge can be addressed with Knowledge Graphs, also known as Knowledge Maps, which provide both the raw data and metadata describing how different entities are interconnected. This method is referred to as Graph Retrieval-Augmented Generation (GraphRAG). Graphs are well suited to representing and managing structured, interconnected information. Unlike vector databases, they excel at capturing complex relationships and attributes among diverse data types.

## Creating a Knowledge Graph

Creating a knowledge graph typically involves collecting and structuring data, which requires expertise in both the subject matter and graph modeling. However, LLMs can automate a significant portion of this process by analyzing textual data, identifying entities, and recognizing their relationships, which can then be represented in a graph structure. In many cases, an ensemble of vector databases and knowledge graphs can improve accuracy, as discussed previously. For example, search functionality might combine keyword search through a regular database (e.g., Elasticsearch) with graph-based queries.

Frameworks can also help extract entities and relationships from documents. The following example uses LlamaIndex’s `KnowledgeGraphIndex` with a NebulaGraph store:

```python
# Import paths assume llama-index >= 0.10; older releases import from `llama_index`.
from llama_index.core import KnowledgeGraphIndex, StorageContext
from llama_index.graph_stores.nebula import NebulaGraphStore

# Placeholder from the original example: a loader you would write yourself.
documents = parse_and_load_data_from_wiki_including_metadata()

space_name = "Company Wiki"
edge_types, rel_prop_names = ["relationship"], ["relationship"]
tags = ["entity"]

graph_store = NebulaGraphStore(
    space_name=space_name,
    edge_types=edge_types,
    rel_prop_names=rel_prop_names,
    tags=tags,
)
storage_context = StorageContext.from_defaults(graph_store=graph_store)

# Extract up to two (subject, relation, object) triplets per chunk.
index = KnowledgeGraphIndex.from_documents(
    documents,
    storage_context=storage_context,
    max_triplets_per_chunk=2,
    space_name=space_name,
    edge_types=edge_types,
    rel_prop_names=rel_prop_names,
    tags=tags,
)
query_engine = index.as_query_engine()
response = query_engine.query("Tell me more about our Company")
```

Here, the search is based on attributes and related entities rather than on similar vectors. If set up correctly, metadata from the company’s wiki, such as its phone number, becomes accessible through the graph.

## Access Control

One challenge with this system is that data access may not be uniform.
For instance, in a wiki, access could depend on roles and permissions. Similar issues exist in vector databases, which leads to the need for access management mechanisms such as Role-Based Access Control (RBAC), Attribute-Based Access Control (ABAC), and Relationship-Based Access Control (ReBAC). These methods work by evaluating paths between users and resources within a graph, as systems like Active Directory do. To keep access rules intact, permission metadata must be preserved during ingestion in both the knowledge graph and the vector database. Some commercial vector databases already have this functionality built in.

## Ingestion and Parsing

Data needs to be ingested into both graphs and vector databases, but for graphs, formatting is especially critical because it reflects the data’s structure and serves as metadata. One particular challenge is handling complex formats like PDFs, which can contain diverse elements such as tables, images, and text. Extracting structured data from such formats can be difficult, and while tools like LlamaParse exist, they are not foolproof. In some cases, Optical Character Recognition (OCR) may be more effective than parsing.

## Enhancing Answer Quality

Several new approaches are emerging to improve the quality of LLM-generated answers.

While these advancements in knowledge graphs, access control, and retrieval mechanisms are promising, challenges remain, particularly around data formatting and parsing. However, these methods continue to evolve, enhancing LLM capabilities and efficiency.
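To make the access-control requirement discussed earlier more concrete, here is a minimal role-based sketch. It assumes that each document carries a set of allowed roles captured at ingestion. The `Document` class and the `visible_to` function are hypothetical names for illustration, and a real system would delegate this check to the database or an authorization service.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    """A retrievable item with the permission metadata kept at ingestion."""
    text: str
    allowed_roles: FrozenSet[str]


def visible_to(docs: List[Document], user_roles: Set[str]) -> List[Document]:
    """Keep only documents that share at least one role with the user."""
    return [d for d in docs if d.allowed_roles & user_roles]


# Illustrative data only.
docs = [
    Document("Office address and phone number", frozenset({"employee", "hr"})),
    Document("Salary bands", frozenset({"hr"})),
]

# Filter before the text is handed to the LLM, not after generation.
for doc in visible_to(docs, {"employee"}):
    print(doc.text)
```

Filtering at retrieval time means restricted text never reaches the prompt, which is simpler to reason about than trying to censor the generated answer.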