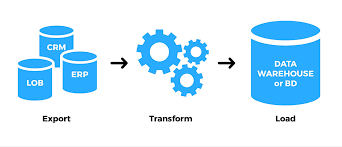

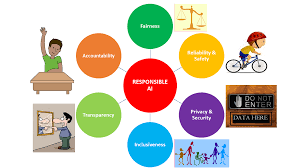

The recent launch of GPT-4o (“o” for “omni”) has captivated everyone with its seamless human-computer interaction. Capable of solving math problems, translating languages in real time, and even answering queries in an emotive, human-sounding voice, GPT-4o is a game-changer. Within hours of its debut, shares of Duolingo, the popular language EdTech platform, plummeted by 26% as investors perceived GPT-4o as a potential threat. But are such fears warranted?

Fears about AI are widespread. Many believe it will become so advanced and efficient that employing humans will be too costly, potentially leading to mass unemployment. Over the past year, it has become clear that artificial intelligence (AI) is among the most disruptive forces in business. AI promises efficiency and speed but also raises concerns about bias and ethics.

In a candid conversation on Mint’s new video series All About AI, Arundhati Bhattacharya, Chairperson and CEO of Salesforce India, dispels these fears and discusses bridging the generation gap and making Salesforce a Great Place to Work.

Forging Unity and Vision

“When I came in, there were disparate groups: sales and distribution, technology and products, support and success. Each group had its leaders, but nobody was bringing them together to create one Salesforce vision and ensure that each group developed the Salesforce DNA,” Arundhati says, reflecting on her arrival in April 2020. She underscores Salesforce’s values-driven approach, highlighting the significance of Trust, Customer Success, Innovation, Equality, and Sustainability. Under Arundhati’s leadership, Salesforce India has risen from 36th to 4th on the Great Places to Work list.

Navigating AI Skepticism

AI advancements are profoundly shaping industries and humanity’s future.
According to Frost & Sullivan’s “Global State of AI, 2022” report, 87% of organizations see AI and machine learning as catalysts for revenue growth, operational efficiency, and better customer experiences. A 2023 IBM survey found that 42% of large businesses have integrated AI, with another 40% considering it. Furthermore, 38% of organizations have adopted generative AI, with an additional 42% contemplating its implementation.

Despite the excitement around AI, skepticism remains. Arundhati offers insights on addressing this skepticism and using AI to benefit society. She suggests a balanced approach, noting that every significant technological change has sparked similar fears. Arundhati argues that AI won’t necessarily lead to massive unemployment, given humanity’s ability to adapt and evolve.

Amidst India’s socio-economic challenges, Arundhati sees AI as a potent tool for positive change. She cites examples like the Prime Minister’s Jan Dhan Yojana, where AI-enabled solutions facilitated broader financial inclusion. “Similarly, AI can greatly improve services in state hospitals where doctors are overworked. AI can gather patient symptoms and present an initial diagnosis, allowing doctors to focus on more critical aspects. The technology is also being used to check sales conversations for accuracy in insurance, ensuring compliance and reducing mis-selling,” she elaborates.

Driving Productivity through AI Integration

Improving productivity in India is a pressing issue, and AI can effectively bridge this gap. However, the term “AI” is often overused and misunderstood. People need to approach AI initiatives with intentionality and focus. First, determine the use cases for AI, such as improving productivity, gaining customer mindshare, or enhancing customer experience. Once that is clear, ensure your organization is structured to provide the right inputs for AI, which involves having a robust data strategy.
Tools like Data Cloud can help by integrating various data sources without copying the data and extracting intelligence from them. Lastly, securing buy-in from employees is crucial for successful AI implementation. Addressing their concerns, communicating the potential risks, and aligning everyone toward the same goal is essential.

Securing the Future: Addressing AI Security Concerns

As AI technologies advance, concerns about their security and potential misuse also rise. Threat actors can exploit sophisticated AI tools intended for positive purposes to carry out scams and fraud. As businesses rely more on AI, it is vital to recognize and protect against these security risks. These risks include data manipulation, automated malware, and abuse through impersonation and hallucination.

To tackle AI security challenges, consider prioritizing cybersecurity measures for AI systems. Salesforce makes substantial investments in cybersecurity daily to stay ahead of potential threats. “We use third-party infrastructure with additional security layers on top. Public cloud infrastructure provides multiple layers of security, much like a compound with perimeter, building, and apartment security,” Arundhati explains.

Empowering the Next Generation Workforce and Fostering Innovation

Transitioning from her previous role as Chairperson of the State Bank of India to leading Salesforce India, Arundhati acknowledges the generational shift in workforce dynamics. She emphasizes understanding and catering to the evolving needs and aspirations of a younger workforce, focusing on engagement and fulfillment beyond monetary incentives.

“Salesforce has a strong giving policy called one by one by one, where we give 1% of our profit, products, and time to the nonprofit sector.
This resonates with the younger workforce, making them feel engaged and fulfilled.”

Through a dedicated startup program, Salesforce fosters a collaborative ecosystem where startups can leverage resources, tools, and connections to thrive and succeed.

Arundhati’s stewardship of Salesforce India epitomizes a transformative leadership approach anchored in values, innovation, and community empowerment. Under her leadership, Salesforce India continues to chart a course toward sustainable growth and inclusive prosperity, poised to redefine the paradigm of corporate success in the digital age.