Salesforce Einstein Discovery

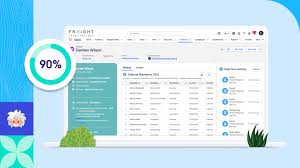

Unlock the Power of Historical Salesforce Data with Einstein Discovery

Streamline Access to Historical Insights

Salesforce Einstein Discovery (formerly Salesforce Discover) eliminates the complexity of manual data extraction, giving you instant access to complete historical Salesforce data without maintaining pipelines or infrastructure.

🔹 Effortless Trend Analysis: Track changes across your entire org over time.
🔹 Seamless Reporting: Accelerate operational insights with ready-to-use historical data.
🔹 Cost Efficiency: Reduce overhead by retrieving trend data from backups instead of querying your production org.

Why Use Historical Backup Data for Analytics?

Most organizations struggle with incomplete or outdated SaaS data, which makes trend analysis slow and unreliable. With Einstein Discovery, you can:

✅ Eliminate data gaps: Access every historical change in your Salesforce org.
✅ Speed up decision-making: Feed clean, structured data directly to BI tools.
✅ Cut infrastructure costs: Skip costly ETL processes and data warehouses.

Einstein Discovery vs. Traditional Data Warehouses

| Traditional Approach | Einstein Discovery |
| --- | --- |
| Requires ETL pipelines and data warehouses | No pipelines needed; backups update automatically |
| Needs ongoing engineering maintenance | Zero maintenance; always in sync with your org |
| Limited historical visibility | Full change history with minute-level accuracy |

💡 Key Advantage: Einstein Discovery automates what used to take months of data engineering.

How It Works

Einstein Discovery leverages Salesforce Backup & Recover to:

🔹 Track every field and record change in real time.
🔹 Feed historical data directly to Tableau, Power BI, or other BI tools.
🔹 Stay schema-aware, with no manual adjustments needed.

AI-Powered Predictive Analytics

Beyond historical data, Einstein Discovery uses AI and machine learning to:

🔮 Predict outcomes (e.g., sales forecasts, churn risk).
📊 Surface hidden trends with automated insights.
🛠 Suggest improvements (e.g., “Increase deal size by focusing on X”).
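The "track every field and record change" idea from the How It Works list boils down to comparing record states over time. The sketch below is a minimal, hypothetical illustration of that idea, not a description of how Salesforce Backup & Recover or Einstein Discovery work internally. It assumes daily backup snapshots are already available as plain Python dictionaries keyed by record ID; the snapshot format, the sample records, and the helpers `field_changes` and `changes_per_field` are all invented for this example. It diffs consecutive snapshots and then counts how often each field changed.

```python
from collections import Counter
from datetime import date

# Hypothetical snapshot format: record ID -> {field name: value} for one backup day.
Snapshot = dict[str, dict[str, object]]
Change = tuple[date, str, str, object, object]  # (day, record_id, field, old, new)


def field_changes(snapshots: dict[date, Snapshot]) -> list[Change]:
    """Diff consecutive snapshots and return one entry per changed field.

    Records that first appear in a snapshot are reported with old=None.
    Deleted records are not reported in this sketch.
    """
    changes: list[Change] = []
    ordered = sorted(snapshots.items())  # oldest snapshot first
    for (_, previous), (day, current) in zip(ordered, ordered[1:]):
        for record_id, fields in current.items():
            before = previous.get(record_id, {})
            for field, new_value in fields.items():
                old_value = before.get(field)
                if old_value != new_value:
                    changes.append((day, record_id, field, old_value, new_value))
    return changes


def changes_per_field(changes: list[Change]) -> Counter:
    """Count how many times each field changed across the whole history."""
    return Counter(field for _, _, field, _, _ in changes)


# Usage with three invented daily snapshots of two Opportunity-like records.
snapshots = {
    date(2024, 1, 1): {"006A": {"StageName": "Prospecting", "Amount": 1000}},
    date(2024, 1, 2): {
        "006A": {"StageName": "Qualification", "Amount": 1000},
        "006B": {"StageName": "Prospecting", "Amount": 500},
    },
    date(2024, 1, 3): {
        "006A": {"StageName": "Closed Won", "Amount": 1200},
        "006B": {"StageName": "Prospecting", "Amount": 500},
    },
}
changes = field_changes(snapshots)
print(changes_per_field(changes))  # Counter({'StageName': 3, 'Amount': 2})
```

A per-field (or per-day) change count like this is the kind of aggregate a BI tool would chart as a trend. A production change history would also need to record deletions and schema changes, which this sketch deliberately skips.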
Supported Use Cases

✔ Regression (e.g., revenue forecasting)
✔ Binary Classification (e.g., “Will this lead convert?”)
✔ Multiclass Classification (e.g., “Which product will this customer buy?”)

Deploy AI Insights Across Salesforce

Once trained, models can be embedded in:

📌 Lightning Pages
📌 Experience Cloud
📌 Tableau Dashboards
📌 Salesforce Flows and Automation

Get Started with Einstein Discovery

🔹 License Required: CRM Analytics Plus or Einstein Predictions.
🔹 Data Prep: Pull data from Salesforce or external sources.
🔹 Bias Detection: Ensure ethical AI with built-in fairness checks.

Transform raw data into actionable intelligence without writing code. Talk to your Salesforce rep to enable Einstein Discovery today!
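For a sense of what a fairness check can measure, here is a minimal sketch of one common metric, the disparate impact ratio: the lowest rate of favorable predictions across groups divided by the highest. This is a generic technique shown for illustration, not a description of Einstein Discovery's built-in bias detection. The function names, the sample predictions, and the 0.8 cutoff (the widely used "four-fifths" rule of thumb) are assumptions made for this example.

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence


def selection_rates(outcomes: Sequence[bool], groups: Sequence[Hashable]) -> dict:
    """Return the share of favorable (True) outcomes for each group value."""
    if len(outcomes) != len(groups):
        raise ValueError("outcomes and groups must be the same length")
    totals: dict = defaultdict(int)
    favorable: dict = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes: Sequence[bool], groups: Sequence[Hashable]) -> float:
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has a favorable outcome; the ratio is undefined")
    return min(rates.values()) / highest


# Hypothetical example: predicted "will convert" flags for leads in two groups.
predicted = [True, True, True, False, True, False, False, False]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]
ratio = disparate_impact_ratio(predicted, group)  # 0.25 / 0.75
flagged = ratio < 0.8  # assumed four-fifths threshold, not an Einstein setting
```

Here group A receives a favorable prediction 75% of the time and group B only 25%, so the ratio is about 0.33 and the model would be flagged for review under the assumed threshold. A ratio near 1.0 means the groups receive favorable predictions at similar rates.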