Understanding AI Agents

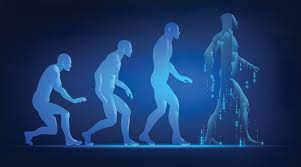

Understanding AI Agents: How They Differ from Copilots and Assistants

The AI landscape is evolving rapidly, and terms like AI agents, copilots, and assistants are often used interchangeably. But what truly distinguishes them? This analysis clarifies their differences, maps them against real-world AI tools, and identifies gaps in today’s market.

Why This Distinction Matters

Understanding AI agent capabilities is crucial for:

By 2025, AI agents are expected to become enterprise-ready, with the market projected to grow 45% annually and reach $47 billion by 2030 (MarketsandMarkets). Microsoft CEO Satya Nadella even suggests that agentic applications could replace traditional SaaS. But what makes an AI tool an agent rather than just a copilot or assistant?

Defining AI Agents, Copilots, and Assistants

1. AI Agents: Autonomous Goal-Seekers

Gartner’s definition (2024): “AI agents are autonomous or semi-autonomous software entities that use AI techniques to perceive, make decisions, take actions, and achieve goals in their digital or physical environments.”

Key capabilities:
✔ Autonomy – Acts independently.
✔ Goal-driven behavior – Works toward broader objectives.
✔ Environmental interaction – Uses tools (actions), sensors (perception), and data retrieval.
✔ Learning & memory – Adapts over time.
✔ Proactivity – Acts on triggers, not just user commands.

Example: Agentforce (Salesforce’s AI agent) autonomously creates marketing campaigns by analyzing CRM data.

2. AI Copilots: Collaborative Partners

Microsoft’s perspective: “Copilots enhance decision-making by offering context-specific recommendations and work collaboratively with humans.”

Key differences from agents:

Example: Cursor (an AI-powered code editor) helps developers by auto-completing and refining code in real time.

3. AI Assistants: Task-Based Helpers

Example: ChatGPT (basic version) answers questions but doesn’t autonomously execute tasks.
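The three definitions differ mainly in who drives the loop. An assistant answers one command. A copilot proposes and a human decides. An agent perceives a trigger and keeps deciding and acting until its goal is met. The Python sketch below illustrates those interaction patterns under stated assumptions. Every function, tool name, and the CRM-style scenario are hypothetical illustrations, not the actual APIs of Agentforce, Cursor, or ChatGPT. The agent's decision policy ("use the next tool not yet tried") is a deliberately naive stand-in for a model-driven planner.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


def assistant(command: str) -> str:
    """Assistant: one user command in, one response out; no tools, no memory."""
    return f"Response to: {command}"


def copilot(context: str, human_accepts: Callable[[str], bool]) -> Optional[str]:
    """Copilot: proposes a context-specific suggestion; the human decides."""
    suggestion = f"Suggested next step for: {context}"
    return suggestion if human_accepts(suggestion) else None


@dataclass
class Agent:
    """Agent: perceives a trigger, then decides and acts until its goal is met."""
    goal: Callable[[dict], bool]              # True once the goal is satisfied
    tools: dict[str, Callable[[dict], None]]  # actions that modify the environment
    memory: list[str] = field(default_factory=list)
    max_steps: int = 10                       # safety bound on autonomous actions

    def decide(self, state: dict) -> str:
        # Hypothetical, deliberately naive policy: pick the first tool not yet used.
        for name in self.tools:
            if name not in self.memory:
                return name
        raise RuntimeError("no unused tool left to pursue the goal")

    def on_trigger(self, event: str, state: dict) -> dict:
        self.memory.append(f"perceived:{event}")  # perception
        for _ in range(self.max_steps):
            if self.goal(state):                  # goal check
                break
            action = self.decide(state)           # decision
            self.tools[action](state)             # action through a tool
            self.memory.append(action)            # memory of what was done
        return state


# Usage with hypothetical CRM-style tools (not a real Agentforce API).
tools = {
    "segment_customers": lambda s: s.update(segment="churn-risk"),
    "draft_campaign": lambda s: s.update(campaign=f"win-back for {s['segment']}"),
}
agent = Agent(goal=lambda s: "campaign" in s, tools=tools)
state = agent.on_trigger("new CRM data", {})
print(state)         # {'segment': 'churn-risk', 'campaign': 'win-back for churn-risk'}
print(agent.memory)  # ['perceived:new CRM data', 'segment_customers', 'draft_campaign']
print(assistant("Summarize this email"))          # Response to: Summarize this email
print(copilot("refactor loop", lambda s: True))   # Suggested next step for: refactor loop
```

The `max_steps` bound is a design choice worth keeping in any real agent loop. Autonomy without a stopping condition is exactly what makes "safe agents" harder to build than copilots.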
The Agent-Copilot-Assistant Spectrum

| Feature | AI Assistant | AI Copilot | AI Agent |
|---|---|---|---|
| Autonomy | ❌ No | ⚠️ Semi | ✅ Yes |
| Goal-driven | ❌ No | ⚠️ Partial | ✅ Yes |
| Tools & Actions | ❌ No | ⚠️ Limited | ✅ Yes |
| Sensors/Triggers | ❌ No | ❌ No | ✅ Yes |
| Memory & Learning | ❌ No | ✅ Yes | ✅ Yes |
| Proactivity | ❌ No | ⚠️ Some | ✅ Yes |

Current Market Gaps: Where AI Tools Fall Short

Despite advancements, most AI tools today don’t fully meet agent or copilot criteria:

1. Most “Agents” Lack True Autonomy
2. Copilots Often Lack Memory
3. Assistants Dominate the Market

Many popular AI tools (Grammarly, Canva AI, Remove.bg) are task-specific assistants, not true copilots or agents.

The Future of AI Agents & Copilots

Key Takeaways

✔ AI agents act autonomously, copilots collaborate, and assistants follow commands.
✔ Today’s “agents” are semi-autonomous; true autonomy is still evolving.
✔ Most AI tools are still assistants, with only a few (like GitHub Copilot) qualifying as copilots.
✔ Memory, proactivity, and sensors are the biggest gaps in current AI offerings.

For businesses and developers, this presents an opportunity: those who build true copilots and safe agents will lead the next wave of AI adoption.
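To apply the spectrum table to a specific product, it can be encoded as data and checked capability by capability. The Python sketch below assumes a strict, hypothetical rule: a tool earns a category only if it meets every minimum level in that category's column, with ⚠️ (Semi, Partial, Limited, Some) treated as one "partial" level. The capability keys and the sample tool profile are illustrative assumptions, not measurements of any real product.

```python
from enum import IntEnum


class Level(IntEnum):
    NO = 0       # ❌
    PARTIAL = 1  # ⚠️ (Semi / Partial / Limited / Some)
    YES = 2      # ✅


CAPABILITIES = ["autonomy", "goal_driven", "tools_actions",
                "sensors_triggers", "memory_learning", "proactivity"]

# Minimum level per capability, transcribed from the spectrum table.
REQUIREMENTS = {
    "AI Assistant": dict.fromkeys(CAPABILITIES, Level.NO),
    "AI Copilot": {
        "autonomy": Level.PARTIAL, "goal_driven": Level.PARTIAL,
        "tools_actions": Level.PARTIAL, "sensors_triggers": Level.NO,
        "memory_learning": Level.YES, "proactivity": Level.PARTIAL,
    },
    "AI Agent": dict.fromkeys(CAPABILITIES, Level.YES),
}


def gaps(profile: dict[str, Level], category: str) -> list[str]:
    """Capabilities where the tool falls short of the category's minimum level."""
    return [c for c in CAPABILITIES
            if profile.get(c, Level.NO) < REQUIREMENTS[category][c]]


def classify(profile: dict[str, Level]) -> str:
    """Highest category (assistant < copilot < agent) with no gaps."""
    best = "AI Assistant"
    for category in REQUIREMENTS:  # dicts preserve insertion order
        if not gaps(profile, category):
            best = category
    return best


# Hypothetical tool: semi-autonomous and tool-using, but with no memory.
tool = {
    "autonomy": Level.PARTIAL, "goal_driven": Level.PARTIAL,
    "tools_actions": Level.PARTIAL, "sensors_triggers": Level.NO,
    "memory_learning": Level.NO, "proactivity": Level.PARTIAL,
}
print(classify(tool))            # AI Assistant
print(gaps(tool, "AI Copilot"))  # ['memory_learning']
```

Under this strict rule, a tool that lacks memory stays in the assistant column even if it is otherwise copilot-like. That mirrors the "Copilots Often Lack Memory" gap. A looser rule, such as a weighted score, would classify differently.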