Fivetran’s Hybrid Deployment

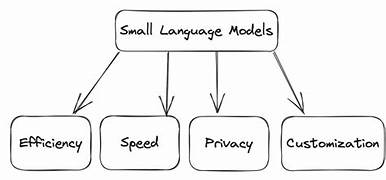

Fivetran’s Hybrid Deployment: A Breakthrough in Data Engineering

In the data engineering world, balancing efficiency with security has long been a challenge. Fivetran aims to shift this dynamic with its Hybrid Deployment solution, designed to seamlessly move data across any environment while maintaining control and flexibility.

The Hybrid Advantage: Flexibility Meets Control

Fivetran’s Hybrid Deployment offers a new approach for enterprises, particularly those handling sensitive data or operating in regulated sectors. Often, these businesses struggle to adopt data-driven practices due to security concerns. Hybrid Deployment changes this by enabling the secure movement of data across cloud and on-premises environments, giving businesses full control over their data while maintaining the agility of the cloud.

As George Fraser, Fivetran’s CEO, notes, “Businesses no longer have to choose between managed automation and data control. They can now securely move data from all their critical sources, like Salesforce, Workday, Oracle, SAP, into a data warehouse or data lake, while keeping that data under their own control.”

How it Works: A Secure, Streamlined Approach

Fivetran’s Hybrid Deployment relies on a lightweight local agent to move data securely within a customer’s environment, while the Fivetran platform handles the management and monitoring. This separation of control and data planes ensures that sensitive information stays within the customer’s secure perimeter. Vinay Kumar Katta, a managing delivery architect at Capgemini, highlights the flexibility this provides, enabling businesses to design pipelines without sacrificing security.

Beyond Security: Additional Benefits

Hybrid Deployment’s benefits go beyond just security. It also offers:

Early adopters are already seeing its value. Troy Fokken, chief architect at phData, praises how it “streamlines data pipeline processes,” especially for customers in regulated industries.
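
The control plane and data plane split described under "How it Works" can be illustrated with a small sketch. This is not Fivetran's API or implementation: `ControlPlane`, `LocalAgent`, `SyncReport`, and the in-memory sources and destinations are all hypothetical names invented for illustration. The only point it tries to show is that row contents stay with the agent, while the managing service sees configuration and metadata.

```python
from dataclasses import dataclass, field


@dataclass
class SyncReport:
    """Metadata only: row contents never appear in a report (hypothetical type)."""
    pipeline: str
    rows_moved: int
    status: str


@dataclass
class ControlPlane:
    """Hypothetical stand-in for the managed service: serves config, collects reports."""
    configs: dict
    reports: list = field(default_factory=list)

    def get_config(self, pipeline: str) -> dict:
        return self.configs[pipeline]

    def receive(self, report: SyncReport) -> None:
        self.reports.append(report)


class LocalAgent:
    """Hypothetical stand-in for the agent running inside the customer's environment."""

    def __init__(self, control_plane: ControlPlane, sources: dict, destinations: dict):
        self.control_plane = control_plane
        self.sources = sources            # data that lives inside the perimeter
        self.destinations = destinations  # also inside the perimeter

    def run(self, pipeline: str) -> None:
        # Ask the control plane what to do; only names cross this boundary.
        cfg = self.control_plane.get_config(pipeline)
        rows = self.sources[cfg["source"]]
        # Move the rows locally; they are never handed to the control plane.
        self.destinations[cfg["destination"]].extend(rows)
        # Report counts and status, not contents.
        self.control_plane.receive(SyncReport(pipeline, len(rows), "ok"))


if __name__ == "__main__":
    sources = {"crm": [{"id": 1, "email": "a@example.com"},
                       {"id": 2, "email": "b@example.com"}]}
    destinations = {"warehouse": []}
    cp = ControlPlane(configs={"crm_to_wh": {"source": "crm",
                                             "destination": "warehouse"}})
    LocalAgent(cp, sources, destinations).run("crm_to_wh")
    print(cp.reports)
```

In this sketch the report carries a pipeline name, a row count, and a status, so the managing side can monitor the sync without ever holding the data itself.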

AI Agent Architectures: Defining the Future of Autonomous Systems

In the rapidly evolving world of AI, a new framework is emerging: AI agents designed to act autonomously, adapt dynamically, and explore digital environments. These AI agents are built on core architectural principles, bringing the next generation of autonomy to AI-driven tasks.

What Are AI Agents?

AI agents are systems designed to autonomously or semi-autonomously perform tasks, leveraging tools to achieve objectives. For instance, these agents may use APIs, perform web searches, or interact with digital environments. At their core, AI agents use Large Language Models (LLMs) and Foundation Models (FMs) to break down complex tasks, similar to human reasoning.

Large Action Models (LAMs)

Just as LLMs transformed natural language processing, Large Action Models (LAMs) are changing how AI agents interact with environments. These models excel at function calling: turning natural language into structured, executable actions, which lets AI agents perform real-world tasks like scheduling or triggering API calls. Salesforce AI Research, for instance, has open-sourced several LAMs designed to facilitate meaningful actions. LAMs bridge the gap between unstructured inputs and structured outputs, making AI agents more effective in complex environments.

Model Orchestration and Small Language Models (SLMs)

Model orchestration complements LAMs by using smaller, specialized models (SLMs) for niche tasks. Instead of relying on resource-heavy models, AI agents can call upon these smaller models for specific functions, such as summarizing data or executing commands, creating a more efficient system. Combined with techniques like Retrieval-Augmented Generation (RAG), SLMs can perform comparably to their larger counterparts on knowledge-intensive tasks.
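
Function calling and orchestration, as described above, can be sketched in a few lines. The example below assumes the model emits a JSON object with `name` and `arguments` keys, which is a common convention but not something the article specifies. The `tool` decorator, the `TOOLS` registry, and both example functions are hypothetical, and `summarize` is a plain-Python placeholder standing in for a call to a small specialized model.

```python
import json
from typing import Any, Callable, Dict

# Registry of callable tools, keyed by the name the model is expected to emit.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the dispatcher can find it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def schedule_meeting(title: str, day: str) -> str:
    # Hypothetical action; a real agent would call a calendar API here.
    return f"Scheduled '{title}' on {day}"


@tool
def summarize(text: str, max_words: int = 5) -> str:
    # Placeholder for a small specialized model: keeps the first few words.
    return " ".join(text.split()[:max_words])


def dispatch(model_output: str) -> Any:
    """Parse a structured call emitted by a model and execute the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    # Assumed model output for the request "book a sync for Friday".
    output = '{"name": "schedule_meeting", "arguments": {"title": "Sync", "day": "Friday"}}'
    print(dispatch(output))
```

The design choice worth noting is that the model only produces data, and ordinary code decides whether and how to act on it, which is also where validation or a human approval step could be placed.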

Vision-Enabled Language Models for Digital Exploration

AI agents are becoming even more capable with vision-enabled language models, which allow them to interact with digital environments. Projects such as WebVoyager and Apple’s Ferret-UI exemplify this: agents can navigate user interfaces, recognize elements via OCR, and explore websites autonomously.

Function Calling: Structured, Actionable Outputs

A fundamental shift is happening with function calling in AI agents, moving from unstructured text to structured, actionable outputs. This allows AI agents to interact with systems more efficiently, triggering specific actions like booking meetings or executing API calls.

The Role of Tools and Human-in-the-Loop

AI agents rely on tools (algorithms, scripts, or even humans-in-the-loop) to perform tasks and guide actions. This approach is particularly valuable in high-stakes industries like healthcare and finance, where precision is crucial.

The Future of AI Agents

With the advent of Large Action Models, model orchestration, and function calling, AI agents are becoming powerful problem solvers. These agents are evolving to explore, learn, and act within digital ecosystems, bringing us closer to a future where AI mimics human problem-solving processes. As AI agents become more sophisticated, they will redefine how we approach digital tasks and interactions.