Transforming the Role of Data Science Teams

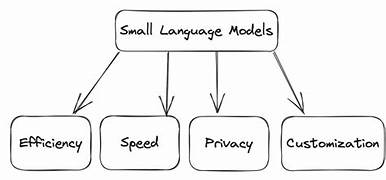

Challenges, Opportunities, and the Evolving Responsibilities of Data Scientists

Generative AI (GenAI) is revolutionizing the AI landscape, offering faster development cycles and reduced technical overhead, and enabling use cases that once seemed unattainable. However, it also introduces new challenges, including the risk of hallucinations and reliance on third-party APIs.

For Data Scientists and Machine Learning (ML) teams, this shift directly affects their roles. GenAI-driven projects, often powered by models from external providers such as OpenAI, Anthropic, or Meta, blur traditional lines. AI solutions are increasingly accessible to non-technical teams, but this accessibility raises fundamental questions about the role and responsibilities of data science teams in ensuring effective, ethical, and future-proof AI systems. Let’s explore how this evolution is reshaping the field.

Expanding Possibilities Without Losing Focus

While GenAI unlocks opportunities to solve a broader range of challenges, not every problem warrants an AI solution. Data Scientists remain vital in assessing when and where AI is appropriate, selecting the right approach (GenAI, traditional ML, or a hybrid of the two), and designing reliable systems.

Although GenAI broadens the toolkit, two factors shape its application:

For example, incorporating features that enable user oversight of AI outputs may prove more strategic than attempting full automation with extensive fine-tuning. Differentiation will not come from simply using large language models (LLMs), which are widely accessible, but from the unique value and functionality they enable.

Traditional ML Is Far from Dead: It’s Evolving with GenAI

While GenAI is transformative, traditional ML continues to play a critical role. Many use cases, especially those unrelated to text or images, are best addressed with ML.
GenAI often complements traditional ML, enabling faster prototyping, enhanced experimentation, and hybrid systems that blend the strengths of both approaches. For instance, traditional ML workflows, which require extensive data preparation, training, and maintenance, contrast with GenAI’s simplified process of prompt engineering, offline evaluation, and API integration. This allows rapid proof of concept for new ideas. Once an idea is proven, teams can refine the solution using traditional ML to optimize cost or latency, or transition to Small Language Models (SLMs) for greater control and performance.

Hybrid systems are increasingly common. For example, DoorDash combines LLMs with ML models for product classification. LLMs handle the cases the ML model cannot classify confidently, and the resulting labels feed back into retraining the ML system, creating a powerful feedback loop.

GenAI Solves New Problems, But Still Needs Expertise

The AI landscape is shifting from bespoke in-house models to fewer, large multi-task models provided by external vendors. While this simplifies some aspects of AI implementation, it requires teams to remain vigilant about GenAI’s probabilistic nature and inherent risks.

Key challenges unique to GenAI include:

Data Scientists must ensure robust evaluations, including statistical and model-based metrics, before deployment. Monitoring tools like Datadog now offer LLM-specific observability, enabling teams to track system performance in real-world environments.

Teams must also address ethical concerns, applying frameworks like ComplAI to benchmark models and incorporating guardrails to align outputs with organizational and societal values.

Building AI Literacy Across Organizations

AI literacy is becoming a critical competency for organizations. Beyond technical implementation, competitive advantage now depends on how effectively the entire workforce understands and leverages AI.
Data Scientists are uniquely positioned to champion this literacy by leading initiatives such as internal training, workshops, and hackathons. These efforts can:

The New Role of Data Scientists: A Strategic Pivot

The role of Data Scientists is not diminishing but evolving. Their expertise remains essential to ensure AI solutions are reliable, ethical, and impactful. Key responsibilities now include:

By adapting to this new landscape, Data Scientists will continue to play a pivotal role in guiding organizations to harness AI effectively and responsibly. GenAI is not replacing them; it’s expanding their impact.
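
The hybrid pattern mentioned earlier, in which an ML model answers the cases it is confident about and defers the rest to an LLM whose answers are kept for retraining, can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not a description of DoorDash's or any vendor's actual system. The class `HybridClassifier`, the 0.8 threshold, and the two toy functions are all hypothetical; in practice `ml_predict` would wrap a trained model and `llm_classify` would wrap a call to an LLM provider.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HybridClassifier:
    """Hypothetical router: use the ML model when confident, else fall back to an LLM."""
    ml_predict: Callable[[str], Tuple[str, float]]  # returns (label, confidence in [0, 1])
    llm_classify: Callable[[str], str]              # returns a label
    threshold: float = 0.8                          # assumed cut-off; tune on validation data
    retraining_queue: List[Tuple[str, str]] = field(default_factory=list)

    def classify(self, text: str) -> Tuple[str, str]:
        """Return (label, source), where source is 'ml' or 'llm'."""
        label, confidence = self.ml_predict(text)
        if confidence >= self.threshold:
            return label, "ml"
        # Low confidence: defer to the LLM and keep its answer as a
        # candidate training example for the next ML retraining run.
        llm_label = self.llm_classify(text)
        self.retraining_queue.append((text, llm_label))
        return llm_label, "llm"


def toy_ml_predict(text: str) -> Tuple[str, float]:
    # Stand-in for a trained classifier: a single keyword rule.
    if "milk" in text.lower():
        return "dairy", 0.95
    return "unknown", 0.30


def toy_llm_classify(text: str) -> str:
    # Stand-in for an LLM API call; no network access is made here.
    return "beverage" if "juice" in text.lower() else "other"


clf = HybridClassifier(toy_ml_predict, toy_llm_classify)
for item in ["Whole milk 1L", "Orange juice"]:
    print(item, "->", clf.classify(item))
print("queued for retraining:", clf.retraining_queue)
```

In a sketch like this, the examples collected in `retraining_queue` would normally be reviewed or sampled before being added to the training set, since LLM labels can themselves be wrong.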