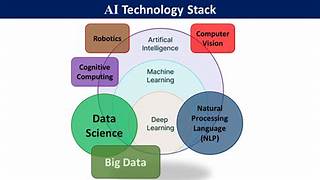

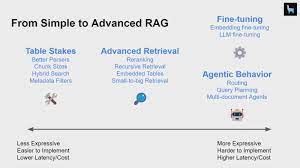

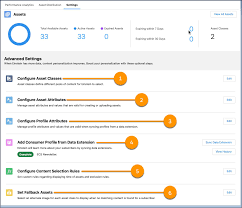

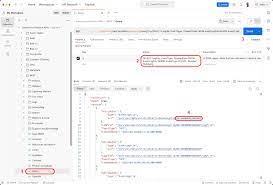

The bold new future of enterprise AI requires a new type of platform. What is Salesforce Einstein 1? One platform that can handle terabytes of disconnected data, gives you the freedom to choose your AI models, and connects directly into the flow of work, all while maintaining customer trust. The Einstein 1 Platform unifies your data, AI, CRM, development, and security into a single, comprehensive platform. It empowers IT, admins, and developers with an extensible AI platform, facilitating fast development of generative apps and automation.

What is Salesforce Einstein 1?

Einstein 1 has a mixture of artificial intelligence tools on the platform, and it mirrors the way the core Salesforce platform is built: standardized and custom. We have out-of-the-box AI features such as sales email generation in Sales Cloud and service replies in Service Cloud.

What is Einstein One?

Einstein One seamlessly integrates our robust data platform with Data Cloud, offering a comprehensive AI and data-driven solution within our CRM service. As the leading comprehensive AI for CRM, Salesforce Einstein transforms the Customer Success Platform by incorporating a suite of integrated AI technologies, making it more intelligent and accessible to innovators worldwide.

Introducing the Einstein One Platform

The future of enterprise AI demands a groundbreaking platform capable of managing terabytes of diverse data, providing the flexibility to select AI models, and seamlessly integrating into daily workflows while maintaining customer trust. The Einstein One Platform consolidates data, AI, CRM, development, and security into a single, comprehensive platform. It empowers IT, administrators, and developers with an extensible AI platform, facilitating rapid development of generative apps and automation.
Salesforce has made significant investments in enhancing the Einstein One platform by introducing two prompt engineering features: a testing center and prompt engineering suggestions. Both demonstrate the company’s commitment to advancing AI capabilities. Data-driven and AI-driven customer experiences are vital in constructing Data Cloud. Salesforce’s predictive AI, Einstein, has been operational since 2016 and makes over a trillion predictions per week. The company continuously evolves its capabilities, introducing generative AI products like Einstein GPT, discussed at Dreamforce 2023.

‘Customer 360’ now represents Salesforce customers’ ability to transform into customer-centric entities, delivering integrated and cohesive experiences across sales, marketing, service, and commerce. Leveraging Data Cloud, structured and unstructured engagement data comes alive, offering a personalized perspective of customer interactions. Einstein is accessible through various Salesforce products, including Sales Cloud, Service Cloud, Marketing Cloud, Salesforce Platform, Analytics Cloud, and Community Cloud.

What is the Einstein One platform?

Salesforce’s Einstein One is a transformative platform that securely integrates data, connects multiple Salesforce products, and empowers customer-centric businesses to create AI-driven apps, revolutionizing CRM interactions.

What applications are connected with the Einstein One platform?

- Einstein Trust Layer: Ensuring the security of generative AI applications within Salesforce, this application acts as a safeguard for data, preventing unauthorized access.
- Data Cloud: Unifying structured and unstructured data, Data Cloud completes the customer 360 profile, integrating seamlessly with the Einstein One platform and making data actionable.
- Einstein Copilot: A built-in conversational assistant, Einstein Copilot enables users to ask questions in natural language and receive intelligent answers, actions, and more.
- Copilot Studio: An essential component of Einstein One, Copilot Studio manages Einstein One and Einstein Copilot, allowing businesses to control user access and use generative AI in their workspace.
- Prompt Builder, Skill Builder, Model Builder: Tools within Copilot Studio, these components empower businesses to create trusted AI prompts, add skills to user access, and choose AI models.

What is Data Cloud for Einstein One?

Data Cloud, combined with the Einstein Trust Layer, provides secure access to a unified data repository, forming a reliable resource for complete customer profiles and supporting AI assistants. Data Cloud facilitates valuable visualizations through reports, dashboards, and tools like Tableau, offering insights to enhance understanding of customers and business operations.

What will Einstein One do for businesses?

Einstein One aims to:

- Increase productivity
- Reduce operational costs
- Enhance the delivery of exceptional customer experiences

The debut of Einstein One marks a significant stride for Salesforce and businesses, offering a secure path to boost productivity through AI and ensuring businesses have continuous access to cutting-edge technologies.

Where can generative AI impact businesses today?

Generative AI has the potential to impact businesses by making repetitive processes faster. Text-based processes, such as repetitive emails or form filling, are a great starting point for businesses looking to enhance their capabilities.

What can generative AI do for sales, service, and field service teams?

Generative AI can positively impact teams by automating processes, offering intelligent insights, and improving overall efficiency. Specific use cases for generative AI in sales, service, and field service teams can be explored through Salesforce’s 101 video series.

What does Einstein One Tableau do?

Einstein One Data Cloud, formerly known as Genie, serves as a metadata management tool connecting data from various sources.
It makes data usable for AI and analysis, integrating with Einstein One, Tableau, and Salesforce’s CRM applications.

Is Salesforce Einstein the same as Tableau?

Formerly known as Einstein Analytics, Tableau CRM is Salesforce’s premium analytics platform, offering a powerful way to explore data, especially for current Salesforce users. Salesforce has renamed Einstein Analytics to Tableau CRM, reflecting its commitment to empowering users to make better decisions faster.

In essence, the Einstein One platform combines Salesforce Data Cloud, Einstein Copilot, and the Einstein Trust Layer, providing secure, connected data and generative AI on a single comprehensive platform.

Salesforce’s Einstein One Platform & the Data-Driven Revolution

This innovation has the potential to revolutionize the way businesses interact with their customers. In this insight, we delve deeper into this transformative development, offering valuable insights, considerations for implementation, and the indispensable expertise of Tectonic as a data-oriented technology partner.

Salesforce Data Cloud and Einstein AI are now seamlessly integrated into the Einstein One Platform. This integration empowers companies to effortlessly connect their diverse data sources, enabling the creation of AI-driven applications with minimal coding effort. Moreover, it forges a cohesive view of your data across