Generative AI Tools

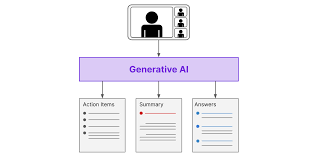

Generative AI Tools: A Comprehensive Overview of Emerging Capabilities

The widespread adoption of generative AI services like ChatGPT has sparked immense interest in leveraging these tools for practical enterprise applications. Today, nearly every enterprise app integrates generative AI capabilities to enhance functionality and efficiency.

A broad range of AI, data science, and machine learning tools now support generative AI use cases. These tools assist in managing the AI lifecycle, governing data, and addressing security and privacy concerns. While such capabilities also aid in traditional AI development, this discussion focuses on tools specifically designed for generative AI.

Not all generative AI relies on large language models (LLMs). Emerging techniques generate images, videos, audio, synthetic data, and translations using methods such as generative adversarial networks (GANs), diffusion models, variational autoencoders, and multimodal approaches.

Here is an in-depth look at the top categories of generative AI tools, their capabilities, and notable implementations. It’s worth noting that many leading vendors are expanding their offerings to support multiple categories through acquisitions or integrated platforms. Enterprises may want to explore comprehensive platforms when planning their generative AI strategies.

1. Foundation Models and Services

Generative AI tools increasingly simplify the development and responsible use of LLMs, initially pioneered through transformer-based approaches by Google researchers in 2017.

2. Cloud Generative AI Platforms

Major cloud providers offer generative AI platforms to streamline development and deployment. These include:

3. Use Case Optimization Tools

Foundation models often require optimization for specific tasks. Enterprises use tools such as:

4. Quality Assurance and Hallucination Mitigation

Hallucination detection tools address the tendency of generative models to produce inaccurate or misleading information.
Leading tools include:

5. Prompt Engineering Tools

Prompt engineering tools optimize interactions with LLMs and streamline testing for bias, toxicity, and accuracy. Examples include:

6. Data Aggregation Tools

Generative AI tools have evolved to handle larger data contexts efficiently:

7. Agentic and Autonomous AI Tools

Developers are creating tools to automate interactions across foundation models and services, paving the way for autonomous AI. Notable examples include:

8. Generative AI Cost Optimization Tools

These tools aim to balance performance, accuracy, and cost effectively. Martian’s Model Router is an early example, while traditional cloud cost optimization platforms are expected to expand into this area.

Generative AI tools are rapidly transforming enterprise applications, with foundational, cloud-based, and domain-specific solutions leading the way. By addressing challenges like accuracy, hallucination, and cost, these tools unlock new potential across industries and use cases, enabling enterprises to stay ahead in the AI-driven landscape.
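To make the cost optimization category (item 8) a little more concrete, here is a minimal Python sketch of one way a router might trade off cost against quality: pick the cheapest model whose estimated quality clears a threshold, and fall back to the highest-quality model otherwise. This is an illustration only, not a description of how Martian's Model Router or any other product works. The model names, prices, and quality scores are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # assumed price in USD, for illustration only
    quality_score: float       # assumed 0..1 score from an offline evaluation


def route(options, min_quality):
    """Return the cheapest option meeting min_quality.

    If no option meets the threshold, fall back to the highest-quality one.
    """
    if not options:
        raise ValueError("no model options provided")
    eligible = [o for o in options if o.quality_score >= min_quality]
    if eligible:
        return min(eligible, key=lambda o: o.cost_per_1k_tokens)
    return max(options, key=lambda o: o.quality_score)


# Hypothetical catalog; none of these values describe real models.
CATALOG = [
    ModelOption("small-model", 0.10, 0.70),
    ModelOption("medium-model", 0.50, 0.85),
    ModelOption("large-model", 2.00, 0.95),
]

if __name__ == "__main__":
    for threshold in (0.5, 0.8, 0.99):
        print(threshold, route(CATALOG, threshold).name)
```

A real router would likely estimate quality per request rather than per model, and would account for latency and token counts as well. The fixed threshold here is simply the smallest design that shows the trade-off.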