SMPL: A Virtual “Robot” for Embodied AI

Embodied AI isn’t just for physical robots; it’s equally vital for virtual humans. Surprisingly, there’s a significant overlap between training robots to move and teaching avatars to behave like real people. Embodiment connects an AI agent’s “brain” to a “body” that navigates and interacts with the world, whether real or virtual, grounding AI in a dynamic 3D environment.

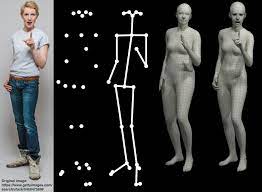

The Skinned Multi-Person Linear (SMPL) model is a realistic 3D model of the human body that is based on skinning and blend shapes and is learned from thousands of 3D body scans.

Virtual Humans and SMPL

The most common “body” for virtual humans is the Skinned Multi-Person Linear (SMPL) model, a parametric 3D model encapsulating human shape and movement. SMPL represents body shape, pose, facial expressions, hand gestures, soft-tissue deformations, and more in about 100 numbers. This post explores why SMPL can be thought of as a “robot.”
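To make “about 100 numbers” concrete, here is a minimal sketch of how such a parameter vector could be packed. The sizes follow the original body-only SMPL model (10 shape coefficients, 24 joints with 3 axis-angle values each, plus a 3D translation); extensions that add hands and faces use more parameters. The class name `SmplParams` and its fields are illustrative assumptions, not an official API.

```python
from dataclasses import dataclass, field

import numpy as np

NUM_BETAS = 10   # shape coefficients (assumed: the common 10-component setting)
NUM_JOINTS = 24  # root joint + 23 body joints in the original SMPL model


@dataclass
class SmplParams:
    """Hypothetical container for one SMPL body in one frame."""
    betas: np.ndarray = field(default_factory=lambda: np.zeros(NUM_BETAS))
    pose: np.ndarray = field(default_factory=lambda: np.zeros((NUM_JOINTS, 3)))
    transl: np.ndarray = field(default_factory=lambda: np.zeros(3))

    def to_vector(self) -> np.ndarray:
        """Flatten shape, pose, and translation into a single vector."""
        return np.concatenate([self.betas, self.pose.ravel(), self.transl])

    @classmethod
    def from_vector(cls, vec: np.ndarray) -> "SmplParams":
        """Inverse of to_vector: split a flat vector back into its parts."""
        expected = NUM_BETAS + NUM_JOINTS * 3 + 3
        if vec.shape != (expected,):
            raise ValueError(f"expected a vector of length {expected}")
        pose_end = NUM_BETAS + NUM_JOINTS * 3
        return cls(
            betas=vec[:NUM_BETAS].copy(),
            pose=vec[NUM_BETAS:pose_end].reshape(NUM_JOINTS, 3).copy(),
            transl=vec[pose_end:].copy(),
        )
```

With these assumed sizes, a neutral body in its rest pose is simply `SmplParams()`, and `SmplParams().to_vector()` has 85 entries.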

Virtual Humans as Robots

The goal is to create virtual humans that behave like real ones, embodying AI that perceives, understands, plans, and executes actions to change its environment. In a recent talk at Stanford, I described virtual humans as “3D human foundation agents,” akin to robots. Replace the SMPL body with a humanoid robot and the virtual world with the real world, and the challenges are quite similar.

Key Differences Between Virtual and Physical Robots

However, virtual humans must move convincingly like real humans, which isn’t always necessary for physical robots. Another difference is physics; while real-world robots can’t ignore physics, virtual worlds can selectively model real-world physics, making training “SMPL robots” easier. Plus, SMPL never breaks down!

SMPL as a Universal Humanoid

SMPL serves as a “universal language” of behavior. At Meshcapade, we often call it a “secret decoder ring.” Various data forms like images, video, IMUs, 3D scans, or text can be encoded into SMPL format. This data can then be decoded back into the same formats or retargeted to new humanoid characters, such as game avatars using the Meshcapade UEFN plugin for Unreal or even physical robots.

AMASS: A Warehouse of Human Behavior

An early paper at Meshcapade was AMASS, the world’s largest collection of 3D human movement data in a unified format (SMPL-X). Modern AI requires large-scale data to learn human behavior, and most deep learning methods modeling human motion rely on AMASS for training data.

Researchers mine AMASS to train diffusion models to generate human movement. Adding text labels (see BABEL) enables conditioning generative models of motion on text. With speech and gesture data (see EMAGE), full-body avatars can be driven purely by speech. AMASS continues to grow, aiming to catalog all human behaviors.

Learning from Humans

At Perceiving Systems and Meshcapade, we use data like AMASS to train virtual humans and robots. For example, OmniH2O uses AMASS to retarget SMPL to a humanoid robot, and reinforcement learning methods mimic human behavior using AMASS data.

Methods like WHAM can estimate SMPL from video in 3D world coordinates, crucial for robotic applications. This allows robots to learn from video demonstrations encoded into SMPL format, using an encoder for input and a decoder for output retargeting.
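The decoding step can be illustrated with a deliberately naive retargeting sketch: copy per-joint rotations from an SMPL pose onto a robot by joint name, dropping joints the robot does not have. The robot joint names and the `SMPL_TO_ROBOT` table are hypothetical. Real retargeting also has to handle different bone lengths, rest poses, and joint limits, which this sketch ignores.

```python
from typing import Dict, Tuple

AxisAngle = Tuple[float, float, float]

# Hypothetical mapping from SMPL joint names to an imaginary robot's joints.
SMPL_TO_ROBOT: Dict[str, str] = {
    "left_knee": "l_knee_joint",
    "right_knee": "r_knee_joint",
    "left_elbow": "l_elbow_joint",
    "right_elbow": "r_elbow_joint",
}


def retarget(
    smpl_pose: Dict[str, AxisAngle],
    joint_map: Dict[str, str] = SMPL_TO_ROBOT,
) -> Dict[str, AxisAngle]:
    """Copy rotations for mapped joints; silently skip unmapped SMPL joints."""
    return {
        joint_map[name]: rotation
        for name, rotation in smpl_pose.items()
        if name in joint_map
    }
```

For example, a pose containing `left_knee` and `head` would yield a robot command for `l_knee_joint` only, since this imaginary robot has no head joint in its mapping.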

SMPL as the “Latent Space”

In machine learning, encoder-decoder architectures encode data into a latent space, which is typically compact. SMPL, though not truly latent because its parameters are interpretable, serves as a compact representation of humans. It factors body shape from pose, models how the body surface deforms with pose using “pose corrective” blend shapes, and uses principal component analysis to compress body shape into a small number of coefficients.
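The linear part of this factorization can be sketched in a few lines of NumPy. The sketch below adds shape offsets (a linear combination of PCA shape directions) and pose-corrective offsets (linear in the joint rotation matrices) to a template mesh. It leaves out joint regression and the final skinning step, and the function and argument names are assumptions for illustration. The learned bases would come from a trained model, not from this code.

```python
import numpy as np


def rodrigues(axis_angle: np.ndarray) -> np.ndarray:
    """Convert one axis-angle vector of shape (3,) to a 3x3 rotation matrix."""
    angle = np.linalg.norm(axis_angle)
    if angle < 1e-8:
        return np.eye(3)
    x, y, z = axis_angle / angle
    K = np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def blend_template(template, shape_dirs, pose_dirs, betas, pose):
    """Apply shape and pose-corrective blend shapes to a rest-pose template.

    template:   (V, 3) mean mesh vertices
    shape_dirs: (V, 3, B) PCA shape directions
    pose_dirs:  (V, 3, 9 * (J - 1)) pose-corrective directions
    betas:      (B,) shape coefficients
    pose:       (J, 3) axis-angle rotation per joint, root first
    """
    shape_offsets = shape_dirs @ betas  # (V, 3)
    # Pose feature: rotation matrix minus identity for every non-root joint,
    # so the rest pose (all zeros) contributes no correction.
    pose_feature = np.concatenate(
        [(rodrigues(p) - np.eye(3)).ravel() for p in pose[1:]]
    )
    pose_offsets = pose_dirs @ pose_feature  # (V, 3)
    return template + shape_offsets + pose_offsets
```

Because both offsets are linear in their inputs, setting `betas` and `pose` to zero returns the template unchanged, which is a handy sanity check when wiring up real model files.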

Summary

Embodiment is crucial for both physical robots and virtual humans. Viewing virtual humans as robots can benefit robotics. We consider SMPL a virtual robot, collecting human behavior data at scale, learning from it, and retargeting this behavior to other virtual or physical embodiments. SMPL acts as a “universal language” for human movement, translating data into and out of various forms of embodiment.