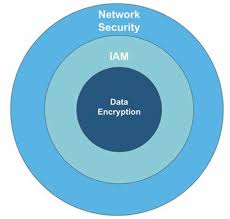

Was 2024 the Year Generative AI Delivered? Here’s What Happened

Industry experts hailed 2024 as the year generative AI would take center stage. Operational use cases were emerging, technology was simplifying access, and general artificial intelligence felt imminent. So, how much of that actually came true?

Well… sort of. As the year wraps up, some predictions have hit their mark, while others, like general AI, remain firmly in development. Let’s break down the trends, insights from investor Tomasz Tunguz, and what’s ahead for 2025.

1. A World Without Reason

Three years into our AI evolution, businesses are finding value, but not universally. Tomasz Tunguz categorizes AI’s current capabilities into three groups: prediction, search, and reasoning.

While prediction and search have gained traction, reasoning models still struggle. Why? Model accuracy. Tunguz notes that unless a model has repeatedly seen a specific pattern, it falters. For example, an AI generating an FP&A chart might succeed, but introduce a twist, like usage-based billing, and it’s lost. For now, copilots and modestly accurate search reign supreme.

2. Process Over Tooling

A tool’s value lies in how well it fits into established processes. As data teams adopt AI, they’re realizing that production-ready AI demands robust processes, not just shiny tools.

Take data quality, a critical pillar for AI success. A handful of dbt tests or point solutions won’t cut it anymore. Teams need comprehensive solutions that deliver immediate value. In 2025, expect a shift toward end-to-end platforms that simplify incident management, enhance data quality ownership, and enable domain-level solutions. The tools that integrate seamlessly and address these priorities will shape AI’s future.

3. AI: Cost Cutter, Not Revenue Generator

For now, AI’s primary business value lies in cost reduction, not revenue generation. Tools like AI-driven SDRs can increase sales pipelines, but often at the cost of quality.
Instead, companies are leveraging AI to cut costs in areas like labor. Examples include Klarna cutting its workforce by two-thirds and Microsoft boosting engineering productivity by 50-75%. Cost reduction works best in scenarios with repetitive tasks, hiring challenges, or labor shortages. Meanwhile, specialized services like EvenUp, which automates legal demand letters, show potential for revenue-focused AI use cases.

4. A Slower but Smarter Adoption Curve

While 2023 saw a wave of experimentation with AI, 2024 marked a period of reflection. Early adopters have faced challenges with implementation, ROI, and rapidly changing tech. According to Tunguz, this “dress rehearsal” phase has informed organizations about what works and what doesn’t. Heading into 2025, expect a more calculated wave of AI adoption, with leaders focusing on tools that deliver measurable value, and deliver it faster.

5. Small Models for Big Gains

In enterprise AI, small, fine-tuned models are gaining favor over massive, general-purpose ones. Why? Small models are cheaper to run and often outperform their larger counterparts when fine-tuned for specific tasks. For example, fine-tuning an 8-billion-parameter model on 10,000 support tickets can yield better results on that task than a general model trained on a broad corpus.

Legal and cost challenges surrounding large proprietary models further push enterprises toward smaller, open-source solutions, especially in highly regulated industries.

6. Blurring Lines Between Analysts and Engineers

The demand for data and AI solutions is driving a shift in responsibilities. AI-enabled pipelines are lowering barriers to entry, making self-serve data workflows more accessible. This trend could consolidate analytical and engineering roles, streamlining collaboration and boosting productivity in 2025.

7. Synthetic Data: A Necessary Stopgap

With finite real-world training data, synthetic datasets are emerging as a stopgap solution.
Tools like Tonic and Gretel create synthetic data for AI training, particularly in regulated industries. However, synthetic data has limits. Over time, relying too heavily on it could degrade model performance, akin to a diet lacking fresh nutrients. The challenge will be finding a balance between real and synthetic data as AI advances.

8. The Rise of the Unstructured Data Stack

Unstructured data, long underutilized, is poised to become a cornerstone of enterprise AI. Only about half of unstructured data is analyzed today, but as AI adoption grows, this figure will rise. Organizations are exploring tools and strategies to harness unstructured data for training and analytics, unlocking its untapped potential. 2025 will likely see the emergence of a robust “unstructured data stack” designed to drive business value from this vast resource.

9. Agentic AI: Not Ready for Prime Time

While AI copilots have proven useful, multi-step AI agents still face significant challenges. Because errors compound across steps (e.g., 90% per-step accuracy falls to about 73% over three steps, and 80% per step falls to roughly 50%), these agents are not yet ready for production use. For now, agentic AI remains more of a conversation piece than a practical tool.

10. Data Pipelines Are Growing, But Quality Isn’t

As enterprises scale their AI efforts, the number of data pipelines is exploding. Smaller, fine-tuned models are being deployed at scale, potentially requiring hundreds of millions of pipelines. However, this rapid growth introduces data quality risks. Without robust quality management practices, teams risk inconsistent outputs, bottlenecks, and missed opportunities.

Looking Ahead to 2025

As AI evolves, enterprises will face growing pains, but the opportunities are undeniable. From streamlining processes to leveraging unstructured data, 2025 promises advancements that will redefine how organizations approach AI and data strategy. The real challenge? Turning potential into measurable, lasting impact.
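
A quick footnote on the agentic AI point in section 9: the compounding effect is easy to check with a short calculation. The sketch below assumes every step succeeds independently and with the same accuracy, which is a simplification of how real agents fail. The function name `chained_accuracy` is illustrative only and does not come from Tunguz or any library.

```python
def chained_accuracy(per_step_accuracy: float, steps: int) -> float:
    """Chance that every step of a multi-step task succeeds.

    Assumes steps are independent and share one accuracy (a simplification).
    """
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    # Independent successes multiply, so accuracy decays geometrically.
    return per_step_accuracy ** steps


if __name__ == "__main__":
    for p in (0.90, 0.80, 0.75):
        end_to_end = chained_accuracy(p, 3)
        print(f"{p:.0%} per step over 3 steps -> {end_to_end:.0%} end to end")
    # 90% per step over 3 steps -> 73% end to end
    # 80% per step over 3 steps -> 51% end to end
    # 75% per step over 3 steps -> 42% end to end
```

Under these assumptions, even a modest drop in per-step accuracy, or a few extra steps, pushes end-to-end reliability toward coin-flip territory, which is why single-step copilots hold up better than multi-step agents today.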