Embedded Salesforce Einstein

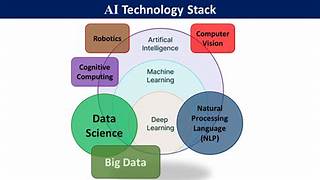

In a world where data is everything, businesses are constantly seeking ways to better understand their customers, streamline operations, and make smarter decisions. Embedded Salesforce Einstein is the answer. Einstein is a powerful AI solution built into the Salesforce platform, and it is changing how companies of every size operate. By combining advanced analytics, automation, and machine learning, Einstein helps businesses boost efficiency, drive innovation, and deliver exceptional customer experiences.

Here's how Salesforce Einstein is transforming business.

Imagine anticipating customer needs, market trends, or operational challenges before they happen. It isn't magic, but Salesforce Einstein's AI-powered insights and predictions come remarkably close. By turning vast amounts of data into actionable insights, Einstein enables businesses to anticipate future scenarios and make well-informed decisions.

Industry insight: In financial services, success hinges on anticipating market shifts and client needs. Banks and investment firms use Einstein to analyze historical market data and client behavior, predicting which financial products will resonate next. For example, investment advisors might receive AI-driven recommendations tailored to individual clients, boosting engagement and satisfaction.

Manufacturers also benefit from Einstein's predictive maintenance tools, which analyze machinery data to anticipate equipment failures. A car manufacturer, for instance, could use these insights to schedule maintenance during off-peak hours, minimizing downtime and preventing costly disruptions.

Personalization is now a necessity. Salesforce Einstein elevates personalization by analyzing customer data to offer tailored recommendations, messages, and services.

Industry insight: In e-commerce, personalized recommendations are often the key to converting browsers into loyal customers. An online bookstore using Einstein might analyze browsing history and past purchases to suggest new releases in the genres a customer loves, driving repeat sales.

In healthcare, Einstein's personalization can improve patient outcomes by supporting customized follow-up care. Hospitals can use Einstein to analyze patient histories and treatment data and send reminders tailored to each patient's needs, improving adherence to care plans and supporting faster recovery.

Salesforce Einstein's sales intelligence tools, such as Lead Scoring and Opportunity Insights, help sales teams focus on the most promising leads. This targeted approach drives higher conversion rates and more efficient sales processes.

Industry insight: In real estate, Einstein helps agents manage large numbers of leads by scoring potential buyers based on their engagement with property listings. A buyer who repeatedly views homes in a specific area is flagged, prompting agents to prioritize outreach and accelerating the sales process.

In the automotive industry, Einstein identifies leads who are closer to purchasing by analyzing behaviors such as online vehicle configuration and test drive bookings. This lets sales teams focus on high-potential buyers and close deals faster.

Automation is at the heart of Salesforce Einstein's ability to streamline processes and boost productivity. By automating repetitive tasks such as data entry and routine customer inquiries, Einstein frees employees to focus on strategic work, improving overall efficiency.

Industry insight: In insurance, Einstein Bots can handle routine tasks like policy inquiries and claim submissions, freeing human agents for more complex issues. The result is faster response times and lower operational costs.

In banking, Einstein-powered chatbots handle routine inquiries such as balance checks and transaction histories. By automating these interactions, banks reduce call-center workload, allowing agents to provide more personalized financial advice.
Einstein Discovery democratizes data analytics, making it easier for non-technical users to explore data and uncover actionable insights. The tool identifies key business drivers and provides recommendations, putting data within reach of everyone.

Industry insight: In healthcare, predictive insights are helping providers identify patients at risk of chronic conditions like diabetes. With Einstein Discovery, providers can flag at-risk individuals early and implement targeted care plans that improve outcomes and reduce long-term costs.

For energy companies, Einstein Discovery analyzes sensor data and weather patterns to predict equipment failures and optimize resource management. A utility company might use these insights to schedule preventive maintenance ahead of storms, reducing outages and improving service reliability.

More Than a Tool – Embedded Salesforce Einstein

Salesforce Einstein is more than just an AI tool. It is a transformative force that enables businesses to unlock the full potential of their data. From predicting trends and personalizing customer experiences to automating tasks and democratizing insights, Einstein equips companies to make smarter decisions and improve performance across industries. Whether in retail, healthcare, or technology, Einstein delivers the tools needed to thrive in today's competitive landscape.

Tectonic empowers organizations with Salesforce solutions that drive organizational excellence. Contact Tectonic today.