AI Safety and Responsibility

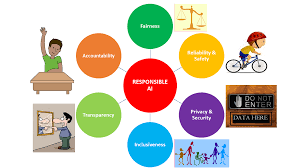

The Future of AI: Balancing Innovation and Trust

Authored by Justin Tauber, General Manager, Innovation and AI Culture at Salesforce, ANZ.

AI promises to transform business operations and free up our most precious resource: time. This is particularly valuable for small businesses, where customer-facing staff must navigate a complex set of products, policies, and data with limited time and support. AI-assisted customer engagement can make interactions more timely, personalized, and intelligent. However, trust is paramount, and businesses must harness AI safely and ethically.

The Trust Challenge

According to the AI Trust Quotient, 89% of Australian office workers don’t trust AI to operate without human oversight, and 62% fear that humans will lose control of AI. Small businesses must therefore build both competence and confidence in using AI responsibly. Companies that successfully combine human and machine intelligence will lead the AI transformation.

Building trust and confidence in AI requires focusing on the employee experience of AI. Employees should be involved early in decision-making, output refinement, and feedback processes, because generative AI outcomes improve when humans are actively involved. Humans need to lead this partnership, so that AI works effectively with humans at the helm.

Strategies for Building Trust

One strategy is to remind employees of AI’s strengths and weaknesses within their workflow. Showing confidence values (how strongly the model believes its output is correct) helps employees handle AI responses with the appropriate level of care: lower-scored content can still be valuable, but it should receive deeper human scrutiny. Prompt templates give staff consistent inputs, which produce more predictable outputs. Explainability, or citing sources for AI-generated content, also addresses concerns about trust and accuracy.

Another strategy focuses on use cases that enhance customer trust.
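To make the confidence-value and prompt-template ideas from the first strategy more concrete, here is a minimal Python sketch. It assumes the model returns a confidence score between 0 and 1 alongside each draft reply. The names `REPLY_TEMPLATE`, `Draft`, `build_prompt`, and `route`, the template fields, and the 0.8 review threshold are all hypothetical and chosen for illustration; they are not part of any Salesforce API. A fixed template gives staff consistent inputs, and the score only decides whether a draft gets a quick check or a full human review, so no draft bypasses a human.

```python
from dataclasses import dataclass
from string import Template

# Hypothetical prompt template: staff supply the same named fields every time,
# so the model always receives inputs in a consistent shape.
REPLY_TEMPLATE = Template(
    "You are a customer service assistant for $business.\n"
    "Customer question: $question\n"
    "Answer using only this policy text: $policy"
)

# Assumed cut-off for illustration only; a real deployment would tune it per use case.
REVIEW_THRESHOLD = 0.8


@dataclass
class Draft:
    """An AI-generated reply plus the model's confidence that it is correct."""
    text: str
    confidence: float  # assumed to be reported by the model on a 0-1 scale


def build_prompt(business: str, question: str, policy: str) -> str:
    """Fill the shared template so every request has the same structure."""
    return REPLY_TEMPLATE.substitute(business=business, question=question, policy=policy)


def route(draft: Draft, threshold: float = REVIEW_THRESHOLD) -> str:
    """Choose how much human scrutiny a draft gets; every path keeps a human involved."""
    if not 0.0 <= draft.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if draft.confidence >= threshold:
        return "quick_review"  # employee checks the draft, then sends it
    return "full_review"  # lower-scored content is kept, but reviewed in depth


if __name__ == "__main__":
    prompt = build_prompt(
        business="Example Florist",
        question="Can I change my delivery date?",
        policy="Delivery dates can be changed up to 24 hours in advance.",
    )
    print(prompt)
    print(route(Draft("Yes, up to 24 hours before delivery.", 0.92)))  # quick_review
    print(route(Draft("I think so, but please check.", 0.55)))  # full_review
```

Keeping a human check on both paths reflects the point that lower-scored content can still be valuable: the threshold changes how much scrutiny a draft receives, not whether a person sees it.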
For trust-enhancing use cases, the sweet spot is where productivity gains and trust-building benefits align. For example, generative AI can reassure customers that a product will arrive on time. Fraud detection and prevention is another such area: AI can flag suspicious transactions for human review, improving the accuracy and effectiveness of fraud detection systems.

Salesforce’s Commitment to Ethical AI

Salesforce keeps humans at the helm of its AI solutions by respecting ethical guardrails in AI product development. It goes further by creating capabilities and solutions that lower the cost of deploying and using AI responsibly. AI safety products help businesses harness AI without significant risk. Salesforce AI products are built with trust and reliability in mind, embodying Trustworthy AI principles to help customers deploy them ethically.

It’s unrealistic and unfair to expect employees, especially in SMBs, to refine every AI-generated output. Salesforce therefore provides businesses with powerful, system-wide controls and intuitive interfaces for making timely, responsible judgments about testing, refining responses, or escalating problems.

Salesforce has invested in ethical AI for nearly a decade, focusing on principles, policies, and protections for itself and its customers. New guidelines for responsible generative AI development expand on its core Trusted AI principles. Updated Acceptable Use Policy safeguards add further protection, and the Einstein Trust Layer protects customer data from external LLMs.

Commitment to a Trusted AI Future

While we’re still in the early days of AI, Salesforce is committed to learning and iterating in close collaboration with customers and regulators to make trusted AI a reality for all.

Originally published in Smart Company.