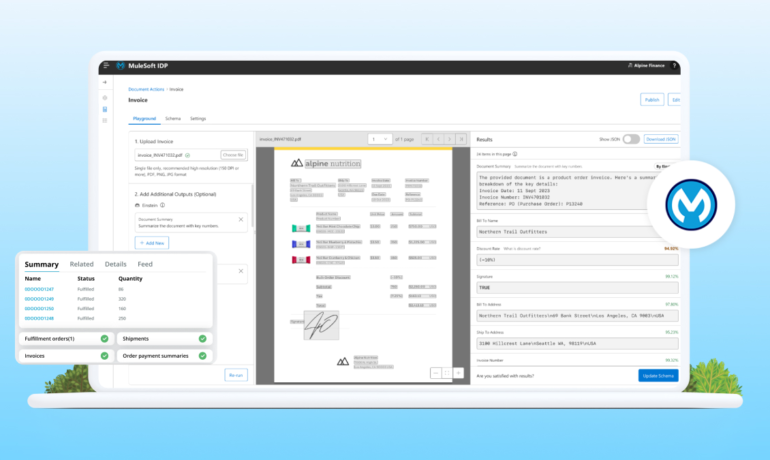

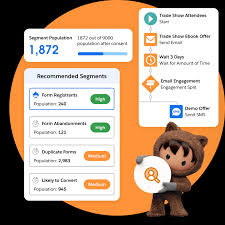

Why Personalized Marketing Matters

Personalized marketing is a game-changer for increasing customer engagement and driving business growth. According to a recent study, it can boost ROI by up to 20%. Yet many marketers struggle to execute effective personalization because of fragmented data and a lack of alignment across teams. To overcome these challenges, marketers must build a solid data foundation, leverage AI-driven segmentation, and use dynamic content strategies. This Tectonic insight outlines the essential steps to achieving personalized marketing at scale.

Step 1: Build a Unified Data Foundation

Marketers today have access to more data than ever before: CRM and sales data, digital advertising data, and ERP, transactional, and accounting data. The challenge is unifying these sources into a single operational customer profile. Without one, analyzing customer behavior and delivering personalized experiences is nearly impossible.

How to Get Your Data in Order:

- Centralize customer data across teams using Marketing Cloud Growth and Advanced Editions, built on the Salesforce platform.
- Leverage AI-powered insights to optimize real-time personalization.
- Establish a “single source of truth” to align marketing, sales, and service teams.

A strong data foundation not only fuels better AI-driven personalization but also enhances analytics and campaign performance.

Step 2: Use AI to Segment with Precision

Segmentation is the backbone of personalized marketing, allowing marketers to tailor campaigns based on customer behavior, demographics, and engagement levels. Traditionally, segmentation was a manual, time-consuming process. AI has revolutionized it by letting marketers create segments from natural-language descriptions.

How AI Improves Segmentation:

- Eliminates the need for SQL expertise, making segmentation accessible to all marketers.
- Generates precise audience segments based on engagement and fit scores.
- Leverages harmonized operational profiles to build complex, data-driven segments.

With AI-driven segmentation, marketers can create hyper-targeted campaigns that resonate with their audience, increasing engagement and conversion rates.

Step 3: Implement Engagement and Fit Scoring

One of the most effective ways to enhance personalization is to score leads and contacts on their engagement and on their fit with your ideal customer profile. This approach helps prioritize high-value prospects and keeps marketing efforts focused on the right audience.

Understanding Engagement Scoring:

Engagement Scoring evaluates how actively leads and contacts interact with your marketing campaigns, through actions such as email clicks, form submissions, and website visits.

Key Metrics Used in Engagement Scoring:

- Email Engagement (e.g., Click, Unsubscribe)
- Website Engagement (e.g., Form Submission, Button Click)
- Message Engagement (e.g., Subscribe, Unsubscribe)

Understanding Fit Scoring:

Fit Scoring assesses how well a lead or contact aligns with your ideal customer profile, using data such as demographic, firmographic, and behavioral attributes.

Key Fit Scoring Criteria:

- Demographics: Age, gender, income level
- Firmographics: Industry, company size, revenue
- Behavioral Patterns: Purchase history, product usage
- Geographics: Location-based targeting
- Technographics: Technology stack compatibility

Step 4: Use Dynamic Content Blocks for Hyper-Personalization

Once your data is structured and your segments are refined, the next step is delivering personalized content. Marketing Cloud Growth and Advanced Editions offer tools such as Cross-Object Merge Fields and Rule-Based Dynamic Content.

How to Leverage These Tools:

- Cross-Object Merge Fields: Pull data from operational customer profiles to create more personalized messages (e.g., referencing a webinar the customer attended or the status of a service case).
- Rule-Based Dynamic Content: Display targeted text and images based on complex customer attributes (e.g., showing a specific image to customers in New York who attended a live event).

These tools allow marketers to go beyond basic personalization, creating highly relevant and engaging experiences for their audience.

Step 5: Combine Engagement and Fit Scoring for Optimal Results

By integrating Engagement and Fit Scoring, marketers can create an overall marketing score that prioritizes the most valuable prospects. This score considers both interaction levels and alignment with the ideal customer profile.

How to Implement Overall Scoring:

- By default, the Engagement Score and the Fit Score are each weighted at 50%.
- Scores range from 0 to 100, so all prospects can be compared on a single scale.
- Businesses can customize the weighting to suit their specific needs.

This holistic scoring model directs marketing efforts toward leads that are both highly engaged and a strong fit for your business.

The Future of Personalized Marketing

Personalization is no longer optional; it is essential. Businesses that embrace advanced personalization techniques see a 10-30% increase in sales and customer retention rates. By implementing the strategies outlined in this guide, marketers can:

- Build a strong data foundation for AI-driven personalization.
- Use engagement and fit scoring to prioritize high-value leads.
- Deliver hyper-personalized content with dynamic marketing tools.

With the right foundation, tools, and AI-driven insights, marketers can unlock the full potential of personalized marketing, driving stronger customer relationships and higher ROI. Now is the time to take action and position your business for sustained success in an increasingly competitive marketplace.

Meet the all-new Salesforce Marketing Cloud Growth edition!
Recently unveiled, this platform is designed specifically to empower small businesses by seamlessly integrating CRM, AI, and data within Salesforce's trusted customer platform, Einstein 1. It promises to redefine marketing automation across sales, service, and commerce. Let's explore how Marketing Cloud Growth is set to revolutionize the way small businesses approach marketing, enabling them to drive growth and efficiency like never before.

Salesforce Marketing Cloud Growth Edition

On February 20, 2024, Salesforce launched the Marketing Cloud Growth edition, aimed specifically at small businesses with B2B marketing needs. With advanced marketing automation tools powered by Einstein's generative AI and Data Cloud, this new edition simplifies marketing tasks, allowing businesses to concentrate on strategic growth.

Salesforce Marketing Cloud Growth Edition integrates seamlessly with the core Salesforce platform, known as the Einstein 1 Platform. This gives users easy access to the robust features of Marketing Cloud within the broader Salesforce ecosystem.

With the Marketing Cloud Growth edition, teams can efficiently:

- Unify various data sources via Data Cloud
- Create and deliver compelling content with Digital Experiences
- Automate complex customer journeys using Flow Builder
- Improve decision-making using Einstein's intelligent analytics and predictions

Marketing Cloud Growth edition is user-friendly and built directly on the Salesforce platform, eliminating the need for external connectors. However, it does not include some advanced features found in Marketing