Gap Between Data and Decisions

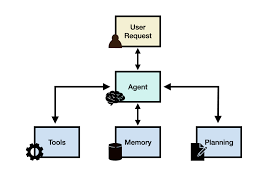

Bridging the Gap Between Data and Decisions

One of the biggest challenges in enterprise technology is the fragmentation of data across disparate systems, commonly referred to as data silos. Salesforce research shows that 81% of IT leaders believe these silos prevent their organizations from fully utilizing their data, while 94% of business leaders feel they should be extracting more value from it. Despite the wealth of available information, only 30% of employees report using data to make decisions.

Tableau Einstein addresses this challenge by enabling self-service analytics through a unified metadata framework. This framework ensures consistent data definitions and structures across an organization, allowing data architects and analysts to create semantic models that simplify complex datasets and make them more accessible to business users. The platform also integrates AI-powered agents into the data authoring process. These agents suggest relationships between data objects and supply business context to improve the reliability of insights.

Integrating AI for Proactive Insights

Southard Jones, Chief Product Officer of Tableau, highlights the need for analytics tools to deliver proactive, autonomous insights within users’ workflows. “Employees often miss critical insights because they lack the time or expertise to analyze complex data sets,” Jones said. “With predictive and generative AI, Tableau Einstein can explore your data and surface insights you might not have considered.”

Features like Pulse and Tableau Agent deliver insights within familiar platforms such as Salesforce and Slack, as well as third-party applications such as Workday. For example, a manager using Workday might receive real-time notifications about team performance metrics or potential indicators of employee attrition, allowing for timely intervention.

Enhancing Trust Through Transparency

Building trust in data analytics is key to adoption.
Tableau Einstein introduces Tableau Semantics, which acts as a bridge between raw data and business terminology. “With Tableau Semantics, we provide clear explanations and data lineage, showing users exactly how metrics are created,” Jones explained. This transparency demystifies complex calculations, making metrics like campaign ROI easier to understand. By aligning technical data with business understanding, the platform aims to make analytics more accessible and trustworthy.

Potential Challenges

While AI-driven analytics holds great promise, it also raises questions about data privacy, implementation complexity, and the learning curve for employees. To realize the benefits of AI-powered insights, organizations must address data governance concerns and train employees to interpret AI-generated insights accurately.

The competitive landscape for AI analytics tools is growing, with alternatives such as Microsoft’s Power BI offering similar capabilities. Organizations will need to compare Tableau Einstein with other options in terms of features, integration potential, and overall cost.

Facilitating Collaboration and Customization

Tableau Einstein’s open, API-driven architecture enables developers to build customized analytical agents tailored to specific enterprise needs. “By opening our APIs, we allow developers to interact with the platform programmatically,” Jones noted. This flexibility supports custom analytics solutions that integrate with other business applications.

The platform also introduces marketplace capabilities for sharing and discovering data models and analytics assets, fostering collaboration and reducing redundant effort across organizations.

Real-World Applications and Early Feedback

Early adopters are already exploring how Tableau Einstein can improve their decision-making.
Leslie Bercher, Senior Director of Performance Marketing & Analytics at Arvest Bank, noted, “Embedding these insights into our daily applications empowers teams to make informed decisions quickly. It’s not just about data; it’s about having actionable insights when and where we need them.”

Despite this potential, adopting advanced analytics tools may require investment in training and change management. Without the right support, organizations may struggle to unlock the full value of these technologies.

Looking Ahead: The Future of AI-Powered Analytics

Tableau Einstein is available through Tableau+, with integrations planned across Salesforce products, including Sales, Service, Marketing, and Commerce Cloud. Salesforce is offering expert guidance, migration support, and a reimagined partner network to help businesses adopt the platform efficiently.

As companies continue their digital transformation, AI-powered analytics tools like Tableau Einstein will be pivotal in making data more accessible and actionable. Success will depend on how well organizations address data privacy, employee training, and system integration.

“The autonomous revolution in analytics is just beginning,” Jones observed. “The key will be how companies use these tools to gain insights and drive strategic actions that lead to measurable business outcomes.”

Salesforce’s launch of Tableau Einstein marks a significant step toward democratizing data analytics within organizations. By embedding AI-driven insights into daily workflows and making it clearer how metrics are calculated, the platform has the potential to transform operations. Realizing that potential, however, will require thoughtful implementation, strong employee engagement, and ongoing support. As AI and analytics evolve, companies that harness these technologies effectively will gain a competitive advantage in a data-driven world.