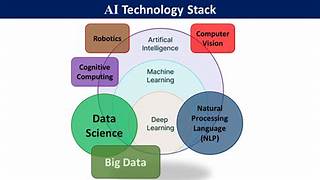

The AI Tech Stack

The AI tech stack integrates various tools, libraries, and solutions to give applications generative AI capabilities, such as image and text generation. Key components of the AI stack include programming languages, model providers, large language model (LLM) frameworks, vector databases, operational databases, monitoring and evaluation tools, and deployment solutions.

The infrastructure of the AI tech stack leverages both parametric knowledge from foundational models and non-parametric knowledge from information sources (PDFs, databases, search engines) to perform generative AI functions like text and image generation, text summarization, and LLM-powered chat interfaces.

This insight explores each component of the AI tech stack, aiming to provide a comprehensive understanding of the current state of generative AI applications, including the tools and technologies used to develop them. For those with a technical background, this Tectonic insight includes familiar tools and introduces new players within the AI ecosystem. For those without a technical background, it serves as a guide to understanding the AI stack, emphasizing its importance across business roles and departments.

Content Overview

The AI layer is a vital part of the modern application tech stack, integrating across various layers to enhance software applications. This layer brings intelligence to the stack through capabilities such as data visualization, generative AI (image and text generation), predictive analysis (behavior and trends), and process automation and optimization.

In the backend layer, which is responsible for orchestrating user requests, the AI layer introduces techniques like semantic routing, which uses LLMs to direct each user request to a handler based on the meaning and intent of the task. As the AI layer becomes more effective, it also reduces the roles and responsibilities of the application and data layers, potentially blurring the boundaries between them.
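As a rough illustration of semantic routing, the sketch below picks a handler for a request by comparing it against example phrases for each route. Everything in it is an assumption made for illustration: the route names, the example phrases, the `route` function, and the 0.1 threshold are hypothetical, and the bag-of-words `embed` function is only a toy stand-in for the embedding model or LLM that a production router would use.

```python
import math
import re
from collections import Counter

# Hypothetical routes: each backend handler is described by a few example requests.
ROUTES = {
    "billing": ["refund my payment", "update my credit card", "invoice is wrong"],
    "search": ["find documents about pricing", "look up the product manual"],
    "chat": ["tell me a joke", "summarize this text for me"],
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def route(request: str, threshold: float = 0.1) -> str:
    """Return the route whose examples are most similar to the request.

    Falls back to "fallback" when no route scores above the threshold.
    """
    query = embed(request)
    best_route, best_score = "fallback", threshold
    for name, examples in ROUTES.items():
        score = max(cosine(query, embed(example)) for example in examples)
        if score > best_score:
            best_route, best_score = name, score
    return best_route
```

A call such as `route("I need a refund for my last payment")` selects the hypothetical `billing` route, while text that matches no examples goes to the fallback handler. Replacing `embed` with a real embedding model would leave the routing logic itself unchanged.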
Nevertheless, the AI stack is becoming a cornerstone of software development.

Components of the AI Stack in the Generative AI Landscape

The emphasis on model providers over specific models highlights the importance of a provider’s ability to update models, offer support, and engage with the community. With a capable provider, switching models can be as simple as changing a name in the code.

Programming Languages

Programming languages are crucial in shaping the AI stack, influencing the selection of other components and ultimately determining how AI applications are developed. The choice of a programming language is especially important for modern AI applications, where security, latency, and maintainability are critical factors. Among the options for AI application development, Python, JavaScript, and TypeScript (a superset of JavaScript) are the primary choices.

Python holds a significant market share among data scientists and machine learning and AI engineers, largely due to its extensive library support, including TensorFlow, PyTorch, and Scikit-learn. Python’s readable syntax and flexibility, accommodating both simple scripts and full applications with an object-oriented approach, contribute to its popularity. According to the February 2024 update on the PYPL (PopularitY of Programming Language) Index, Python leads with a 28.11% share, reflecting a growth trend of +0.6% over the previous year. The PYPL Index tracks the popularity of programming languages based on the frequency of tutorial searches on Google, indicating broader usage and interest.

JavaScript, with an 8.57% share in the PYPL Index, has traditionally dominated web application development and is now increasingly used in the AI domain. This is facilitated by libraries and frameworks that integrate AI functionalities into web environments, enabling web developers to leverage AI infrastructure directly. Notable AI frameworks like LlamaIndex and LangChain offer implementations in both Python and JavaScript/TypeScript.
The GitHub 2023 State of Open Source report highlights JavaScript’s dominance among developers, with Python showing a 22.5% year-over-year increase in usage on the platform. This growth reflects Python’s versatility in applications ranging from web development to data-driven systems and machine learning.

The programming language component of the AI stack is more established and predictable than other components, with Python and JavaScript/TypeScript solidifying their positions among software engineers, web developers, data scientists, and AI engineers.

Model Providers

Model providers are a pivotal component of the AI stack, offering access to powerful large language models (LLMs) and other AI models. These providers, ranging from small organizations to large corporations, make available various types of models, including embedding models, fine-tuned models, and foundational models, for integration into generative AI applications.

The AI landscape features a wide array of models that support capabilities like predictive analytics, image generation, and text completion. These models can be broadly classified into closed-source and open-source categories.

Closed-source models keep their internal configurations, architectures, and algorithms private; these are not shared with model consumers. Key information about the training processes and data is withheld, limiting the extent to which users can modify the model’s behavior. Access to these models is typically provided through an API or a web interface.

Examples of closed-source models include:

Open-source models take a different approach, with varying levels of openness regarding their internal architecture, training data, weights, and parameters. These models foster collaboration and transparency in the AI community.

The levels of open-source models include:

Open-source models democratize access to advanced AI technology, lowering the barriers to entry for developers who want to experiment with niche use cases.
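One way to read the earlier point about switching models by changing a name in the code, combined with API-based access to models, is sketched below. It is a minimal illustration under stated assumptions, not any provider's actual API: `ModelConfig`, `complete`, the provider names, and the model names are all hypothetical, and the provider functions are offline stubs standing in for real API calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict


# Hypothetical provider clients. Real ones would call a provider's API;
# these stubs only echo their input so the example runs offline.
def _provider_a(model: str, prompt: str) -> str:
    return f"[provider-a/{model}] {prompt}"


def _provider_b(model: str, prompt: str) -> str:
    return f"[provider-b/{model}] {prompt}"


# Registry mapping a provider name to the function that talks to it.
PROVIDERS: Dict[str, Callable[[str, str], str]] = {
    "provider-a": _provider_a,
    "provider-b": _provider_b,
}


@dataclass(frozen=True)
class ModelConfig:
    """The only place where a provider and model are named."""
    provider: str
    model: str


def complete(config: ModelConfig, prompt: str) -> str:
    """Send the prompt to whichever provider and model the config names."""
    try:
        client = PROVIDERS[config.provider]
    except KeyError:
        raise ValueError(f"unknown provider: {config.provider}") from None
    return client(config.model, prompt)


# Application code depends only on `complete`; switching models means
# editing these strings, not the call sites.
config = ModelConfig(provider="provider-a", model="small-model")
reply = complete(config, "Summarize the AI stack in one sentence.")
```

Keeping the provider and model names in one configuration object is a design choice that keeps call sites unchanged when a model is swapped. It only works smoothly when the providers expose comparable interfaces, which is part of why the choice of provider matters.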
Examples of open-source models include:

Open-Source vs. Closed-Source Large Language Models (LLMs)

AI engineers and machine learning practitioners often face the critical decision of whether to use open- or closed-source LLMs in their AI stacks. This choice significantly impacts the development process, scalability, ethical considerations, and the application’s utility and commercial flexibility.

Key Considerations for Selecting LLMs and Their Providers

Resource Availability

The decision between open- and closed-source models often hinges on the availability of compute resources and team expertise. Closed-source model providers simplify the development, training, and management of LLMs, though potentially at the cost of having consumer data used for training or of relinquishing control over access to private data. Using closed-source models allows teams to focus on other aspects of the stack, such as user interface design and data integrity. In contrast, open-source models offer more control and privacy but require significant resources to fine-tune, maintain, and deploy.

Project Requirements

The scale and technical requirements of a project are crucial in deciding whether to use open-