Why Does ChatGPT Use the Word “Delve” So Much? Mystery Solved.

The mystery behind ChatGPT’s frequent use of the word “delve” (a word it uses far more often than most human writers do) has finally been unraveled, and the answer is quite unexpected. It also helps explain why ChatGPT’s word choices feel so repetitive.

While “delve” and words like “tapestry” aren’t common in everyday conversation, ChatGPT seems to favor them. You may have noticed this tendency in its outputs.

The sudden rise in the use of “delve” in medical papers from March 2024 coincides with the first full year of ChatGPT’s widespread use.
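If you want to check a trend like this yourself, the basic measurement is a normalized word count per year. Here is a minimal Python sketch, assuming you already have abstracts paired with their publication year. The function name, the list of “delve” forms, and the per-10,000-words scale are illustrative choices, not taken from any particular study.

```python
import re
from collections import Counter

# Inflected forms of the word to count (an illustrative choice)
DELVE_FORMS = {"delve", "delves", "delved", "delving"}


def delve_rate_per_year(abstracts):
    """Return "delve" occurrences per 10,000 words for each year.

    `abstracts` is an iterable of (year, text) pairs.
    """
    hits, totals = Counter(), Counter()
    for year, text in abstracts:
        # Crude tokenizer: lowercase runs of letters
        tokens = re.findall(r"[a-z]+", text.lower())
        totals[year] += len(tokens)
        hits[year] += sum(1 for t in tokens if t in DELVE_FORMS)
    # Normalize by total words so years with more papers are comparable
    return {year: 10_000 * hits[year] / totals[year] for year in sorted(totals) if totals[year]}


# Hypothetical toy abstracts, not real data
sample = [
    (2022, "We examine the effect of sleep on recovery."),
    (2023, "We delve into the effect of sleep on recovery."),
    (2023, "Results are reported below."),
]
rates = delve_rate_per_year(sample)
print(rates)
```

Normalizing by total words matters because the number of papers published also changes from year to year; a raw count would mix growth in output with a real change in vocabulary.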

“Delve,” along with phrases like “as an AI language model…,” has become a hallmark of ChatGPT’s language, almost a giveaway that a text is AI-generated.

But why does ChatGPT overuse “delve”? If it’s trained on human data, how did it develop this preference? Is it emergent behavior? And why “delve” specifically?

A Guardian article, “How Cheap, Outsourced Labour in Africa is Shaping AI English,” provides a clue. The key lies in how ChatGPT was built.

Why “Delve” So Much?

The overuse of “delve” suggests ChatGPT’s language might have been influenced after its initial training on internet data.

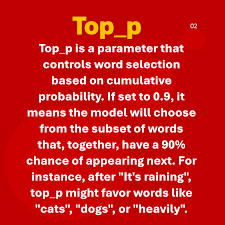

After the model is trained on a massive corpus of data, an additional alignment step, commonly done with reinforcement learning from human feedback (RLHF), is used to shape its behavior. Human annotators evaluate the AI’s outputs, and their feedback is used to fine-tune the model.

Here’s a summary of the process:

- A base Large Language Model (LLM) is trained on a large data corpus.

- A Reward Model (RM) learns what humans consider “good” and “aligned.”

- The LLM generates multiple outputs for the same prompt; humans rank them or select the one they prefer.

- The RM learns from these human choices, converting preferences into rewards or scores.

- The LLM adjusts its behavior to obtain more rewards based on these scores.
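To make the steps above concrete, here is a deliberately tiny Python sketch. It is a toy, not how OpenAI actually implements RLHF: the “reward model” is just one learned weight per word, fitted to hypothetical (chosen, rejected) pairs with the pairwise Bradley-Terry loss. Instead of the PPO-style reinforcement learning used in practice, the last step applies the closed-form reweighting p_new(y) ∝ p_base(y) · exp(r(y) / β), which is what a reward objective with a KL penalty (keeping the model close to its base version) works out to. All function names and data are made up for illustration.

```python
import math
from collections import defaultdict


def words(text):
    # Toy tokenizer: the distinct lowercase words of an output
    return set(text.lower().split())


def reward(weights, text):
    # Toy linear reward model: sum of the learned weight of each distinct word
    return sum(weights[w] for w in words(text))


def train_reward_model(preferences, epochs=200, lr=0.1):
    """Fit per-word weights from (chosen, rejected) pairs (Bradley-Terry model):
    P(chosen preferred) = sigmoid(reward(chosen) - reward(rejected))."""
    weights = defaultdict(float)
    for _ in range(epochs):
        for chosen, rejected in preferences:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Gradient of log(sigmoid(margin)) w.r.t. margin is 1 - sigmoid(margin)
            step = lr * (1.0 - 1.0 / (1.0 + math.exp(-margin)))
            # Words shared by both outputs cancel out, so only update the differences
            for w in words(chosen) - words(rejected):
                weights[w] += step
            for w in words(rejected) - words(chosen):
                weights[w] -= step
    return weights


def tilt_policy(base_probs, weights, beta=1.0):
    """Reweight candidate outputs: p_new(y) proportional to p_base(y) * exp(reward(y) / beta)."""
    scores = {y: p * math.exp(reward(weights, y) / beta) for y, p in base_probs.items()}
    total = sum(scores.values())
    return {y: s / total for y, s in scores.items()}


# Hypothetical annotator choices that happen to favor "delve"
prefs = [
    ("let us delve into the results", "let us look at the results"),
    ("we delve into the data", "we examine the data"),
]
weights = train_reward_model(prefs)
base = {"let us delve into the results": 0.5, "let us look at the results": 0.5}
tuned = tilt_policy(base, weights)
print(round(tuned["let us delve into the results"], 3))  # prints a value above 0.5
```

Nothing in the base model’s probabilities favored “delve” here; the shift comes entirely from which outputs the annotators preferred. The β parameter controls how far the tuned model may drift from the base one: a smaller β amplifies the annotators’ preferences more.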

This iterative process uses human feedback to make the AI’s responses more useful and keep them aligned. However, this feedback is often provided by a workforce in the Global South, where English-speaking annotators are more affordable.

In Nigeria, “delve” is more common in business English than it is in the US or UK. Annotators from the region provided examples and judgments in the English they were familiar with, nudging the AI toward a style closer to Nigerian English. This is an example of sampling bias: the evaluators’ language differs from that of the target users, which skews the model’s writing style.

This bias likely stems from the RLHF step rather than the initial training. ChatGPT’s writing style, with or without “delve,” is already somewhat robotic and easy to detect. Understanding these potential pitfalls helps us avoid similar issues in future AI development.

Making ChatGPT More Human-Like

To make ChatGPT sound more human and avoid overused words like “delve,” consider these prompt engineering approaches:

- Directly instruct ChatGPT to avoid certain words.

- Instruct ChatGPT to act as a specific role.

- Use few-shot learning by providing text samples you want it to emulate.
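Here is a minimal sketch that combines all three techniques into one prompt, using the role/content message format that chat APIs such as OpenAI’s accept. The function name, the wording of the instructions, and the sample text are hypothetical; the sketch only builds the messages and leaves the actual API call to whichever client you use.

```python
def build_messages(task, avoid_words, role, style_samples):
    """Build a chat prompt that bans words, sets a role, and adds few-shot style samples."""
    # Techniques 1 and 2: ban specific words and assign a role in the system message
    system = (
        f"You are {role}. Write plainly, the way a person would write to a colleague. "
        f"Never use these words: {', '.join(avoid_words)}."
    )
    # Technique 3 (few-shot): show samples of the style to emulate
    samples = "\n\n".join(
        f"Sample {i}:\n{text}" for i, text in enumerate(style_samples, start=1)
    )
    user = f"Match the tone of these samples.\n\n{samples}\n\nTask: {task}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Hypothetical usage
messages = build_messages(
    task="Summarize last quarter's sales results in three sentences.",
    avoid_words=["delve", "tapestry"],
    role="a busy sales manager",
    style_samples=["We hit most of our targets. The north region lagged, mostly because of staffing."],
)
```

Models may not follow a word ban every time, so it is still worth checking the output afterwards.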

These methods can be time-consuming. Ideally, a quick, reliable tool, like a Chrome extension, would streamline this process.
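The checking part, at least, is easy to script. The sketch below is a hypothetical Python helper (the watch list and function name are my own, not from an existing extension) that counts whole-word, case-insensitive hits for overused words and their inflected forms, so you can decide whether to regenerate or edit a response.

```python
import re

# Hypothetical watch list: base word -> inflected forms to look for
OVERUSED = {
    "delve": ["delve", "delves", "delved", "delving"],
    "tapestry": ["tapestry", "tapestries"],
}


def find_overused(text, watch_list=OVERUSED):
    """Count whole-word, case-insensitive hits for each watched word."""
    counts = {}
    for base, forms in watch_list.items():
        # \b anchors ensure we match whole words only
        pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, forms)) + r")\b", re.IGNORECASE)
        n = len(pattern.findall(text))
        if n:
            counts[base] = n
    return counts


print(find_overused("Let's delve into it. Delving deeper reveals a rich tapestry."))
# {'delve': 2, 'tapestry': 1}
```

Listing explicit word forms, rather than matching anything that starts with “delv”, keeps false positives down; extend the list with whichever words bother you most.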

If you’ve found a solution or a reliable tool for this issue, share it in the comments below. Many users run into this problem.