When users first began interacting with ChatGPT, they noticed an intriguing behavior: the model would often reverse its stance when told it was wrong. This raised concerns about the reliability of its outputs. How can users trust a system that appears to contradict itself?

Recent research suggests that large language models (LLMs) not only generate inaccurate information (often referred to as “hallucinations”) but also carry internal signals that indicate when a statement is likely false. Despite these signals, the models present their responses with the same confidence.

Unveiling LLM Awareness of Hallucinations

Researchers discovered this phenomenon by analyzing the internal mechanisms of LLMs. Whenever an LLM generates a response, it transforms the input query into a numerical representation and performs a series of computations before producing the output. The numerical representations at the intermediate stages are called “activations.” These activations contain significantly more information than the final output reflects. By examining them, researchers can often predict whether a response is inaccurate, which is the sense in which the LLM “knows” it is wrong.
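As a rough illustration of what “activations” means, here is a minimal sketch of a tiny feed-forward network in plain Python. The weights, the three-word vocabulary, and the layer sizes are all made up for the example. A real LLM is vastly larger and uses a different architecture, but the idea is the same: every layer produces a vector, while the visible output is a single word.

```python
import math

def dense(x, weights, bias):
    """One fully connected layer: weights holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def forward(x, layers):
    """Run x through every layer and keep each intermediate vector."""
    activations = []
    for weights, bias in layers:
        x = [math.tanh(z) for z in dense(x, weights, bias)]
        activations.append(x)
    return activations

# Hypothetical network: 3 inputs -> 4 hidden units -> 1 score per vocabulary word.
VOCAB = ["spinach", "today", "Pluto"]
LAYERS = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.6, 0.1, 0.9], [0.2, 0.2, 0.2]],
     [0.0, 0.1, -0.1, 0.0]),
    ([[0.4, -0.3, 0.2, 0.1], [-0.1, 0.6, 0.3, -0.2], [0.7, 0.1, -0.4, 0.5]],
     [0.0, 0.0, 0.0]),
]

activations = forward([1.0, 0.5, -1.0], LAYERS)
scores = activations[-1]
predicted_word = VOCAB[scores.index(max(scores))]

print("visible output:", predicted_word)
print("hidden activations:", activations[0])
```

The reader of the output sees only `predicted_word`; the hidden vector in `activations[0]` is discarded unless someone deliberately inspects it.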

A technique called SAPLMA (Statement Accuracy Prediction based on Language Model Activations), introduced by Azaria and Mitchell (2023), has been developed to explore this capability. SAPLMA examines the internal activations of an LLM to predict whether a statement is true or false.

Why Do Hallucinations Occur?

LLMs function as next-word prediction models. Each word is selected based on its likelihood given the preceding words. For example, starting with “I ate,” the model might predict the next words as follows:

- “I ate” → “spinach”

- “I ate spinach” → “today.”

The issue arises when earlier predictions constrain subsequent outputs. Once the model commits to a word, it cannot go back to revise its earlier choice.
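The commit-as-you-go behavior can be sketched with a toy lookup table. The probabilities below are invented for illustration, and the loop always takes the single most likely word (greedy decoding), which is an assumption: real systems often sample from the distribution instead.

```python
# Hypothetical next-word probabilities, keyed by the text generated so far.
NEXT_WORD_PROBS = {
    "I ate": {"spinach": 0.5, "pizza": 0.3, "quickly": 0.2},
    "I ate spinach": {"today.": 0.6, "yesterday.": 0.3, "salad.": 0.1},
}

def greedy_decode(prompt, table):
    """Append the most likely next word until no continuation is known."""
    text = prompt
    while text in table:
        candidates = table[text]
        best_word = max(candidates, key=candidates.get)
        text = f"{text} {best_word}"  # committed: earlier words are never revisited
    return text

result = greedy_decode("I ate", NEXT_WORD_PROBS)
print(result)  # I ate spinach today.
```

Nothing in the loop ever removes or replaces a word already appended to `text`, which is the property the rest of this section relies on.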

For instance:

- The model might generate: “Pluto is the smallest dwarf planet.”

The model associates “smallest” with Pluto, and once that word is chosen, “dwarf planet” is a natural continuation. The resulting statement is nevertheless false: Pluto is one of the largest known dwarf planets (the largest by diameter).

In another case:

- The model might predict: “Tiztoutine is a town in Africa located in the Republic of Niger.”

Although it might internally “know” the correct country (Morocco), having already produced “Republic of” it cannot continue with “Morocco,” because Morocco is a kingdom, not a republic.

This mechanism reveals how the constraints of next-word prediction can lead to hallucinations, even when the model “knows” it is generating an incorrect response.
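To make the constraint concrete, here is a toy comparison between choosing one word at a time and scoring whole continuations. All probabilities are invented for the example and say nothing about how any real model scores these words. The point is only that a locally likely first choice can lock out a continuation that is more likely overall.

```python
# Hypothetical probabilities; a real model scores a large vocabulary at every step.
STEP_1 = {"Republic of": 0.55, "Kingdom of": 0.45}
STEP_2 = {
    "Republic of": {"Niger": 0.5, "Mali": 0.3, "Chad": 0.2},
    "Kingdom of": {"Morocco": 0.9, "Jordan": 0.1},
}

def greedy_path():
    """Pick the likeliest word at each step, never revisiting step 1."""
    first = max(STEP_1, key=STEP_1.get)
    second = max(STEP_2[first], key=STEP_2[first].get)
    return first, second, STEP_1[first] * STEP_2[first][second]

def best_full_path():
    """Score every two-step continuation and keep the likeliest whole sequence."""
    paths = [
        (first, second, p1 * p2)
        for first, p1 in STEP_1.items()
        for second, p2 in STEP_2[first].items()
    ]
    return max(paths, key=lambda path: path[2])

prompt = "Tiztoutine is in the"
for label, (first, second, prob) in [("greedy", greedy_path()),
                                     ("best overall", best_full_path())]:
    print(f"{label}: {prompt} {first} {second} (p={prob:.3f})")
```

In this table “Morocco” is simply unreachable after “Republic of”, so the greedy path ends in “Niger” even though the “Kingdom of Morocco” sequence has the higher joint probability.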

Detecting Inaccuracies with SAPLMA

To investigate whether an LLM recognizes its own inaccuracies, researchers developed the SAPLMA method. Here’s how it works:

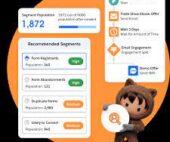

- Dataset Creation: A dataset of true and false statements is compiled.

- Activation Analysis: Statements are passed through the LLM, and hidden layer activations are extracted.

- Training a Classifier: The extracted activations are used to train a neural network classifier to distinguish between truthful and inaccurate statements.

- Layer Optimization: Researchers repeat this process across different layers of the LLM to identify the layer that achieves the highest classification accuracy.
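The four steps above can be sketched end to end in plain Python. This is a simplified stand-in, not the researchers' implementation: the activations are synthetic (generated by the hypothetical `fake_activations` helper rather than read from a real LLM), the layer names and signal strengths are invented, and a logistic-regression probe is swapped in for the neural network classifier to keep the example short.

```python
import math
import random

random.seed(0)
DIM = 8
# Hypothetical signal strength per layer: how clearly truthfulness shows up there.
LAYER_SIGNAL = {"layer_4": 0.2, "layer_16": 1.5, "layer_28": 0.5}

def fake_activations(is_true, signal):
    """Stand-in for running a statement through an LLM and reading one hidden layer."""
    direction = 1.0 if is_true else -1.0
    return [direction * signal + random.gauss(0, 1) for _ in range(DIM)]

def predict(weights, bias, x):
    z = sum(w * v for w, v in zip(weights, x)) + bias
    z = max(-30.0, min(30.0, z))  # clamp to keep exp() well-behaved
    return 1.0 / (1.0 + math.exp(-z))

def train_probe(samples, epochs=20, lr=0.1):
    """Fit a logistic-regression probe with plain stochastic gradient descent."""
    weights, bias = [0.0] * DIM, 0.0
    for _ in range(epochs):
        for x, label in samples:
            error = predict(weights, bias, x) - label
            weights = [w - lr * error * v for w, v in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def accuracy(probe, samples):
    weights, bias = probe
    hits = sum((predict(weights, bias, x) >= 0.5) == bool(label) for x, label in samples)
    return hits / len(samples)

# Step 1: a labelled dataset of true (1) and false (0) statements.
labels = [i % 2 for i in range(400)]
results = {}
for layer, signal in LAYER_SIGNAL.items():
    # Step 2: extract activations at this layer for every statement.
    data = [(fake_activations(label, signal), label) for label in labels]
    train, test = data[:300], data[300:]
    # Step 3: train a classifier on the activations, then score held-out statements.
    results[layer] = accuracy(train_probe(train), test)

# Step 4: keep the layer whose activations are easiest to classify.
best_layer = max(results, key=results.get)
print(results)
print("best layer:", best_layer)
```

Accuracy is measured on statements the probe never saw during training; otherwise the layer comparison would reward memorization rather than a usable truthfulness signal.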

The classifier itself is a simple neural network with three dense layers, culminating in a binary output that predicts the truthfulness of the statement.
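A dependency-free sketch of such a classifier's forward pass might look like the following. It takes one reading of the description (three dense ReLU layers followed by a single sigmoid output unit); the hidden sizes, the random initialisation, and the `TruthClassifier` name are assumptions for illustration. The weights are untrained, so the printed probability is meaningless until the network is fitted to labelled activations.

```python
import math
import random

def make_dense(n_in, n_out, rng):
    """Randomly initialised weights and zero biases for one dense layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(x, layer):
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TruthClassifier:
    """Three dense ReLU layers followed by a single sigmoid output unit."""

    def __init__(self, input_dim, hidden_sizes=(16, 8, 4), seed=0):
        rng = random.Random(seed)
        sizes = [input_dim, *hidden_sizes]
        self.hidden = [make_dense(a, b, rng) for a, b in zip(sizes, sizes[1:])]
        self.output = make_dense(sizes[-1], 1, rng)

    def probability_true(self, activation_vector):
        x = activation_vector
        for layer in self.hidden:
            x = relu(dense(x, layer))
        return sigmoid(dense(x, self.output)[0])

    def predict(self, activation_vector, threshold=0.5):
        """Binary decision: True means the statement is classified as truthful."""
        return self.probability_true(activation_vector) >= threshold

clf = TruthClassifier(input_dim=32)
example_activation = [0.1] * 32  # placeholder for one hidden-layer vector
p = clf.probability_true(example_activation)
print(f"p(true)={p:.3f}, predicted truthful: {clf.predict(example_activation)}")
```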

Results and Insights

The SAPLMA method achieved an accuracy of 60–80%, depending on the topic. While this is a promising result, it is not perfect and has notable limitations. For example:

- The classifier provides a binary prediction (true or false) without indicating the degree of inaccuracy.

- Simply detecting inaccuracies doesn’t guarantee that a model can revise its response effectively. The model could loop through revisions indefinitely, and there is no assurance that a revised answer is more accurate than the original.

- The accuracy range (60–80%) may not be sufficient for high-stakes applications where precision is critical.
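A quick back-of-the-envelope calculation shows why this range matters. Assuming, purely for illustration, that the classifier judges each statement independently, the chance that it gets every statement in a multi-statement answer right shrinks quickly:

```python
def chance_all_correct(per_statement_accuracy, num_statements):
    """Probability that every one of num_statements independent judgments is right."""
    return per_statement_accuracy ** num_statements

for acc in (0.6, 0.8):
    p = chance_all_correct(acc, 10)
    print(f"accuracy={acc:.0%}: all 10 statements judged correctly with p={p:.3f}")
# accuracy=60%: all 10 statements judged correctly with p=0.006
# accuracy=80%: all 10 statements judged correctly with p=0.107
```

The independence assumption is a simplification, but it conveys the scale of the gap between per-statement accuracy and whole-answer reliability.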

However, if LLMs can learn to detect inaccuracies during the generation process, they could potentially refine their outputs in real time, reducing hallucinations and improving reliability.

The Future of Error Mitigation in LLMs

The SAPLMA method represents a step forward in understanding and mitigating LLM errors. Reliable detection of false statements could pave the way for models that self-correct and produce more dependable outputs. While the current limitations are significant, ongoing research into these methods could lead to substantial improvements in LLM performance.

By combining techniques like SAPLMA with advancements in LLM architecture, researchers aim to build models that are not only aware of their errors but capable of addressing them dynamically, enhancing both the accuracy and trustworthiness of AI systems.
