Advances in AI Models

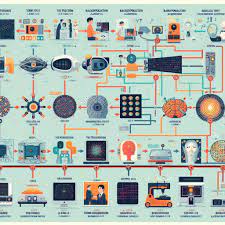

Let’s take a moment to appreciate the transformative impact large language models (LLMs) have had on the world. Before the rise of LLMs, researchers spent years training AI to generate images, but these models had significant limitations.

One promising neural network architecture was the generative adversarial network (GAN). In a GAN, two networks play a cat-and-mouse game: one tries to create realistic images while the other tries to distinguish between generated and real images. Over time, the image-creating network improves at tricking the other.
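
To make the cat-and-mouse game concrete, here is a minimal sketch of the two competing objectives. It assumes the common cross-entropy formulation of GAN training, and the function names and probability values are illustrative choices, not taken from any particular system. The judging network (usually called the discriminator) outputs a probability that an image is real, and the image-creating network (the generator) is rewarded when its images get a high score.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Cross-entropy loss for the judging network.

    d_real: its estimated probability that a real image is real.
    d_fake: its estimated probability that a generated image is real.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """The image-creating network wants its images scored as real."""
    return -math.log(d_fake)

# Early in training (assumed values): the judge easily spots fakes.
early = (discriminator_loss(0.9, 0.1), generator_loss(0.1))
# Later (assumed values): the generator has learned to fool the judge.
late = (discriminator_loss(0.9, 0.8), generator_loss(0.8))

print(f"early: judge loss={early[0]:.3f}, creator loss={early[1]:.3f}")
print(f"late:  judge loss={late[0]:.3f}, creator loss={late[1]:.3f}")
```

As the generated images score higher, the creator's loss falls while the judge's loss rises. That opposition is what drives both networks to improve.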

While GANs can generate convincing images, they typically excel at creating images of a single subject type. For example, a GAN that creates excellent images of cats might struggle with images of mice. GANs can also experience “mode collapse,” where the image-creating network generates the same image repeatedly because that one image always tricks the discriminator (the network that judges whether an image is real). An AI that produces the same image over and over isn’t very useful.

What’s truly useful is an AI model capable of generating diverse images, whether it’s a cat, a mouse, or a cat in a mouse costume. Such models exist and are known as diffusion models, named for the underlying math that resembles diffusion processes like a drop of dye spreading in water. These models are trained to connect images and text, leveraging vast amounts of captioned images on the internet. With enough samples, a model can extract the essence of “cat,” “mouse,” and “costume,” embedding these elements into generated images using diffusion principles. The results are often stunning.
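
The dye-in-water analogy can be sketched in a few lines. The example below assumes one widely used formulation, in which an image is gradually blended with Gaussian noise according to a linear schedule. The step count, schedule values, and function names are illustrative assumptions and are not specific to any of the models discussed here. It shows only the noising direction. Generation relies on a trained network that learns to run the process in reverse, which is not shown.

```python
import math
import random

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal kept after each step (linear schedule)."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, alpha_bar, rng):
    """Blend clean pixel values with Gaussian noise for one step."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * p + noise * rng.gauss(0.0, 1.0) for p in pixels]

alpha_bars = make_alpha_bars()
rng = random.Random(0)
image = [1.0, -1.0, 0.5, -0.5]  # a toy 4-pixel "image" scaled to [-1, 1]

# Early steps barely change the image; late steps are almost pure noise.
for step in (0, 499, 999):
    noisy = add_noise(image, alpha_bars[step], rng)
    print(step, [round(v, 2) for v in noisy])
```

Like the drop of dye, the original image is still recognizable after the first step and is thoroughly mixed into noise by the last one.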

Some of the most well-known diffusion models include DALL-E, Imagen, Stable Diffusion, and Midjourney. These models differ in their training data, in how they embed the details of language, and in how users interact with them, which leads to varied results. As research and development progress, these tools continue to evolve rapidly.

Uses of Generative AI for Imagery

Generative AI can do far more than create cute cat cartoons. By fine-tuning generative AI models and combining them with other algorithms, artists and innovators can create, manipulate, and animate imagery in various ways.

Here are some examples:

Text-to-Image

Generative AI allows for incredible artistic variety using text-to-image techniques. For instance, you can generate a hand-drawn cat or opt for a hyperrealistic or mosaic style. If you can imagine it and describe it in a prompt, diffusion models can often interpret your intention successfully.

Text-to-3D Model

Creating 3D models traditionally requires technical skill. Generative AI tools like DreamFusion can generate 3D models from a text prompt, along with detailed descriptions of coloring, lighting, and material properties. This helps meet the growing demand for 3D models in commerce, manufacturing, and entertainment.

Image-to-Image

Images can be powerful prompts for generative AI models. Here are some use cases:

- Style transfer: Start with a simple sketch and let AI fill in the details in a specific artistic style.

- Paint out details: Remove unwanted elements from a photo and have AI fill in the gaps seamlessly.

- Paint in details: Add elements to a scene, like putting a party hat on a panther, with realistic integration.

- Extend picture borders: Continue the scene beyond the original borders using context from the existing image.
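
Painting out, painting in, and extending borders all share one idea: a mask marks which pixels the AI may change, and everything else is kept from the original. The sketch below shows only that bookkeeping on a tiny grid of numbers. The helper names `composite` and `extend_canvas` are hypothetical, and `model_output` is a hand-written placeholder standing in for whatever a real model would propose.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0; take generated pixels where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]

def extend_canvas(image, border, fill=0):
    """Pad an image on all sides; the returned mask marks the new border as 1."""
    height, width = len(image), len(image[0])
    canvas, mask = [], []
    for y in range(height + 2 * border):
        inside_y = border <= y < border + height
        row, mask_row = [], []
        for x in range(width + 2 * border):
            inside = inside_y and border <= x < border + width
            row.append(image[y - border][x - border] if inside else fill)
            mask_row.append(0 if inside else 1)
        canvas.append(row)
        mask.append(mask_row)
    return canvas, mask

photo = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
model_output = [[0, 0, 0],
                [0, 99, 0],
                [0, 0, 0]]  # placeholder for a model's proposed pixels
center_mask = [[0, 0, 0],
               [0, 1, 0],
               [0, 0, 0]]  # only the center pixel may change

edited = composite(photo, model_output, center_mask)
canvas, border_mask = extend_canvas(photo, border=1)
print(edited)
print(border_mask)
```

In the first case only the masked center pixel is replaced. In the second, the mask covers the new border, so a model would be asked to fill in just the area beyond the original edges.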

Animation

Creating a series of consistent images for animation is challenging due to inherent randomness in generated images. However, researchers have developed methods to reduce variations, enabling smoother animations.
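
One simple way to see where the randomness comes from, and one basic trick for taming it, is to look at the random starting noise for each frame. The sketch below is an illustration only and is not necessarily one of the research methods mentioned above. The function name `initial_noise` and the seed values are assumptions made for the example.

```python
import random

def initial_noise(seed, num_values=4):
    """Draw the starting noise for one frame from a seeded generator."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(num_values)]

# Independent seeds: each frame starts from unrelated noise.
independent = [initial_noise(seed=frame) for frame in range(3)]
# Shared seed: every frame starts from identical noise.
shared = [initial_noise(seed=42) for _ in range(3)]

print("independent frames match:", independent[0] == independent[1])
print("shared-seed frames match:", shared[0] == shared[1])
```

Starting every frame from the same noise removes one source of frame-to-frame variation, though it does not by itself guarantee a smooth animation.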

All the use cases for still images can be adapted for animation. For example, style transfer can turn a video of a skateboarder into an anime-style animation. AI models trained on speech patterns can animate the lips of a generated 3D character.

Embracing Generative AI

Generative AI offers enormous possibilities for creating stunning imagery. As you explore these capabilities, it’s essential to use them responsibly. In the next unit, you’ll learn how to leverage generative AI’s potential in an ethical and effective manner.