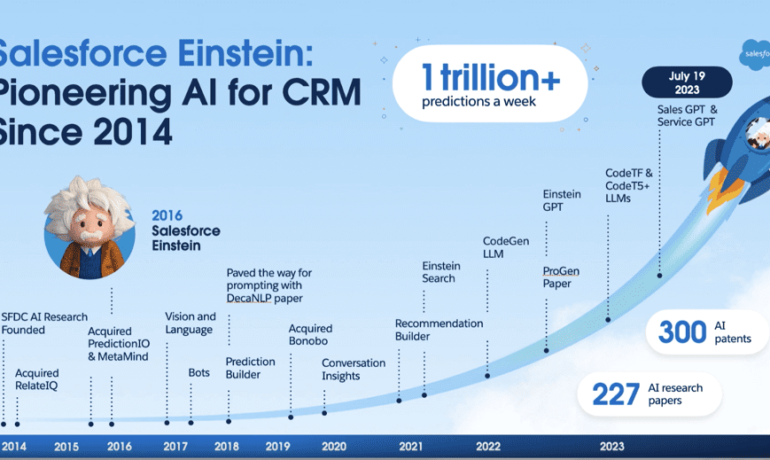

Salesforce AI Journey

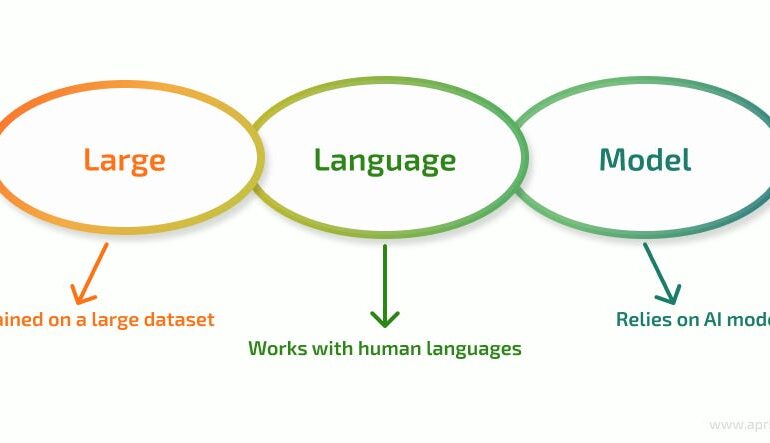

“We’re on an Incredible Journey”: Why Salesforce’s AI Push is Just Beginning

This article originally appeared in TechRadar by Mike Moore.

When it comes to leveraging AI to enhance global workforces, some companies are leading the charge, particularly when their technology is at the forefront. Salesforce, known for its robust AI tools, is one such company pushing the boundaries.

At its recent World Tour London event, Salesforce emphasized its commitment to AI, showcasing how its Einstein tools are already benefiting customers worldwide. TechRadar Pro spoke with Paul O’Sullivan, SVP, Solution Engineering & UKI, CTO, Salesforce, about the company’s vision for enhancing efficiency and productivity across all markets, particularly in the UK.

A Wave of Innovation

“We’re on an incredible journey,” O’Sullivan stated, referencing Salesforce’s $4 billion investment in the UK and Ireland in 2023. “We’re well-positioned in the UK to maximize AI’s potential and help our customers achieve true value.”

This ambition is epitomized by Salesforce’s new AI center in London. The 40,000 square foot facility is set to be a hub for AI collaboration and development, addressing the growing demand for AI technology.

O’Sullivan hinted that this is just the beginning. “We’re an innovation-led company, always looking ahead,” he said, highlighting the UK’s history of driving innovation as a positive indicator for AI’s future in the capital.

Growing Demand and Education

As demand for AI tools and services increases among businesses of all sizes, O’Sullivan acknowledged the rapid pace of change in the AI landscape. “It starts with education, at all levels,” he noted, recognizing the varying degrees of AI knowledge among business leaders.

O’Sullivan compared the current AI momentum to past technological revolutions like cloud computing, websites, and ecommerce. Companies had to adapt quickly to avoid falling behind, and he noted that the window for catching up with AI might be even smaller.

He predicted a “steady wave of innovation” in AI before it becomes ubiquitous in the business world, with various models and platforms vying for dominance. “It feels like everyone is in a race for AI,” he added, “and there’s a collective agreement that AI will enhance productivity and efficiency, benefiting both the bottom and top lines of big enterprises.”

Human Jobs and AI

Looking forward, O’Sullivan dismissed concerns that AI would replace human jobs. He suggested that AI would instead create new opportunities for human workers. “I think human nature is inherently curious,” he said. “We will continue to explore new ways of doing things and offer different levels of connection and service.”

Drawing parallels to the industrial revolution, O’Sullivan pointed out that machines didn’t eliminate jobs; they increased productivity and efficiency. He believes AI will have a similar impact. “We’re going to see a new level of productivity and efficiency with AI, just as we did with the industrial revolution,” he concluded.

Salesforce’s AI journey is only just beginning, promising exciting advancements and opportunities for businesses and workers alike.