While electronic health records (EHRs) have improved data exchange for care coordination, they have also increased the clinical documentation burden on healthcare providers. Research from 2023 suggests that clinicians may now spend more time on EHRs than on direct patient care. On average, providers spend over 36 minutes on EHR tasks for every 30-minute patient visit.

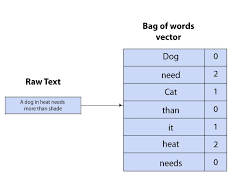

Generative AI, however, could help change this. The Government Accountability Office defines generative AI (GenAI) as a technology that can create content (such as text, images, audio, or video) based on user prompts. With the rise of chatbot interfaces like ChatGPT, health IT vendors and healthcare systems are piloting GenAI tools to streamline clinical documentation.

While the technology shows promise in reducing the documentation burden and mitigating clinician burnout, several challenges still hinder widespread adoption.

Ambient Clinical Intelligence

Ambient clinical intelligence uses smartphone microphones and GenAI to transcribe patient encounters in real time and produce draft clinical documentation that providers can review within seconds. A 2024 study examined the use of ambient AI scribes by 10,000 physicians and staff at The Permanente Medical Group. The results were promising: providers reported better conversations with patients and less after-hours EHR documentation.

However, transcription accuracy is critical for patient safety. A 2023 study found that ambient AI tools struggle with non-lexical conversational sounds (NLCSes), such as “mm-hm” and “uh-uh,” which patients and providers use to convey information. For instance, a patient might say “Mm-hm” to confirm they have no allergies to antibiotics. The study found that while the AI tools had an overall word error rate of 12%, the error rate for NLCSes conveying clinically relevant information was as high as 98.7%. Errors like these could put patients at risk, which underscores the importance of provider review.
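The gap between a modest overall error rate and a near-total failure on NLCSes is easier to see with a concrete calculation. Word error rate (WER) is conventionally the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the machine transcript, divided by the number of reference words. The Python sketch below uses a made-up exchange and a hypothetical `NLCS_TOKENS` set; it is not the study's data or evaluation method, and `nlcs_miss_rate` is a simple count-based proxy rather than an alignment-based measure.

```python
from collections import Counter

# Hypothetical set of non-lexical conversational sounds (NLCSes), for illustration only.
NLCS_TOKENS = {"mm-hm", "uh-uh", "uh-huh", "mm-mm"}


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum word edits needed to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,  # deletion
                dist[i][j - 1] + 1,  # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


def nlcs_miss_rate(reference: str, hypothesis: str) -> float:
    """Rough proxy: share of reference NLCS tokens with no counterpart in the hypothesis."""
    ref_nlcs = Counter(w for w in reference.lower().split() if w in NLCS_TOKENS)
    hyp_nlcs = Counter(w for w in hypothesis.lower().split() if w in NLCS_TOKENS)
    total = sum(ref_nlcs.values())
    if total == 0:
        return 0.0
    missed = sum(max(0, count - hyp_nlcs[token]) for token, count in ref_nlcs.items())
    return missed / total


# Made-up exchange: the patient answers two questions with "Mm-hm" and "Uh-uh".
reference = ("you have no known allergies to antibiotics Mm-hm "
             "and you are not taking any other medications Uh-uh")
# The machine transcript keeps every word except the two NLCSes.
hypothesis = ("you have no known allergies to antibiotics "
              "and you are not taking any other medications")

print(f"Overall WER: {word_error_rate(reference, hypothesis):.1%}")  # Overall WER: 11.8%
print(f"NLCS miss rate: {nlcs_miss_rate(reference, hypothesis):.1%}")  # NLCS miss rate: 100.0%
```

Because the two dropped sounds are only 2 of the 17 reference words, the overall WER stays near 12% even though both clinically meaningful answers were lost. An aggregate metric can therefore look acceptable while hiding exactly the errors that matter most for patient safety.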

Patient Communication

Patient portal messaging has surged since the COVID-19 pandemic, with a 2023 report showing a 157% increase in messages compared to pre-pandemic levels. To manage inbox overload, healthcare systems are exploring generative AI for drafting responses to patient messages. Clinicians review and edit these drafts before sending them to patients.

A 2024 study found that primary care physicians rated AI-generated responses higher in communication style and empathy than those written by providers. However, the AI-generated responses were often longer and more complex, which could pose challenges for patients with lower health literacy or English literacy.

There are also potential risks to clinical decision-making. A 2024 simulation study found that the content of physicians' replies to patient messages changed when they used AI assistance, a sign of automation bias (the tendency to defer to an automated suggestion) that could affect patient outcomes. Although most AI-generated drafts posed minimal safety risks, a small share could have resulted in severe harm or death if sent unedited.

Although GenAI may reduce the cognitive load of writing replies, it might not significantly decrease the overall time spent on patient communications. A recent study showed that while providers felt less emotional exhaustion when using AI to draft messages, the time they spent reading, writing, and replying to messages remained unchanged from pre-pilot levels.

Discharge Summaries

Generative AI has also been shown to improve the readability of patient discharge summaries. A study published in JAMA Network Open demonstrated that GenAI could lower the reading level of discharge notes from an eleventh-grade to a sixth-grade level, which is more accessible to patients across a range of health literacy levels. However, accuracy remains a concern. Physician reviews of these AI-generated summaries found that while some were complete, others contained omissions and inaccuracies that raised safety concerns.

Balancing AI’s Benefits with Oversight

While generative AI shows promise in alleviating the documentation burden and improving patient communication, challenges remain. Issues such as accurately capturing non-lexical conversational sounds and ensuring document accuracy underscore the need for careful provider oversight.

As AI technologies continue to evolve, balancing their benefits with provider review will be crucial for safe and effective implementation in healthcare.