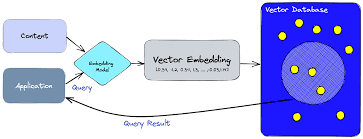

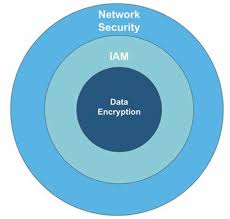

Selling has never been easy, and it’s not getting any simpler. Sales representatives are under constant pressure to research markets, navigate gatekeepers, and craft compelling pitches to win over decision-makers. But in today’s market, that’s not enough. Nearly 90% of business buyers expect personalized, insightful interactions, and meeting that expectation takes more than persuasive messaging. It demands access to accurate, real-time data.

The challenge? Sales reps often struggle to find the information they need. Instead of focusing on closing deals, they waste time chasing down customer data, piecing together fragmented insights, or working from outdated information. The root cause? Data silos.

Data Silos are Crippling Sales Efficiency

In most companies, critical customer data is scattered across disconnected systems. This fragmented data structure creates massive blind spots for sales teams, and the impact is costly: missed opportunities, slower deal cycles, and lost revenue. Without a unified approach to data management, sales teams are limited by incomplete information, which prevents them from delivering personalized, high-impact customer experiences.

The Answer: Data Activation

The solution isn’t just unifying your data; it’s activating it. Data activation means making your customer data accessible, actionable, and visible within your CRM so your sales team can use it in real time. It eliminates the need to toggle between systems, request data from other teams, or work from static spreadsheets. Instead, activated data flows directly into the workflows and tools your sales reps use every day, giving them everything they need to engage, sell, and close deals faster.

Data activation ensures that every team member works from the same real-time, unified view of the customer, eliminating data silos and transforming sales productivity.
Why Data Activation is a Game-Changer for Sales

By bringing your unified data directly into your CRM, your sales team gains immediate access to valuable insights that drive better outcomes. Here are some powerful data types that become actionable through data activation:

1. Web Engagement Data

Understand customer behavior based on their interactions with your website. Track which products or services prospects have browsed, downloaded, or engaged with, so your sales team can tailor conversations and offers accordingly.

2. Marketing Campaign Data

Eliminate disjointed outreach by giving your sales team visibility into marketing campaigns. Sales reps can instantly see which emails, ads, or events a prospect engaged with, so their outreach feels relevant, not redundant.

3. Consumption Data

Track product usage, subscriptions, and consumption patterns from your ERP or product database. This data helps sales reps identify upsell and cross-sell opportunities or proactively prevent churn.

4. Unstructured Data (Emails, Call Logs, Chat Transcripts)

Unlock insights from past customer interactions by analyzing emails, call center transcripts, chat logs, and even social media comments. Sales teams can use this data to understand sentiment, previous objections, and overall engagement history.

5. Billing and Subscription Data

Integrate billing, purchase, and subscription information directly into your CRM. This allows sales reps to track contract renewals, upcoming billing cycles, or outstanding invoices, enabling more proactive and strategic outreach.

6. Third-Party Data for Enhanced Lead Scoring

Enhance your lead scoring models with third-party data, such as firmographic information, buying intent signals, or demographic insights. This helps your team prioritize high-quality leads and drive faster conversions.
Why Third-Party Data Tools Fall Short

Many organizations attempt to solve their data challenges by investing in third-party data warehouse and lakehouse platforms like Snowflake, Databricks, or Redshift. While these tools excel at aggregating data, they introduce a new problem: the data still sits in a silo. Because it lives outside your CRM, it remains out of reach of the tools your sales reps work in every day.

This is why true data activation matters. It doesn’t just unify your data; it embeds it directly into your sales reps’ day-to-day tools, making insights instantly actionable.

The Competitive Advantage of Data Activation

By embracing data activation, your organization gains three major competitive advantages:

✅ 1. Increased Sales Productivity

Sales reps no longer waste time tracking down information or switching between systems. With all customer data at their fingertips, they can spend more time building relationships and closing deals.

✅ 2. Enhanced Personalization at Scale

With access to web behavior, campaign engagement, and product usage data, your team can personalize every interaction at scale. This drives higher conversion rates and better customer experiences.

✅ 3. Smarter Forecasting and Planning

By integrating billing, subscription, and past purchase data, sales managers gain more accurate revenue forecasting and better visibility into growth opportunities.

Activate Your Data. Unlock Your Revenue.

The future of sales is not about more tools; it’s about better data accessibility. Data activation eliminates silos, unlocks powerful insights, and delivers real-time, actionable data directly into your CRM, where your sales team can act on it. The result? Faster sales, higher revenue, and exceptional customer experiences.

Ready to activate your data and supercharge your sales performance? Start by bringing all your data (web, marketing, subscription, and service) directly into your CRM. Your sales team will thank you, and your revenue will show it.