Qwary Salesforce Integration

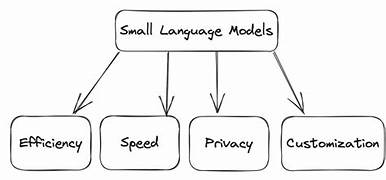

Qwary Enhances Customer Insights with New Salesforce Integration

HERNDON, Va., Aug. 13, 2024 /PRNewswire/ -- While surveys have long been a staple for gathering customer feedback, data entry often poses a challenge in obtaining comprehensive insights. Qwary’s new Salesforce integration aims to resolve this issue by enabling seamless data transfer and synchronization between the two platforms. This integration allows teams to consolidate customer information into a single hub, providing real-time visibility and enhancing strategic planning and collaboration. Key features include creating email campaigns, importing contacts, mapping survey results, and automating event-based workflows.

What Is Qwary’s Salesforce Integration?

Qwary’s Salesforce integration is designed to streamline the analysis of Salesforce survey data, offering a more efficient way to understand customer interactions with your brand. By integrating survey feedback with CRM data, this tool helps you quickly adapt your products and services to meet evolving customer needs. It tracks customer journeys, collects feedback, and reveals pain points, enabling you to deliver tailored solutions.

Benefits of Using Qwary’s Salesforce Integration

Qwary’s integration offers several notable benefits:

Automate Feedback Collection: The integration automates the feedback collection process by triggering surveys at strategic points in the customer lifecycle. This allows your team to act swiftly to foster engagement and generate leads.

Gain Actionable Insights: Seamlessly integrating with Salesforce CRM, Qwary scores, analyzes, and enriches customer data, helping your team identify emerging trends and seize opportunities for personalization and customer development.

Synchronize Data Automatically: With Qwary’s integration, your contact data is consolidated into a single, reliable source of truth. Whether you’re using Salesforce or Qwary, automated data synchronization ensures consistency and provides real-time updates.

Collaborate Effectively: The integration promotes effective teamwork by sharing data between Salesforce and Qwary, enabling your team to solve problems collaboratively and refine strategies to boost customer retention.

Key Capabilities

Qwary’s Salesforce integration excels in managing customer feedback, automating workflows, and consolidating contact data:

Salesforce Workflow Automation: The integration simplifies scheduling and automating survey triggers, eliminating manual processes. Surveys can be initiated via email or following significant events, with responses seamlessly mapped into Salesforce. This creates a comprehensive view of customer behavior, helping your team act on insights, strengthen connections, and enhance satisfaction.

Contact Data Importation: Qwary facilitates quick access to Salesforce contacts, providing a holistic view of your customer base. The integration streamlines contact data importation and updates, eliminating manual data entry and speeding up data management.

Potential Business Impacts

By combining automation, synchronization, and data consolidation with a user-friendly interface, Qwary’s Salesforce integration enhances your sales team’s ability to collect and leverage customer feedback. Immediate access to comprehensive consumer insights allows your business to respond promptly to customer needs, improving satisfaction and loyalty. Real-time data aggregation helps your company adapt quickly and refine offerings to exceed customer expectations.

Stay Ahead with Qwary’s Salesforce Integration

Qwary continuously updates its solutions to meet the evolving needs of businesses focused on customer engagement. Leveraging automation, synchronization, and advanced analytics through an accessible platform, Qwary’s Salesforce integration empowers your team to enhance offerings and connect with customers efficiently.
By optimizing the use of survey data and Salesforce feedback, Qwary keeps your business at the forefront of market trends, enabling you to consistently delight your customers.