Optimizing AI Agent Performance Through Strategic Data Management

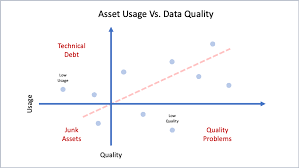

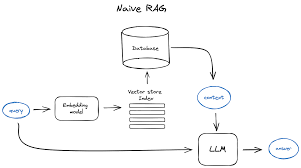

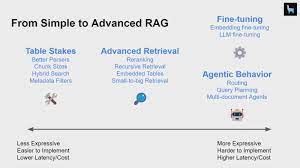

The Foundation of Effective AI Implementation

The success of your Agentforce deployment directly correlates with your organization’s data maturity. While comprehensive, well-structured data yields optimal AI performance, businesses at any stage of their data journey can benefit from implementing AI agents. The key lies in adopting a strategic, phased approach that delivers measurable impact while building toward long-term data excellence.

Building a Robust Data Infrastructure

Data Quality Optimization

The principle of “garbage in, garbage out” remains particularly relevant for AI systems. To ensure high-quality outputs:

Data Unification Strategy

Creating a centralized knowledge ecosystem enables AI agents to deliver consistent, informed responses. Essential integration points include:

Platforms like Unified Knowledge facilitate seamless consolidation of disparate data sources into Salesforce, enhancing AI grounding effectiveness.

Knowledge Base Development for AI Excellence

Effective AI Grounding Techniques

Retrieval-augmented generation (RAG) transforms your knowledge base into a powerful AI enabler by:

Knowledge Base Optimization

To maximize AI agent performance:

Continuous Knowledge Improvement Cycle

Proactive Knowledge Maintenance

Implement these practices to sustain AI relevance:

Feedback-Driven Enhancement

Leverage multiple feedback channels to identify knowledge gaps:

Strategic Implementation Framework

Focused Use Case Development

Begin with high-impact scenarios by:

Phased Deployment Methodology

Channel Optimization Strategy

Alignment with Business Objectives

Tailor channel strategy to organizational priorities:

Customer Journey Mapping

Human-AI Collaboration Model

Workforce Transformation

Seamless Transition Protocols

Performance Measurement Framework

Key Success Metrics

By implementing this comprehensive framework, organizations can systematically enhance their AI capabilities while delivering immediate business value and building toward increasingly
sophisticated implementations. The approach balances quick wins with long-term transformation, ensuring sustainable success in AI-powered customer service.
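The retrieval-augmented generation (RAG) grounding described under Effective AI Grounding Techniques can be illustrated with a minimal, library-free sketch. It assumes a tiny in-memory knowledge base (the `ARTICLES` dictionary and its contents are hypothetical), scores each article against a question with bag-of-words cosine similarity, and wraps the best matches in a prompt that tells the model to answer only from those sources. This is not how Agentforce or Unified Knowledge implement retrieval; production systems typically use embedding-based vector search, but the shape of the step is the same: retrieve relevant knowledge first, then generate from it.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge articles standing in for a real knowledge base.
ARTICLES = {
    "reset-password": "To reset your password, open Settings, choose Security, and click Reset Password.",
    "refund-policy": "Refunds are issued within 14 days of purchase when the item is returned unused.",
    "shipping-times": "Standard shipping takes 3 to 5 business days; express shipping takes 1 to 2 days.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-frequency Counters (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, articles, k=2):
    """Return up to k article ids ranked by similarity, skipping articles with no overlap."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(body))), art_id) for art_id, body in articles.items()]
    scored.sort(reverse=True)
    return [art_id for score, art_id in scored[:k] if score > 0]


def build_grounded_prompt(query, articles, k=2):
    """Assemble a prompt that restricts the model to the retrieved articles."""
    ids = retrieve(query, articles, k)
    context = "\n".join(f"[{i}] {articles[i]}" for i in ids)
    return (
        "Answer using only the sources below. If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    print(build_grounded_prompt("How do I reset my password?", ARTICLES))
```

Keeping retrieval separate from prompt assembly means `tokenize` and `cosine` could be swapped for an embedding model and a vector index without changing `build_grounded_prompt`.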
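To make the “garbage in, garbage out” principle from Data Quality Optimization concrete, here is a hedged sketch of an automated audit that could run before records are consolidated and used for grounding. It flags records with blank required fields and records that collide on a normalized email address, a typical symptom of the disparate sources a data unification effort aims to merge. The field names in `REQUIRED_FIELDS`, the `SAMPLE_RECORDS` data, and the `audit_records` function are all hypothetical and do not correspond to any Salesforce API.

```python
# Hypothetical fields every record must populate before it is used for grounding.
REQUIRED_FIELDS = ("name", "email", "account_id")

# Hypothetical sample data merged from two source systems.
SAMPLE_RECORDS = [
    {"name": "Ada Lovelace", "email": "ada@example.com", "account_id": "A1"},
    {"name": "", "email": "grace@example.com", "account_id": "A2"},
    {"name": "Ada L.", "email": " ADA@example.com ", "account_id": "A1"},
    {"name": "Alan Turing", "email": None, "account_id": "A3"},
]


def normalize_email(email):
    """Trim whitespace and lowercase so trivially different emails compare equal."""
    return email.strip().lower()


def audit_records(records):
    """Report records with blank required fields and pairs of records sharing an email."""
    missing = {}      # record index -> list of blank required fields
    first_seen = {}   # normalized email -> index of the first record using it
    duplicates = []   # (first index, later index) pairs with the same normalized email
    for idx, rec in enumerate(records):
        # A field counts as blank if it is absent, None, or only whitespace.
        gaps = [f for f in REQUIRED_FIELDS if not str(rec.get(f) or "").strip()]
        if gaps:
            missing[idx] = gaps
        email = rec.get("email")
        if email and email.strip():
            key = normalize_email(email)
            if key in first_seen:
                duplicates.append((first_seen[key], idx))
            else:
                first_seen[key] = idx
    return {"missing": missing, "duplicates": duplicates}


if __name__ == "__main__":
    print(audit_records(SAMPLE_RECORDS))
```

In this sample, the second and fourth records are reported for blank fields, and the first and third are reported as likely duplicates of the same customer, which is the kind of issue worth resolving before an agent grounds its answers on the data.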