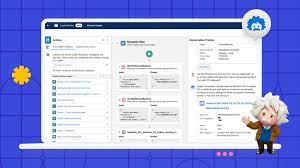

Agentforce Powered Marketing

Maximize Team Productivity and Customer Engagement with Agentforce and AI-Powered Marketing Tools

Transform your marketing operations with Agentforce, an advanced AI-powered suite seamlessly integrated into your platform. From building end-to-end campaigns to personalizing touchpoints in real time, Agentforce empowers your team to optimize performance with actionable AI insights. Here’s how:

Revolutionize Campaign Management with Agentforce

Agent-Driven Campaign Briefs: Streamline campaign creation with Agentforce, which uses structured and unstructured data from Data Cloud to create tailored campaign briefs. Define your target segments and key messages effortlessly with the support of AI.

AI-Powered Content Creation: Leverage Agentforce to generate on-brand content at scale, including email subject lines, body copy, and SMS messages. Every piece of content aligns with your brand guidelines and campaign goals, ensuring consistency and relevance across audiences.

Unified SMS Conversations: Turn static promotions into dynamic, two-way conversations with Agentforce Unified SMS. Automatically connect customers to AI agents for tasks like appointment scheduling and offer redemption, delivering seamless customer experiences.

Supercharge Insights and Actions with Data Cloud

Agent-Driven AI Segmentation: Create target audience segments in minutes using natural language prompts. With Agentforce and Data Cloud working in harmony, agents translate prompts into precise segment attributes, with no technical expertise or SQL required.

Integrate or Build Custom AI Models: Develop predictive AI models with clicks, not code, or bring in existing models via direct integrations with tools like Amazon SageMaker, Google Vertex AI, or Databricks. Use these models to generate actionable predictions, such as purchase propensity or churn likelihood.
Secure, Harmonized Data Foundation: Keep your data safe on the Einstein Trust Layer while enabling agents to analyze harmonized, structured, and unstructured data in Data Cloud. This ensures informed decision-making without compromising security.

Automate Intelligent Journeys with Marketing Cloud Engagement

Journey Optimization: Automate personalized campaign variations with predictive AI. Optimize engagement by tailoring content, timing, channels, and frequency dynamically across customer journeys.

Generative AI for Content Creation: Solve the content bottleneck with generative AI tools that instantly create on-brand copy and visuals grounded in first-party data, campaign insights, and brand guidelines, all while safeguarding trust.

Real-Time Messaging Insights: Stay proactive with Einstein Messaging Insights, which flags engagement anomalies like sudden drops in click-through rates. These real-time insights enable quick resolutions, preventing performance surprises.

Unified WhatsApp Conversations: Transform WhatsApp into a dynamic two-way engagement channel. Use a single WhatsApp number to connect marketing and service teams while enabling AI-driven self-service actions like appointment booking and offer redemptions.

Scale Lead Generation and Account-Based Marketing

Agent-Driven Campaign Creation: Accelerate campaign planning with Agentforce, which handles everything from briefs to audience segmentation, content, and journey creation. Ground campaigns in real-time customer data for accurate targeting, all with marketer oversight for approvals.

AI Lead and Account Scoring: Boost alignment between marketing and sales with Einstein AI Scoring, which identifies top leads and prospects automatically. Improve ABM strategies with automated account rankings based on historical and behavioral data, driving higher conversions.

Full-Funnel Attribution: Gain end-to-end visibility with AI-powered multi-touch attribution.
Use models like Einstein Attribution to measure the impact of each channel, event, or team activity on your pipeline, boosting ROI and campaign efficiency.

Personalization on Auto-Pilot with AI

Objective-Based AI Recommendations: Set business objectives and let AI optimize product and content recommendations to achieve those goals.

AI-Automated Offers: Combine real-time customer behavior data with AI-driven insights to personalize offers across touchpoints. Offers tailored to each individual customer lead to higher satisfaction and conversion rates.

Real-Time Affinity Profiling: Use AI to uncover customer affinities, preferences, and intent in real time. Deliver hyper-personalized messaging and offers across your website, app, and other channels for maximum engagement.

Optimize Spend, Planning, and Performance with Marketing Cloud Intelligence

AI-Powered Data Integration: Say goodbye to spreadsheets and manual data maintenance. Automate data unification, KPI standardization, and cross-channel analytics with AI-powered connectors, saving time and boosting campaign effectiveness.

AI Campaign Performance Insights: Get interactive visualizations and AI-generated insights to adjust campaign spend and offers mid-flight. Use these insights to optimize ROI and maximize in-the-moment opportunities.

Predictive Budgeting and Planning: Allocate budgets more effectively with predictive AI. Real-time alerts help prevent overages or underspending, ensuring your marketing dollars are used efficiently for maximum return.

With Agentforce and AI marketing tools, your team can focus on what matters most: building stronger customer relationships and driving measurable results.