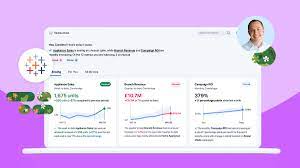

Salesforce Data Cloud Pioneer

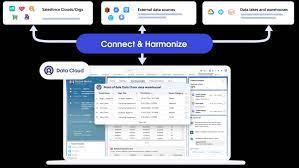

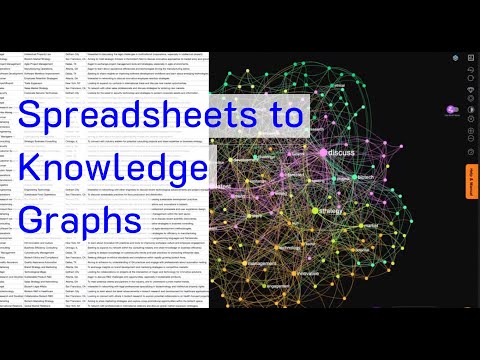

While many organizations are still building out their data platforms, Salesforce, a Data Cloud pioneer, has made a significant leap forward. By integrating metadata throughout its stack, Salesforce has turned the modern data stack into a comprehensive application platform: the Einstein 1 Platform. Developed under Muralidhar Krishnaprasad, executive vice president of engineering at Salesforce, the Einstein 1 Platform is built on the company’s metadata framework. It harmonizes metadata and connects it with AI and automation, marking a new era of data utilization.

The Einstein 1 Platform: Innovations and Capabilities

According to Krishnaprasad, Salesforce’s goal with the Einstein 1 Platform is to empower all business users (salespeople, service engineers, marketers, and analysts) to access, use, and act on all of their data, wherever it resides. The open, extensible platform not only unlocks trapped data but also equips organizations with generative AI functionality, enabling personalized experiences for employees and customers.

“Analytics is very important to know how your business is doing, but you also want to make sure all that data and insights are actionable,” Krishnaprasad said. “Our goal is to blend AI, automation, and analytics together, with the metadata layer being the secret sauce.”

In a conversation with George Gilbert, senior analyst at theCUBE Research, Krishnaprasad discussed the platform’s metadata integration, open-API technology, and key features. They explored how its extensibility and interoperability improve usability across a variety of data formats and sources.

Metadata Integration: Accommodating Any IT Environment

The Einstein 1 Platform is built on Trino, the open-source federated query engine, and on Spark for data processing. It offers a rich set of connectors and an open, extensible environment, enabling organizations to share data among warehouses, lakehouses, and other systems.
“We use a Hyper engine for sub-second response times in Tableau and other data explorations,” Krishnaprasad explained. “This in-memory OLAP engine ensures efficient data processing.”

The platform supports various machine learning options and lets users bring their own large language models. Whether they use Salesforce Einstein, Databricks, Vertex, SageMaker, or other solutions, users can work with their data without copying it.

The platform offers three levels of extensibility, enabling organizations to standardize and extend their customer journey models. Users can start with basic reference models and customize them. They can then generate insights, including AI-driven insights. Finally, they can introduce their own functions or triggers to act on those insights. Because the platform performs unification continuously, users can create different unified graphs to suit their needs.

“We’re a multimodal system, considering your entire customer journey,” Krishnaprasad said. “We provide flexibility at all levels of the stack to create the right experience for your business.”

The Triad of AI, Automation, and Analytics

The platform’s foundation ingests, harmonizes, and unifies data, producing a standardized metadata model that offers a 360-degree view of customer interactions. This approach unlocks siloed data, much of which exists in unstructured forms such as conversations, documents, emails, audio, and video.

“What we’ve done with this customer 360-degree model is to use unified data to generate insights and make these accessible across application surfaces, enabling reactions to these insights,” Krishnaprasad said. “This unlocks a comprehensive customer journey.”

For instance, when a customer views an ad and then visits the website, salespeople know what the customer is interested in, service personnel understand the customer’s concerns, and analysts have the information they need for business insights. Together, these capabilities improve customer engagement.
“Couple this with generative AI, and we enable a lot of self-service,” Krishnaprasad added. “We aim to provide accurate answers, elevating data to create a unified model and powering a unified experience across the entire customer journey.”