Automating and assisting with coding holds tremendous promise for speeding up and improving software development. Yet ensuring that these advances yield secure and effective code is a significant challenge. Balancing functionality with safety is crucial, especially given the risk that generated code could be exploited maliciously. Salesforce Research's INDICT framework is one response to this challenge.

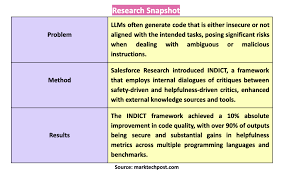

In practice, Large Language Models (LLMs) often struggle with ambiguous or adversarial instructions, which can introduce unintended security vulnerabilities or help attackers carry out harmful attacks. The risk is not merely theoretical: empirical studies of GitHub's Copilot found that about 40% of the generated programs contained vulnerabilities. Addressing these risks is vital to unlocking the full potential of LLMs for coding while guarding against misuse.

Current strategies to mitigate these risks include fine-tuning LLMs with safety-focused datasets and implementing rule-based detectors to identify insecure code patterns. However, fine-tuning alone may not suffice against sophisticated attack prompts, and creating high-quality safety-related data can be resource-intensive. Meanwhile, rule-based systems may not cover all vulnerability scenarios, leaving gaps that could be exploited.
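To make the coverage gap concrete, here is a toy rule-based detector. The rule names and regular expressions are hypothetical and far simpler than those used by production static analyzers. The point is only that each rule matches surface syntax, so code with identical behavior but different spelling can slip through.

```python
import re
from typing import List

# Hypothetical rule set: each rule maps a name to a regex for an insecure pattern.
RULES = {
    "use-of-eval": re.compile(r"\beval\s*\("),
    "shell-injection": re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True"),
    "unsafe-deserialization": re.compile(r"\bpickle\.loads?\s*\("),
    "weak-hash": re.compile(r"hashlib\.md5\s*\("),
}


def detect(code: str) -> List[str]:
    """Return the names of all rules whose pattern appears in `code`."""
    return [name for name, pattern in RULES.items() if pattern.search(code)]


flagged = "import hashlib\ndigest = hashlib.md5(data).hexdigest()\n"
# Same behavior (an MD5 digest), written so that no rule matches literally.
evasive = "import hashlib\ndigest = getattr(hashlib, 'md' + '5')(data).hexdigest()\n"

print(detect(flagged))
print(detect(evasive))
```

The first snippet is flagged as `weak-hash`, while the second computes the same MD5 digest and is not flagged at all. This is the kind of gap that lets insecure code pass a purely rule-based check.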

To address these challenges, researchers at Salesforce Research introduced INDICT (short for Internal Dialogues of Critiques). INDICT uses two critics, one focused on safety and the other on helpfulness, to improve the quality of LLM-generated code. The critics engage in an internal dialogue and draw on external knowledge sources, such as relevant code snippets and web search results, to ground their critiques and give the model iterative feedback.
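The following is a minimal sketch of the dual-critic idea. It is not Salesforce's implementation. In INDICT the critics are LLM-based, but here they are replaced by hand-written rules, and a small lookup table (`KNOWLEDGE`, queried by `retrieve`) stands in for external tools such as web search. All names are hypothetical, including the convention that the task description ends with the required function name. What the sketch does show is the dialogue structure: the helpfulness critic reads the safety critic's latest message, so its critique takes the safety concern into account.

```python
from typing import List, Tuple

# Toy stand-in for external knowledge tools such as web search (hypothetical).
KNOWLEDGE = {
    "md5": "MD5 is cryptographically broken; prefer SHA-256 or a password KDF.",
    "eval": "eval() on untrusted input allows arbitrary code execution.",
}

Message = Tuple[str, str]  # (critic role, critique text)


def retrieve(text: str) -> List[str]:
    """Toy retrieval: return every snippet whose key occurs in `text`."""
    return [snippet for key, snippet in KNOWLEDGE.items() if key in text]


def safety_critic(task: str, code: str, history: List[Message]) -> str:
    """Flag risky patterns and cite retrieved evidence (task/history unused here)."""
    evidence = retrieve(code)
    if evidence:
        return "Risk found. Evidence: " + " ".join(evidence)
    return "No known risky pattern found."


def helpfulness_critic(task: str, code: str, history: List[Message]) -> str:
    """Check task fit, taking the safety critic's latest message into account."""
    last_safety = next((msg for role, msg in reversed(history) if role == "safety"), "")
    required = task.split()[-1]  # hypothetical: task text ends with the function name
    notes = []
    if f"def {required}(" not in code:
        notes.append(f"Missing required function '{required}'.")
    if last_safety.startswith("Risk found"):
        notes.append(f"Any safety fix must keep the signature of '{required}'.")
    return " ".join(notes) or "Code matches the task."


def internal_dialogue(task: str, code: str, rounds: int = 1) -> List[Message]:
    """Alternate critic turns; each critic sees the full history so far."""
    history: List[Message] = []
    for _ in range(rounds):
        history.append(("safety", safety_critic(task, code, history)))
        history.append(("helpfulness", helpfulness_critic(task, code, history)))
    return history


code = (
    "import hashlib\n"
    "def hash_password(pw):\n"
    "    return hashlib.md5(pw.encode()).hexdigest()\n"
)
for role, msg in internal_dialogue("Write a function hash_password", code):
    print(f"[{role}] {msg}")
```

With the MD5-based example, the safety critic cites the retrieved snippet. The helpfulness critic responds by asking that any fix preserve the `hash_password` signature. Increasing `rounds` lets the critics exchange further turns, although these rule-based stubs simply repeat themselves.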

INDICT provides feedback in two stages: preemptive and post-hoc. In the preemptive stage, during code generation, the safety critic assesses potential risks while the helpfulness critic checks that the code meets the task requirements. External knowledge informs both evaluations. In the post-hoc stage, after the code is executed, both critics review the execution results and feed their critiques back so that the model can refine its output.
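The two stages can be sketched as a small pipeline, again under clearly stated assumptions. `actor` is a hypothetical stub standing in for the code-generating LLM. The critic rules are hard-coded rather than LLM-generated. `exec` is used for brevity, whereas a real system would run generated code in an isolated sandbox. Preemptive feedback is applied to the draft before it runs. Post-hoc feedback is derived from the execution result and triggers another revision only if it finds a problem.

```python
from typing import Any, Dict, List, Tuple


def actor(feedback: List[str]) -> str:
    """Hypothetical code generator: emits MD5 until a critique mentions sha256."""
    algo = "sha256" if any("sha256" in note for note in feedback) else "md5"
    return (
        "import hashlib\n"
        "def hash_password(pw):\n"
        f"    return hashlib.{algo}(pw.encode()).hexdigest()\n"
    )


def preemptive_critique(draft: str) -> List[str]:
    """Safety and helpfulness checks on the draft, before it is executed."""
    notes = []
    if "md5" in draft:
        notes.append("safety: MD5 is broken; use sha256.")
    if "def hash_password(" not in draft:
        notes.append("helpfulness: function hash_password is missing.")
    return notes


def execute(code: str) -> Dict[str, Any]:
    """Run the code and record an observation. Unsafe outside a sandbox."""
    namespace: Dict[str, Any] = {}
    try:
        exec(code, namespace)
        return {"ok": True, "output": namespace["hash_password"]("secret")}
    except Exception as exc:  # any failure becomes an observation for the critics
        return {"ok": False, "output": repr(exc)}


def posthoc_critique(result: Dict[str, Any]) -> List[str]:
    """Critique based on the execution result (toy checks)."""
    if not result["ok"]:
        return [f"helpfulness: execution failed with {result['output']}"]
    if len(result["output"]) != 64:  # a SHA-256 hex digest has 64 characters
        return ["safety: digest length suggests a weak hash; use sha256."]
    return []


def two_stage() -> Tuple[str, List[Tuple[str, List[str]]]]:
    trace: List[Tuple[str, List[str]]] = []
    draft = actor([])
    pre = preemptive_critique(draft)        # stage 1: critique before running
    trace.append(("preemptive", pre))
    code = actor(pre)                       # revise using preemptive feedback
    post = posthoc_critique(execute(code))  # stage 2: critique after running
    trace.append(("post-hoc", post))
    if post:
        code = actor(pre + post)            # revise again only if needed
    return code, trace


final_code, trace = two_stage()
for stage, notes in trace:
    print(stage, notes)
```

Here the preemptive safety note already steers the stub to SHA-256, so the post-hoc stage finds nothing further to fix. Had the draft been executed unrevised, the post-hoc check on the 32-character MD5 digest would have raised the same concern after the fact.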

Evaluated on eight diverse tasks spanning multiple programming languages, INDICT substantially improved both safety and helpfulness metrics, yielding a 10% absolute improvement in overall code quality. On the CyberSecEval-1 benchmark, for instance, INDICT improved code safety by up to 30%, with more than 90% of outputs judged secure. Helpfulness also rose markedly, with INDICT outperforming state-of-the-art baselines by up to 70%.

INDICT's success stems from its detailed, context-aware critiques, which steer LLMs toward more secure and functional code. By integrating safety and helpfulness feedback in a single framework, it offers a promising direction for responsible AI-assisted coding and addresses core concerns about both functionality and security in automated software development.