Predictive Analytics

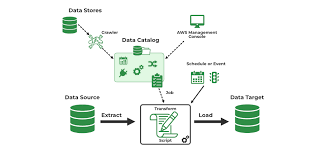

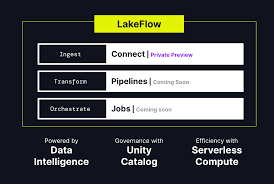

Industry forecasts predict an annual growth rate of 6% to 7%, fueled by innovations in cloud computing, artificial intelligence (AI), and data engineering. In 2023, the global data analytics market was valued at approximately $41 billion and is expected to reach $118.5 billion by 2029, at a reported compound annual growth rate (CAGR) of 27.1%. This expansion reflects the growing demand for advanced analytics tools that provide actionable insights. AI has notably improved the accuracy of predictive models, enabling marketers to anticipate customer behaviors and preferences more precisely.

“We’re on the verge of a new era in predictive analytics, with tools like Salesforce Einstein Data Analytics revolutionizing how we harness data-driven insights to transform marketing strategies,” says Koushik Kumar Ganeeb, a Principal Member of Technical Staff at Salesforce Data Cloud and a Data and AI Architect. Ganeeb’s leadership spans initiatives such as AI-powered Salesforce Einstein Data Analytics, Marketing Cloud Connector for Data Cloud, and Intelligence Reporting (Datorama). His expertise includes architecting large-scale data extraction pipelines that process trillions of transactions daily. These pipelines play a crucial role in the growth strategies of Fortune 500 companies, helping them scale their data operations efficiently by leveraging AI.

Ganeeb’s work has helped bring Salesforce Einstein Data Analytics to the forefront of business intelligence. Under his guidance, the platform’s advanced capabilities, such as predictive modeling, real-time data analysis, and natural language processing, have become pivotal in how businesses forecast trends, personalize marketing efforts, and make data-driven decisions.
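The growth figures quoted above can be checked with the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is only an arithmetic illustration: the helper names `cagr` and `project` are hypothetical, and the inputs are the article's own numbers. Under these assumptions, the two endpoint values imply a rate of roughly 19% per year, while compounding $41 billion at 27.1% for six years gives about $173 billion, which suggests the quoted 27.1% may refer to a different base year or market segment than the endpoint figures.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the article, in billions of US dollars.
start_2023 = 41.0
end_2029 = 118.5
years = 2029 - 2023

# Rate implied by the two endpoint values alone.
implied = cagr(start_2023, end_2029, years)
print(f"Implied CAGR 2023-2029: {implied:.1%}")

# Where the market would land if the quoted 27.1% rate held for six years.
at_quoted_rate = project(start_2023, 0.271, years)
print(f"$41B compounded at 27.1% for {years} years: ${at_quoted_rate:.1f}B")
```

Comparing the implied rate with the quoted rate is a quick way to see whether a forecast's start value, end value, and growth rate describe the same period.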

AI and Machine Learning: The Next Frontier

Beginning in 2018, Salesforce Marketing Cloud, a leading engagement platform used by top enterprises, faced challenges in extracting actionable insights and enhancing AI capabilities from rapidly growing data spread across diverse systems. Ganeeb was tasked with overcoming these hurdles, which led to the development of the Salesforce Einstein Provisioning Process. This process involved creating extensive data import jobs and establishing standardized patterns based on lessons learned from consumer adoption. These automated jobs handle trillions of transactions daily, delivering critical engagement and profile data in real time to meet the scalability needs of large enterprises. The data then flows into AI models that generate predictions at massive scale, such as Engagement Scores and insights into messaging and language usage across the platform.

“Integrating AI and machine learning into data analytics through Salesforce Einstein is not just a technological enhancement; it’s a revolutionary shift in how we approach data,” explains Ganeeb. “With our advanced predictive models and real-time data processing, we can analyze vast amounts of data instantly, delivering insights that were previously unimaginable.” This approach helps organizations make more informed decisions, driving growth and operational efficiency.

Real-World Success Stories

Under Ganeeb’s technical leadership, Salesforce Einstein Data Analytics has delivered results across industries by leveraging AI and machine learning to provide actionable insights and enhance business performance. In the past year, leading companies such as T-Mobile, Fitbit, and Dell Technologies have reported significant improvements after integrating Einstein. Ganeeb’s proficiency in designing and scaling data engineering solutions has been critical in helping these enterprises optimize performance.
“Scalability with Salesforce Einstein Data Analytics goes beyond managing data volumes: it ensures that every data point is converted into actionable insights,” says Ganeeb. His work processing petabytes of data daily underscores his commitment to precision and efficiency in data engineering.

Navigating Data Ethics and Quality

Despite the rapid growth of predictive analytics, Ganeeb emphasizes the importance of data ethics and quality. “The accuracy of predictive models depends on the integrity of the data,” he notes. Salesforce Einstein Data Analytics addresses this by curating datasets to ensure they are representative and free from bias, maintaining trust while delivering reliable insights. By implementing rigorous data quality checks and ethical considerations, Ganeeb ensures that Einstein Analytics not only delivers actionable insights but also fosters transparency and trust. This balanced approach is key to the responsible use of predictive analytics across various industries.

Future Trends in Predictive Analytics

The future of predictive analytics looks bright, with AI and machine learning poised to further refine the accuracy and utility of predictive models. “Success lies in embracing technological advancements while maintaining a human touch,” Ganeeb notes. “By combining AI-driven insights with human intuition, businesses can navigate market complexities and uncover new opportunities.” Ganeeb’s contributions to Salesforce Einstein Data Analytics exemplify this balanced approach, integrating cutting-edge technology with human insight to empower businesses to make strategic decisions. His work positions organizations to thrive in a data-driven world, helping them stay agile and competitive in an evolving market.

Balancing Benefits and Challenges: Predictive Analytics

While predictive analytics offers vast potential, Ganeeb recognizes the challenges.
Ensuring data quality, addressing ethical concerns, and maintaining transparency are crucial for its responsible use. “Although challenges remain, the future of AI-based predictive analytics is promising,” Ganeeb asserts. His work with Salesforce Einstein Data Analytics continues to push the boundaries of marketing analytics, enabling businesses to harness the power of AI for transformative growth.
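
The data quality and representativeness checks discussed above can be made concrete with a small sketch. It is not Salesforce Einstein's implementation, which the article does not describe. The function names `quality_report` and `representation_gaps`, the field names, the sample records, the reference shares, and the 10-point tolerance are all hypothetical, chosen only to illustrate the idea of screening a dataset before it feeds a predictive model.

```python
from collections import Counter


def quality_report(records, required_fields, key_field):
    """Count rows with missing required fields and rows that repeat a key."""
    missing = 0
    duplicates = 0
    seen = set()
    for row in records:
        # A field counts as missing when it is absent, None, or an empty string.
        if any(row.get(field) in (None, "") for field in required_fields):
            missing += 1
        key = row.get(key_field)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


def representation_gaps(records, group_field, reference_shares, tolerance=0.10):
    """Return groups whose dataset share differs from a reference share by more than `tolerance`."""
    counts = Counter(row.get(group_field) for row in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Hypothetical engagement records; one has a missing value and one repeats an id.
records = [
    {"id": 1, "region": "EMEA", "opens": 4},
    {"id": 2, "region": "EMEA", "opens": 0},
    {"id": 3, "region": "AMER", "opens": None},
    {"id": 3, "region": "AMER", "opens": 2},
    {"id": 4, "region": "EMEA", "opens": 1},
]
# Hypothetical share of each region in the population the model should serve.
reference = {"EMEA": 0.4, "AMER": 0.4, "APAC": 0.2}

report = quality_report(records, ["id", "region", "opens"], "id")
gaps = representation_gaps(records, "region", reference)
print(report)
print(gaps)
```

In this toy dataset the report flags one incomplete row and one duplicate key, and the gap check flags EMEA as over-represented and APAC as absent. A real pipeline would run such checks continuously and at far larger scale, but the principle of validating integrity and coverage before modeling is the same.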