Connected Vehicles

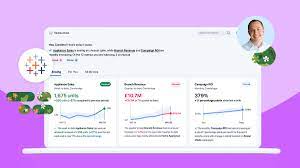

Revolutionizing the Automotive Industry: Salesforce’s Connected Car App

The automotive industry has always been a beacon of innovation, consistently pushing the boundaries to enhance the driving experience. From the iconic Model T and the assembly line to today’s electric and autonomous vehicles, the evolution of automobiles has been driven by an unyielding pursuit of progress.

I actually purchased a new-to-me car today, and with the connected vehicle on the horizon I’m kind of glad I’ll be able to upgrade in a couple of years. Bluetooth and backup cameras are great. But a car that can tell the dealership to get me on the horn before some automotive calamity occurs? The future is here, my friends.

Connected Vehicles for Better Experiences

Now, as digital transformation reshapes industries, a new chapter is emerging in automotive innovation: the connected car. Leading this charge is Salesforce, a global powerhouse in customer relationship management (CRM), with the introduction of its Connected Car App, poised to redefine in-car experiences for both drivers and passengers. From my personal buying experience today, the car business could use some customer relationship management!

The Future of In-Car Connectivity

Salesforce’s Connected Car App is more than just a technological enhancement; it represents a fundamental shift in how we interact with our vehicles. By leveraging Salesforce’s Customer 360 platform, this app creates personalized, engaging experiences that go far beyond traditional automotive features.

The Connected Car App is designed to make every journey more intuitive and efficient, offering real-time insights and services tailored to the unique needs of each driver. Whether it’s maintenance alerts, optimized route suggestions based on traffic, or personalized entertainment options, the app transforms the car into a truly smart companion on the road. A GPS feature? I guess I can plan on deleting Waze off my phone in the near future!
Powered by Salesforce Customer 360

At the heart of the Connected Car App is Salesforce’s Customer 360 platform, which delivers a comprehensive, 360-degree view of each customer. This integration ensures that the app provides tailored experiences based on a deep understanding of the driver’s preferences, habits, and history. It isn’t going to know you only by a vehicle loan number, a VIN, or an email address.

For instance, a driver who frequently takes long road trips might receive customized recommendations for rest stops, dining options, and attractions along their route. Meanwhile, commuters could benefit from real-time updates on traffic, weather, and parking availability. The app’s ability to anticipate and respond to the driver’s needs in real time distinguishes it from traditional in-car systems. I can just hear my car now, advising me it has been one hour since I stopped for coffee, and she’s worried about my sanity.

Enhancing Customer Loyalty and Satisfaction with Connected Vehicles

The Connected Car App offers significant potential to boost customer loyalty and satisfaction. By delivering a personalized driving experience, automakers can strengthen relationships with customers, transforming each journey into an opportunity to build brand loyalty. If Toyota is suddenly going to treat me like Shannan Hearne instead of customer # xxxxx, I would be ecstatic.

Additionally, the app’s capability to collect and analyze data in real time opens new avenues for automakers to engage with their customers. Predictive maintenance reminders, targeted promotions, and special offers are just a few examples of how the app fosters a deeper connection between the brand and the driver.

Oh, yeah.
My connected vehicle app is DEFINITELY going to be talking to me about changing my oil (I’m not exactly diligent) and how great the latest model of Toyota is (I drove a Corolla for 18 years and have also owned a Tacoma, a Tundra, and a Prius). And if it would add coffee coupons, I would be golden.

A New Era of Automotive Innovation

Salesforce’s Connected Car App marks a pivotal moment in the automotive industry’s digital transformation. As vehicles become increasingly connected, the opportunities for innovation keep growing. Salesforce is at the forefront with a solution that not only enhances the driving experience but also empowers automakers to build stronger, more meaningful relationships with their customers.

In a world where customer expectations are constantly growing, the Connected Car App is a game-changer. Customers, even car owners, expect their brands to know them and recognize them. By integrating Salesforce’s CRM capabilities directly into vehicles, the app creates a seamless, personalized experience that stands out. As we look ahead, it’s clear that the Connected Car App is just the beginning of an exciting new era of automotive innovation. As a marketer at heart and a technologist by trade, I’m really excited about the potential here.

Connected Vehicle: A Unified Digital Foundation

Salesforce’s Connected Vehicle platform provides automakers with a unified, intelligent digital foundation, enabling them to reduce development time and roll out features and updates faster. The platform integrates vehicle, Internet of Things (IoT), driver, and retail data from various sources, including AWS IoT FleetWise and the Snapdragon® Car-to-Cloud Connected Services Platform, to enhance driver experiences and ensure smooth vehicle operation.

Can you imagine a smart app like the Connected Vehicle talking to your loyalty apps for gas stations, convenience stores, and grocery stores?
I would be driving down the interstate and the app would tell me there is a Starbucks ahead AND I have a 10% off coupon.

Automakers and mobility leaders like Sony Honda Mobility are already exploring the use of Connected Vehicle to deliver better experiences for their customers. The platform’s ability to access and integrate data from any source in near real time allows automakers to personalize driver experiences, in-car offers, and safety upgrades.

Why It Matters

By 2030, every new vehicle sold will be connected, and the advanced, tech-driven features they provide will be increasingly important to consumers. A recent Salesforce survey revealed that drivers already consider connected features to be nearly as important as a car’s brand. Connected Vehicle accelerates this evolution, enabling automakers to immediately deliver branded, customized experiences tailored to