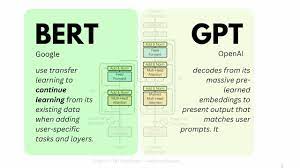

Salesforce Unlimited Plus (UE+) is an advanced edition that bundles specialized, industry-tailored features, making it best suited to large organizations and enterprises that need robust, integrated solutions for complex business processes and customer relationship management.

Salesforce Unlimited+ Edition Explained.

Target Audience

UE+ is targeted toward large enterprises that need extensive CRM functionality combined with AI and data analytics capabilities. This solution is ideal for organizations that:
• Manage complex customer relationships across multiple channels.
• Require deep integration of data and processes across departments.
• Want to leverage advanced AI capabilities for predictive insights and automation.
• Need industry-specific solutions that can be customized for unique business requirements.

The integration of various Salesforce clouds (e.g., Sales Cloud, Service Cloud, Data Cloud) with enhanced features like AI and industry-specific capabilities makes UE+ a comprehensive solution for organizations aiming to streamline their operations and gain a competitive edge through advanced technology adoption.

Here are the five Salesforce editions for every purpose:
• Starter/Essentials: Ideal for small businesses, offering basic contact, lead, and opportunity management.
• Professional: Tailored for mid-sized companies with enhanced sales forecasting and automation capabilities.
• Enterprise: Geared towards larger organizations, providing advanced customization, reporting, and integration options.
• Unlimited: Offers comprehensive functionality, customizability, 24/7 support, and access to premium features like generative AI.
• Unlimited Plus: Most robust solution for businesses of all sizes, featuring additional functionalities and enhanced capabilities.

Key Considerations:
• Business Size: Consider the number of users and overall business scale when choosing an edition.
• Features Needed: Identify the specific features crucial for your sales, service, or marketing processes.
• Scalability: Choose an edition that accommodates your projected growth and future needs.
• Budget: Evaluate the cost of each edition against its offered features and value proposition.

Sales Cloud Unlimited+ Edition Features:
• Account and Contact Management: Complete visibility of customer profiles, including activity history and communications.
• Opportunity Management: Tracking and details of every sales deal at each stage.
• Pipeline Inspection: A comprehensive tool that allows sales managers to monitor pipeline changes, offering AI-driven insights and recommendations to optimize sales strategies.
• Einstein AI Capabilities: Includes tools like Einstein Conversation Insights, which transcribes and analyzes sales calls, highlighting key parts for review and deeper analysis.
• Customizable Reports and Dashboards: Enhanced capabilities for building real-time reports and visualizations to track sales metrics and forecasts.
• Advanced Integration Features: Integration with external data and systems through various APIs, including REST and SOAP.
• Automation and Customization: Extensive options for workflow automation and personalization of user interfaces and customer interactions using Flow Builder and Lightning App Builder.
• Developer Tools: Access to tools like Developer Sandbox, which provides a safe environment for testing and app development.

Service Cloud Unlimited+ Features:
• Einstein Bots: AI-powered chatbots that handle customer inquiries automatically, available 24/7 across various communication channels.
• Enhanced Messaging: Integration with popular messaging platforms like WhatsApp, SMS, and Apple Messages to facilitate seamless customer interactions.
• Feedback Management: Tools to gather and analyze customer feedback directly within the CRM.
• Self-Service Capabilities: Customizable help centers and service catalogs that allow customers to find information and resolve issues independently.
• Field Service Tools: Comprehensive management of field operations, including work order and asset management.
• Real-Time Analytics: Advanced reporting features for creating in-depth analytics to monitor and improve customer service processes.

Additional features include Data Cloud, Generative AI, Service Cloud Voice, Digital Engagement, Feedback Management, Self-Service, and Slack.

Salesforce Unlimited+ for Industries

UE+ for Industries includes Unlimited+ for Sales and Service together with industry-specific data models and capabilities to help customers drive faster time to value within their sectors:
• Financial Services Cloud UE+ for Sales and Financial Services Cloud UE+ for Service help banks, asset management firms, and insurance agencies connect all of their customer data on one platform and embed AI to deliver personalized financial engagement at scale.
• An insurance carrier can use Financial Services Cloud UE+ to connect engagement data like emails, webinars, and educational content with third-party conference attendance, social media follows, and business performance data to understand what is motivating agents, helping drive more personalized relationships and grow revenue with Data Cloud and Einstein AI.
• Health Cloud UE+ for Service helps healthcare, pharmaceutical, and other medical organizations improve response times at their contact centers and offer digital healthcare services with built-in intelligence, real-time collaboration, and a 360-degree view of every patient, provider, and partner.
• A hospital can use the bundle to quickly create a personalized, AI-powered support center that triages requests and speeds up time to care with self-service tools like scheduling, connecting patients and members with care teams on their preferred channels.
• Manufacturing Cloud UE+ for Sales brings together tools for manufacturing organizations to build their data foundation, embed AI capabilities across the sales cycle, and maximize productivity, empowering them to scale their commercial operations and grow revenues.
• A manufacturer can now look across the entire book of business to see how companies are performing against negotiated sales agreements and then use AI-generated summaries to determine where to prioritize their time and resources.

By Tectonic’s Architecture Team