Hyperscalers’ Scale and AI Influence Shape Partner Ecosystems

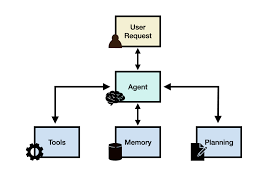

Despite their seemingly saturated networks, the largest cloud vendors continue to dominate as top ecosystems for service providers, according to a recent survey.

Hyperscalers are playing a critical role in partner alliances, a trend that has only intensified in recent years. A study released by Tercera, an investment firm specializing in IT services, highlights the dominance of cloud giants AWS, Google Cloud, and Microsoft Azure in the partner ecosystem landscape. More than 50% of the 250 technology service providers surveyed by Tercera identified one of these three vendors as their primary partner. This data comes from Tercera’s third annual report on the Top 30 Partner Ecosystems.

The report emphasizes the “gravitational pull” of these hyperscalers, attracting partners despite their already vast networks. Each of the major cloud vendors maintains relationships with thousands of software and services partners.

“The hyperscalers continue to defy the law of large numbers when you look at how many partners are in their ecosystems,” said Michelle Swan, CMO at Tercera.

The Shift in Channel Alliances

The emergence of cloud vendors as top partners for service providers has been evident since at least 2021. That year, a survey by Accenture of 1,150 channel companies found that AWS, Google, and Microsoft accounted for the majority of revenue for these partners. This represents a significant shift in channel economics, where traditionally large hardware companies occupied the top spots in partner alliances.

AI’s Role in Partner Ecosystem Growth

The rise of generative AI (GenAI) is reshaping alliance strategies, as service providers increasingly align themselves with hyperscalers and their AI technology partners. For instance, AWS channel partners interested in GenAI are likely to work with Anthropic, following Amazon’s $4 billion investment in the AI company. Meanwhile, Microsoft partners tend to collaborate with OpenAI, as Microsoft has committed up to $13 billion in investments to expand their partnership.

“They have their own solar systems,” Swan remarked, referencing AWS, Google, Microsoft, and the AI startups within their ecosystems.

Tiers of Partner Ecosystems

Tercera categorizes its top 30 ecosystems into three tiers. The first tier, known as “market anchors,” includes AWS, Google, Microsoft, and large independent software vendors (ISVs) such as Salesforce and ServiceNow. The second tier, “market movers,” features publicly traded vendors with evolving partner ecosystems. The third tier, “market challengers,” is made up of privately held vendors with a partner-centric focus, such as Anthropic and OpenAI.

Generative AI Ecosystem Survey

A 2024 generative AI survey conducted by TechTarget and its Enterprise Strategy Group supports the idea that the leading cloud vendors play a central role in AI ecosystems. In a poll of 610 GenAI decision-makers and users, Microsoft topped the list of ecosystems supporting GenAI initiatives, with 54% of respondents citing it as the best ecosystem. Microsoft’s partner, OpenAI, followed with 35%. Google and AWS ranked third and fourth, with 30% and 24% of the responses, respectively.

The survey covered a wide range of industries, including business services and IT, further reinforcing the dominant role hyperscalers play in shaping AI and partner ecosystems.