Split-Testing Hyperlink Destinations in Salesforce

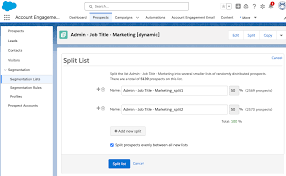

How to A/B Test Link URLs in Salesforce Campaigns

Want to optimize your email conversions without changing your message? Here's how to test different destination URLs while keeping the hyperlink text itself consistent across Salesforce campaigns.

Two Approaches to URL Split-Testing in Salesforce

1. Using Marketing Cloud (Native Solution)

Best for: Enterprise teams with Salesforce Marketing Cloud (SFMC) licenses

Capabilities:
✅ Built-in A/B testing for email links
✅ Automatic winner selection
✅ Salesforce-native analytics

Implementation:

Pro Tip: Test landing pages with different:

2. Third-Party Integrations

Best for: Teams without Marketing Cloud

| Platform | Key Feature | Salesforce Integration |
|---|---|---|
| HubSpot | Visual URL testing | Native connector |
| Mailchimp | Link-level A/B tests | AppExchange integration |
| ActiveCampaign | Multi-URL experiments | API-based sync |

Workflow Example:

Critical Implementation Steps

Phase 1: Setup
Phase 2: Execution
Phase 3: Analysis

Key Metrics to Track in Salesforce:
📈 Click-through rate (Email > Campaign Influence)
💰 Conversion rate (Leads/Opportunities created)
⏱️ Time-to-conversion (Sales Analytics)

Advanced Tactics

Dynamic URL Testing

A simple Apex method can route each request to one of two destinations at random:

```apex
// Sample Salesforce Apex for dynamic routing (LinkRouter is an illustrative class name)
public with sharing class LinkRouter {
    public String getOptimizedLink() {
        // Take the remainder before abs(): Math.abs() of the most negative Integer overflows
        Integer randomizer = Math.abs(Math.mod(Crypto.getRandomInteger(), 100));
        // Roughly 50/50 split between the two placeholder destinations
        return (randomizer < 50) ? 'versionA_url' : 'versionB_url';
    }
}
```

Cross-Object Tracking

Limitations & Workarounds

⚠️ Core Salesforce Limitation
🔧 Solutions:

"Companies testing link destinations see 22% higher email conversion rates on average." (Salesforce State of Marketing Report)

Which approach fits your tech stack?
🔵 Marketing Cloud user? Start with Content Builder.
🟠 Using ESPs?
Explore AppExchange integrations today.
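The Dynamic URL Testing snippet picks a destination at random on every call, so a contact who clicks the same link twice may land on different pages, which blurs the Key Metrics comparison between variants. The following is a minimal sketch, not a Salesforce-provided feature, that swaps in hash-based bucketing: it hashes a recipient identifier (assumed here to be a Contact or Lead Id) together with a test name using SHA-256, so each recipient is pinned to one destination, and it adds helpers for comparing click-through or conversion rates per variant. The class `LinkSplitTest` and its methods `bucketFor`, `destinationFor`, `rate`, and `higherRate` are hypothetical names chosen for illustration.

```apex
// Hypothetical helper class: LinkSplitTest and its methods are illustrative
// names, not part of any Salesforce API.
public with sharing class LinkSplitTest {

    // Deterministically maps a recipient to a bucket in 0..99, so the same
    // recipient always resolves to the same destination for a given test.
    public static Integer bucketFor(String recipientId, String testName) {
        Blob digest = Crypto.generateDigest('SHA-256',
            Blob.valueOf(testName + ':' + recipientId));
        // First 7 hex characters = 28 bits, which always fits in a positive Integer
        String hex = EncodingUtil.convertToHex(digest).toLowerCase().substring(0, 7);
        Integer value = 0;
        for (Integer i = 0; i < hex.length(); i++) {
            value = value * 16 + '0123456789abcdef'.indexOf(hex.substring(i, i + 1));
        }
        return Math.mod(value, 100);
    }

    // Buckets 0..49 receive urlA; buckets 50..99 receive urlB.
    public static String destinationFor(String recipientId, String testName,
                                        String urlA, String urlB) {
        return bucketFor(recipientId, testName) < 50 ? urlA : urlB;
    }

    // Events (clicks or conversions) divided by recipients, to 4 decimal places.
    // Returns 0 when there are no recipients, to avoid dividing by zero.
    public static Decimal rate(Integer events, Integer recipients) {
        if (recipients == null || recipients == 0) {
            return 0;
        }
        Decimal numerator = events;
        Decimal denominator = recipients;
        return numerator.divide(denominator, 4);
    }

    // Returns 'B' only if variant B's rate is strictly higher; ties go to 'A'.
    // No statistical significance check is applied here.
    public static String higherRate(Integer eventsA, Integer sentA,
                                    Integer eventsB, Integer sentB) {
        return rate(eventsB, sentB) > rate(eventsA, sentA) ? 'B' : 'A';
    }
}
```

For example, `LinkSplitTest.destinationFor(contactId, 'spring-promo', urlA, urlB)` (where `'spring-promo'` is a made-up test name) returns the same URL for a given contact on every call, and changing the test name reshuffles recipients for the next experiment. Because `higherRate` only compares raw rates, a production test would still need a minimum sample size or significance check before declaring a winner.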