The Future of AI Agents

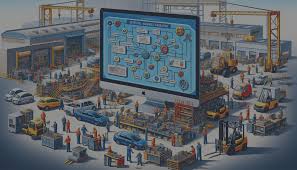

A Symphony of Digital Intelligence

Forget simple chatbots: tomorrow's AI agents will be force multipliers, integrating seamlessly into our workflows, anticipating needs, and orchestrating complex tasks with near-human intuition. Powered by platforms like Agentforce (Salesforce's AI agent builder), these agents will evolve in five transformative ways.

1. Beyond Text: Multimodal AI That Sees, Hears, and Understands

Today's AI agents mostly process text, but the future belongs to multimodal AI: agents that interpret images, audio, and video, unlocking richer, real-world applications.

How? Encoder networks convert voice, images, and video into tokens that a large language model (LLM) can process alongside text. Salesforce AI Research's xGen-MM-Vid is already pioneering video comprehension. Soon, agents will respond to spoken commands like:

"Analyze Q2 sales KPIs (revenue growth, churn, CAC), summarize key insights, and recommend two fixes."

This isn't just about speed; it's about uncovering hidden patterns in data that humans might miss.

2. Agent-to-Agent (A2A) Collaboration: The Rise of AI Teams

Today's AI agents work solo. Tomorrow, specialized agents will collaborate like a well-oiled team, multiplying efficiency. Human oversight remains critical, not for micromanagement but for ethics, strategy, and alignment with human goals.

3. Orchestrator Agents: The AI "Managers" of Tomorrow

Teams need leaders. Enter orchestrator agents, which coordinate specialized AIs the way a restaurant's general manager oversees the staff.

Example: A customer service request triggers several specialized agents, and the orchestrator integrates all of their inputs into a seamless, on-brand response.

Why it matters: Orchestrators make AI systems scalable and adaptable. New tools? Just plug them in; no rebuilds required.

4. Smarter Reasoning: AI That Thinks Like You

Today's AI follows basic commands. Tomorrow's will analyze, infer, and strategize like a human colleague.
Example: A marketing AI could:

Key Advances:

As Anthropic's Jared Kaplan notes, future agents will know when deep reasoning is needed and when it's overkill.

5. Infinite Memory: AI That Never Forgets

Current AI has the memory of a goldfish: each interaction starts from scratch. Future agents will retain context across sessions, much as a person refers back to earlier notes.

Impact:

The Bottom Line

The next generation of AI agents won't just assist; they'll augment human potential, turning complex workflows into effortless collaborations. With multimodal perception, team intelligence, advanced reasoning, and infinite memory, they'll redefine productivity across industries. The future isn't just AI. It's AI working for you, with you, and ahead of you.
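Of the ideas above, the orchestrator agent is the most architectural. A small sketch makes "just plug them in; no rebuilds required" concrete. It is written in Python under stated assumptions. Every name in it (`Specialist`, `Orchestrator`, `can_handle`, `run`) is hypothetical and is not Agentforce's API or any Salesforce interface. Specialists are stubbed with plain functions where a real system would call an LLM or a tool. Routing is a simple keyword match. A request that no specialist claims is handed to a human, reflecting the article's point about human oversight.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Specialist:
    """A hypothetical specialized agent: a routing rule plus a stubbed action."""
    name: str
    can_handle: Callable[[str], bool]  # does this agent apply to the request?
    run: Callable[[str], str]          # stand-in for an LLM or tool call


@dataclass
class Orchestrator:
    """Routes a request to matching specialists and merges their answers."""
    specialists: List[Specialist] = field(default_factory=list)

    def register(self, agent: Specialist) -> None:
        # Adding a capability is just appending to the registry;
        # handle() itself never changes.
        self.specialists.append(agent)

    def handle(self, request: str) -> str:
        # Keep only specialists whose routing rule matches, in registration order.
        matched = [s for s in self.specialists if s.can_handle(request)]
        if not matched:
            # Nothing applies: hand off to a person instead of guessing.
            return "No specialist matched; escalating to a human."
        # Integrate the partial answers into a single response.
        return " ".join(s.run(request) for s in matched)


if __name__ == "__main__":
    orchestrator = Orchestrator()
    orchestrator.register(Specialist(
        "billing", lambda r: "refund" in r.lower(), lambda r: "Refund approved."))
    orchestrator.register(Specialist(
        "shipping", lambda r: "order" in r.lower(), lambda r: "Order shipped."))
    print(orchestrator.handle("Refund my order"))  # prints: Refund approved. Order shipped.
```

Registering another `Specialist` adds a capability without editing `handle`. A production orchestrator would more likely use a model to choose specialists and to merge their outputs, rather than relying on keyword rules and string joining.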