Most people are familiar with Wordle by now. It’s that simple yet addictive game where players try to guess a five-letter word within six attempts.

Introducing WordMap: Guess the Word of the Day

A few weeks ago, while using semantic search in a Retrieval-Augmented Generation (RAG) system (for those curious about RAG, there’s more information in a previous insight), an idea emerged.

What if there were a game like Wordle, but instead of guessing a word based on its letter positions, players guessed the word of the day by how close their guesses were in meaning? Players would input various words, and the game would score each one based on its semantic similarity to the target word, evaluating how related the guesses are in terms of meaning or context. The goal would be to guess the word in as few tries as possible, though without a limit on the number of attempts.

This concept led to the creation of ☀️ WordMap!

To develop the game, it was necessary to embed both the user’s input word and the word of the day, then calculate how semantically similar they were. The game would normalize the score between 0 and 100, displaying it in a clean, intuitive user interface.

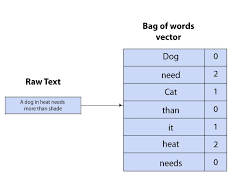

Diagram of the Workflow
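The workflow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `embed` is a hypothetical stand-in that reads hand-made three-dimensional vectors from `TOY_VECTORS` (a real game would call an embedding model instead), and `score_guess` uses a naive scaling of cosine similarity to the 0 to 100 range, not necessarily the one used in WordMap.

```python
import math

# Hypothetical stand-in for a real embedding model: hand-made 3-d vectors.
TOY_VECTORS = {
    "sun": [0.9, 0.1, 0.2],
    "star": [0.8, 0.2, 0.3],
    "spoon": [0.1, 0.9, 0.4],
}


def embed(word):
    """Return the toy vector for a word (a real game would call a model here)."""
    return TOY_VECTORS[word.lower()]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def score_guess(guess, target):
    """Naive 0-100 score: clamp negative similarity to 0, then scale."""
    similarity = cosine_similarity(embed(guess), embed(target))
    return round(max(0.0, similarity) * 100)


print(score_guess("star", "sun") > score_guess("spoon", "sun"))  # True
```

With these toy vectors, "star" scores far closer to "sun" than "spoon" does, which is the kind of feedback the game relies on.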

The Embedding Challenge

RAG systems typically use embeddings to retrieve data relevant to an input. The challenge in this case was dealing with individual words instead of full paragraphs, which leaves the model very little context to work with.

There are two types of embeddings: word-level and sentence-level. While word-level embeddings might seem like the logical choice, sentence-level embeddings were chosen for simplicity.

Word-Level Embeddings

Word-level embeddings represent individual words as vectors in a vector space, with the premise that words with similar meanings tend to appear in similar contexts.

Key Features

- Granularity: Each word gets its own vector.

- Training Data: Models like Word2Vec and GloVe train on large corpora, learning from word usage.

- Example: The words king and queen appear in similar contexts, so their vectors might have a cosine similarity of around 0.92.

However, static word embeddings assign each word a single vector regardless of the sentence it appears in, which is a limitation. For instance, the word “bank” could refer to either a financial institution or the side of a river, yet both senses share one vector.
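The “bank” limitation can be shown with a toy lookup table. The vectors in `STATIC_VECTORS` and the `word_vector` helper are made up for illustration; the point is only that a static table has no way to use the surrounding sentence.

```python
# Hypothetical static embedding table: one fixed vector per word.
STATIC_VECTORS = {
    "bank": [0.5, 0.5],
    "river": [0.1, 0.9],
    "money": [0.9, 0.1],
}


def word_vector(word, context=""):
    """Static lookup: the surrounding context is ignored entirely."""
    return STATIC_VECTORS[word]


financial = word_vector("bank", context="I deposited cash at the bank")
riverside = word_vector("bank", context="We sat on the bank of the river")
print(financial == riverside)  # True: both senses share one vector
```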

Sentence-Level Embeddings

Sentence embeddings represent entire sentences (or paragraphs) as vectors, capturing the meaning by considering the order and relationships between words.

Key Features

- Granularity: The entire sentence gets a single vector.

- Usage: Ideal for tasks like sentence similarity, paraphrase detection, and document classification.

The downside is that sentence embeddings require more computational resources, and compressing a long passage into a single vector can blur fine-grained detail.

Why Sentence Embeddings Were Chosen

The answer lies in simplicity. Most embedding models readily available today are sentence-based, such as OpenAI’s text-embedding-3-large. While Word2Vec could have been an option, it would have required loading a large pre-trained model, and training one from scratch instead would have needed vast amounts of text to be precise.

Sentence embeddings still work for single words, but they come with certain limitations.

Challenges and Solutions

One limitation was accuracy, as the model wasn’t specifically trained to embed single words. To improve precision, the input word was paired with its dictionary definition, although this method has its own drawbacks, especially when a word has multiple meanings.
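As a rough sketch of the pairing step, the text sent to the embedding model could be assembled as below. The `DEFINITIONS` table, the `build_embedding_input` helper, and the `word: definition` format are all assumptions made for illustration; the actual lookup source and format used in the game are not described here.

```python
# Hypothetical mini-dictionary; a real game would look definitions up elsewhere.
DEFINITIONS = {
    "bank": "an institution that holds and lends money",
    "sun": "the star at the center of the solar system",
}


def build_embedding_input(word, definitions=DEFINITIONS):
    """Pair a word with its definition; fall back to the bare word if none is known."""
    word = word.strip().lower()
    definition = definitions.get(word)
    if definition is None:
        return word
    return f"{word}: {definition}"


print(build_embedding_input("Sun"))
# sun: the star at the center of the solar system
```

Note that the table commits “bank” to its financial sense, which is exactly the drawback with words that have multiple meanings.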

Another challenge was that raw semantic similarity scores were relatively low. For instance, semantically close guesses often didn’t exceed a cosine similarity score of 0.45. To avoid discouraging users, the scores were rescaled onto the 0 to 100 range so that close guesses receive visibly high scores.
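One plausible way to do such a rescaling is a clamped linear map. The sketch below assumes a floor of 0.0 and a ceiling of 0.45 (taken from the observation above); the function name `normalize_score` and both parameters are illustrative choices, not the game's confirmed formula.

```python
def normalize_score(similarity, floor=0.0, ceiling=0.45):
    """Map a raw cosine similarity onto 0-100, clamping values outside [floor, ceiling]."""
    scaled = (similarity - floor) / (ceiling - floor)
    clamped = min(1.0, max(0.0, scaled))
    return round(clamped * 100)


print(normalize_score(0.45))  # 100
print(normalize_score(-0.2))  # 0
```

A trade-off of this approach is that anything at or above the ceiling shows as 100, so a game might prefer to reserve the top score for an exact match and handle that case separately.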

The Final Result 🎉

The game is available at WordMap, and it’s ready for players to enjoy!