Unstructured Data Isn’t Just for Embeddings: Hidden Structure Can Enhance RAG

Vector search isn’t designed to handle everything.

In Generative AI (GenAI) applications, particularly in retrieval-augmented generation (RAG) systems, we often rely heavily on vector embeddings to interpret unstructured data. Embeddings convert the semantic content of text documents into high-dimensional vectors, which AI models can easily process. However, there’s a misconception that unstructured text should only ever be embedded into vectors. After all, vectors are the language of AI systems, right?

Yet we seem to have forgotten that, long before GenAI became prevalent, text was analyzed and transformed for machine learning models without defaulting to vector embeddings. While embeddings are powerful and solve many challenges, they weren’t intended to be a one-size-fits-all solution.

This article explores why depending solely on embeddings often falls short and why integrating other text-processing strategies can extract more value from documents. Unstructured data lacks the clear organization that classical algorithms prefer, but that doesn’t mean we should disregard the structure that already exists (such as headings, hyperlinks, and metadata) and simply convert everything into vectors. By ignoring these elements, we risk weakening our systems, reducing efficiency, and missing key pieces of information. When we preserve and leverage this inherent structure, we can create more effective retrieval systems.

The Problem with Relying Solely on Embeddings

Semantic search alone often doesn’t fulfill the complex needs of information retrieval. By incorporating structured methods—such as document relationships, metadata, and thematic context—we can create much more effective retrieval systems. Embeddings are a great tool, but when used alongside structured data, they form part of a more intelligent and capable hybrid approach.

Vector Embeddings Aren’t Perfect

Vector embeddings allow AI systems to represent documents in high-dimensional space, where relationships between textual elements are evaluated through straightforward vector operations like cosine similarity. This helps identify semantically relevant documents, even when their wording differs. However, there’s a critical limitation to relying entirely on embeddings: they are lossy.
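To make the cosine similarity step concrete, here is a minimal sketch in plain Python. The three-dimensional vectors and the document names are made up for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)

# Toy "embeddings" (hypothetical values, not produced by any model).
query = [0.9, 0.1, 0.0]
docs = {
    "doc_a": [0.8, 0.2, 0.0],
    "doc_b": [0.0, 0.1, 0.9],
}

# Rank documents by similarity to the query, most similar first.
ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)
```

Here `doc_a` points in nearly the same direction as the query and ranks first. The single score per document is all the search sees, which is where the lossiness discussed next comes from.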

When we embed a document, we reduce its detailed, rich content into a fixed-length vector, inevitably losing some nuances. Embeddings are good at capturing the core essence of a document, but they might miss subtle details, phrasing, or relationships between sections of the text. This becomes especially problematic when a query requires precision. For example, if a query seeks specific information about a historical event, but the embedding didn’t prioritize that detail adequately, the retrieval might miss it altogether. Embeddings, by nature, abstract data, and like any abstraction, this comes with trade-offs.

The Challenge of Non-Deterministic Retrieval

Another issue with relying solely on embeddings is the non-deterministic nature of the large language models (LLMs) that consume the retrieved text. Even when the same passages are retrieved for a query, the model’s response can vary, potentially leading to inconsistencies. While this variability contributes to the model’s ability to generate human-like language, it can make it harder to surface specific details consistently.

Moreover, embeddings focus on semantic similarity, not necessarily exact matches. Sometimes, precise information is needed, but embeddings prioritize conceptual relevance over specificity. This mismatch can be problematic when the goal is to retrieve exact facts or specific details.

“Unstructured” Data Isn’t Completely Unstructured

Although we call it “unstructured” data, most documents have a significant amount of implicit structure. Documents often contain headings, sections, tables of contents, citations, and hyperlinks. These elements provide valuable context and help readers understand how pieces of information relate to one another. This inherent structure can also be used to enhance retrieval.

In previous articles, I’ve highlighted how documents naturally reference other sources of knowledge through hyperlinks, footnotes, and citations. These references are forms of structure that can be preserved for more accurate retrieval. When text is embedded into vectors, this interconnectivity often gets lost, weakening the retrieval process. By preserving relationships like hyperlinks and citations, we can provide a richer context for retrieving relevant documents.

For instance, when one document links to another, it’s a deliberate indication that the two are related. If both documents are simply embedded as independent vectors, we lose the ability to understand that relationship. Instead, we should preserve these references and utilize them in retrieval, creating a more informed search.

One effective way to improve retrieval using document structure is through a graph-based RAG approach. By building a knowledge graph with connections between documents and concepts, we can traverse this graph during the retrieval process to find related documents that might otherwise be missed by a purely semantic vector search.
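As a rough sketch of the traversal idea, the snippet below starts from the documents a vector search returned and follows their links for a limited number of hops. The `LINKS` dictionary, the document ids, and the `expand_with_links` helper are all hypothetical; a real system would extract links while parsing documents and would likely keep them in a graph store.

```python
from collections import deque

# Hypothetical link graph: document id -> ids it links to or cites.
LINKS = {
    "space_needle": ["seattle_center", "worlds_fair_1962"],
    "seattle_center": ["lower_queen_anne"],
    "worlds_fair_1962": [],
    "lower_queen_anne": [],
}

def expand_with_links(seed_ids, links, max_hops=1):
    """Return the seeds plus every document reachable within max_hops links."""
    visited = set(seed_ids)
    ordered = list(seed_ids)
    queue = deque((doc_id, 0) for doc_id in seed_ids)
    while queue:
        doc_id, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not follow links beyond the hop limit
        for neighbor in links.get(doc_id, []):
            if neighbor not in visited:
                visited.add(neighbor)
                ordered.append(neighbor)
                queue.append((neighbor, hops + 1))
    return ordered

vector_hits = ["space_needle"]  # pretend result of a vector search
one_hop = expand_with_links(vector_hits, LINKS, max_hops=1)
two_hops = expand_with_links(vector_hits, LINKS, max_hops=2)
```

With one hop, the result adds the two documents that `space_needle` links to. With two hops, it also reaches `lower_queen_anne`, which is only linked from `seattle_center`. The hop limit is a design choice: larger values widen recall but pull in less directly related documents.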

Other Strategies for Managing Unstructured Data

Besides vector embeddings, there are several strategies to enhance retrieval by leveraging the structure embedded within documents. Techniques like thematic chunking, metadata preservation, and keyword-based grouping can significantly improve RAG systems. By incorporating these approaches, we can mitigate the limitations of relying solely on embeddings.

Thematic Chunking for Better Retrieval

How we chunk and embed documents plays a vital role in retrieval quality. Documents are often divided into chunks based on size limits alone, with little attention paid to thematic coherence. By chunking along themes or logical sections instead, we can better preserve the content’s meaning and structure.

For example, splitting a product manual into thematic sections like “setup instructions,” “troubleshooting,” and “maintenance” is more effective than splitting it at arbitrary size boundaries. Thematic chunking results in coherent text segments that are better suited for embedding and retrieval.
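One simple way to approximate thematic chunking is to split on the headings a document already has. The sketch below assumes markdown-style `#` headings, which is an assumption about the input format. The `chunk_by_heading` helper and the sample manual are invented for illustration, and other formats would need their own heading detection.

```python
import re

# Assumes markdown-style headings such as "# Title" or "## Subtitle".
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")

def chunk_by_heading(text):
    """Split text into one chunk per heading, keeping the heading as the chunk title."""
    chunks = []
    title, lines = None, []
    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            body = "\n".join(lines).strip()
            if body or title is not None:
                chunks.append({"title": title, "text": body})
            title, lines = match.group(2).strip(), []
        else:
            lines.append(line)
    # Flush the final section (or any text that had no heading at all).
    body = "\n".join(lines).strip()
    if body or title is not None:
        chunks.append({"title": title, "text": body})
    return chunks

manual = """# Setup instructions
Plug in the device.

# Troubleshooting
If the light blinks, restart.

# Maintenance
Clean the filter monthly.
"""

chunks = chunk_by_heading(manual)
titles = [chunk["title"] for chunk in chunks]
```

Each chunk carries its section title alongside its text, so the title can be embedded with the chunk or stored as metadata. Long sections may still need to be split further to fit size limits, ideally at paragraph boundaries within the section.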

Thematic coherence also improves retrieval quality, especially when context is critical. For a query about the Space Needle in Seattle, for example, chunks organized by theme make it more likely that passages about nearby landmarks are retrieved together, even when they don’t mention the Space Needle explicitly.

Preserving Document Metadata

Document metadata—such as author names, publication dates, and section titles—can be crucial for enhancing retrieval. Metadata provides additional cues about relevance, which embeddings alone might miss.

For instance, if a query asks about recent developments in a field, metadata like the publication date becomes critical for retrieving the most up-to-date documents. Additionally, section titles and headings help preserve the document’s internal structure, directing the system to the most relevant sections for a query.
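A minimal sketch of how a publication date could be applied on top of vector search results is shown below. The `Chunk` fields, the similarity scores, and the `filter_recent` helper are hypothetical; many vector stores offer their own metadata filters, which would usually be preferable to filtering in application code.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Chunk:
    text: str
    section: str     # section title preserved from the source document
    published: date  # publication date preserved as metadata
    score: float     # similarity score from a vector search (made up here)

def filter_recent(chunks, published_after):
    """Keep chunks published after the cutoff, best similarity first."""
    recent = [chunk for chunk in chunks if chunk.published > published_after]
    return sorted(recent, key=lambda chunk: chunk.score, reverse=True)

candidates = [
    Chunk("Older survey of the field", "Overview", date(2019, 5, 1), 0.91),
    Chunk("New benchmark results", "Results", date(2024, 3, 10), 0.84),
    Chunk("Recent method comparison", "Methods", date(2023, 11, 2), 0.88),
]

results = filter_recent(candidates, published_after=date(2023, 1, 1))
sections = [chunk.section for chunk in results]
```

The 2019 survey has the highest similarity score but is dropped by the date cutoff, which is the behavior a query about recent developments calls for. Similarity alone would have ranked it first.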

For example, metadata might link a document about the Space Needle to the Lower Queen Anne neighborhood. If this metadata is preserved during retrieval, even documents that don’t explicitly mention the Space Needle but relate to that neighborhood can be found.

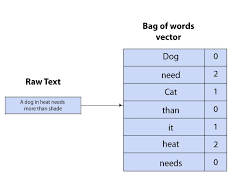

Keyword Linking and Search for Precision

While embeddings capture semantic similarity, keyword-based search can provide precision. Keyword linking prioritizes exact terms or phrases, making it ideal for queries that require precise matches rather than conceptual relevance.

For example, if a user asks for details on “random forests” in machine learning, keyword linking ensures that documents containing the term “random forests” are prioritized, even if the vector search might retrieve more general documents on ensemble methods. Keyword linking and search are more precise and can be combined with embeddings to balance precision with semantic understanding.
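The random forests example could be sketched as a re-ranking step over vector search results. The texts, the scores, the `keyword_boost` helper, and the fixed bonus of 0.2 are all assumptions for illustration; a production system would more likely use a full-text index with a proper keyword ranking function.

```python
def keyword_boost(candidates, phrase, boost=0.2):
    """Add a fixed bonus to candidates whose text contains the exact phrase."""
    needle = phrase.lower()
    rescored = []
    for text, score in candidates:
        bonus = boost if needle in text.lower() else 0.0
        rescored.append((text, score + bonus))
    return sorted(rescored, key=lambda pair: pair[1], reverse=True)

# (text, similarity score) pairs, as a vector search might return them.
vector_hits = [
    ("Ensemble methods combine many weak learners.", 0.82),
    ("Random forests average many decision trees.", 0.78),
    ("Gradient boosting fits trees sequentially.", 0.75),
]

reranked = keyword_boost(vector_hits, "random forests")
top_text = reranked[0][0]
```

Before the boost, the general document on ensemble methods ranks first. After it, the document that actually contains the phrase moves to the top while the others keep their relative order.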

The Hybrid Approach: Combining Structured Retrieval with Embeddings

The key takeaway isn’t that vector embeddings are ineffective; they are essential tools for representing and retrieving unstructured data. However, they are not the only option, and they have limitations. A hybrid retrieval system that incorporates structured approaches, like thematic chunking, metadata preservation, and keyword-based grouping, can significantly improve the relevance and quality of retrieved documents. By combining embeddings with these structured strategies, we create more capable systems that can handle nuanced queries while maintaining precision.

This hybrid approach is especially effective in RAG systems, where retrieving the right information is key. By introducing structure into the retrieval pipeline, we can ensure that AI systems understand not just the meaning of individual documents but also how they relate to one another.
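There are many ways to merge results from different retrievers. One common option, not specific to this article, is reciprocal rank fusion (RRF), where each ranked list contributes 1 / (k + rank) to a document’s score. The sketch below uses hypothetical document ids and the conventional default of k = 60.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of ids: each list adds 1 / (k + rank) to an id's score."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

# Hypothetical outputs of a vector search and a keyword search.
vector_ranking = ["ensembles", "random_forests", "boosting"]
keyword_ranking = ["random_forests", "decision_trees"]

fused = reciprocal_rank_fusion([vector_ranking, keyword_ranking])
```

Because `random_forests` appears in both lists, it rises to the top of the fused ranking even though the vector search alone placed it second. RRF only needs ranks, so it avoids having to calibrate similarity scores against keyword scores.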

A Future Beyond Only Embeddings

Vector embeddings are a powerful tool, but they are not the sole solution for handling unstructured data. Documents contain inherent structure—headings, links, references, and metadata—that can enhance retrieval. By optimizing how we chunk documents, preserving metadata, and using keyword-based strategies, we can improve retrieval accuracy and effectiveness.

Ultimately, combining the semantic depth of embeddings with the precision of structured retrieval methods will create more intelligent, robust AI systems—ones that understand the full context of the data and can deliver more precise, relevant results.