Optimizing Generative AI: Overcoming Adoption Barriers Through Prompt Decomposition

Understand and Control Every Element of Your Workload

Prompt decomposition breaks a task into focused steps. In the summer camp example in this post, the steps cover age, date, and interests, allowing for accurate recommendations based on predefined test cases.

Challenges in Scaling Generative AI

As a Generative AI Specialist at AWS, I've worked with over 50 customers in the last 18 months and encountered numerous generative AI proofs of concept (PoCs). Many teams struggle to move beyond the PoC stage because of several common challenges:

- Low Accuracy: Even with optimal prompt engineering, achieving high accuracy can be elusive. Teams often struggle to further enhance performance.

- High Cost: Operating generative AI solutions can be expensive. Using smaller models might reduce accuracy, and managing costs effectively remains a challenge.

- Lack of Control: While initial prompts may work well, maintaining their effectiveness as projects scale or receive more feedback can be problematic. Minor adjustments can lead to unintended errors.

- High Latency: Systems may become too slow, making them impractical. Switching to faster, smaller models can compromise accuracy, leaving teams uncertain about how to balance speed and performance.

- Lack of Metrics: Limited quantitative measures make it hard to gauge system performance and build stakeholder confidence.

Solution: Prompt Decomposition

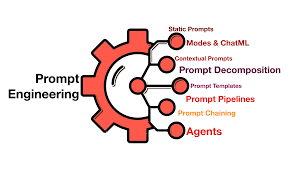

Prompt decomposition offers a solution to these common issues by breaking down complex prompts into manageable parts. While other techniques exist, prompt decomposition stands out for its ability to address these blockers effectively.

Does Prompt Decomposition Really Work?

Yes, it does. This technique has proven effective in unlocking scalability for some of AWS’s largest clients across various sectors. In this blog post, I will share code examples for two use cases that illustrate how prompt decomposition can improve accuracy and reduce latency. Each example will demonstrate changes in cost, latency, and accuracy before and after applying prompt decomposition.

Example Results

- Increasing Accuracy: Using prompt decomposition improved accuracy from 60% to 100%, while also nearly halving costs.

- Reducing Latency: We achieved a 50% reduction in response time and increased accuracy by applying prompt decomposition.

What is Prompt Decomposition?

Prompt decomposition involves breaking down a complex prompt into smaller components. A large task becomes a sequence of simpler steps, each focused on one part of the problem, which improves execution efficiency.

Example: Summer Camp Recommendation System

Consider a system recommending summer camps based on a child’s age, desired camp date, and interests. The process can be decomposed into three steps:

- Extract Information: Gather the child’s age, requested camp date, and camp availability.

- Find Possible Camps: Match the child’s age and date request to available camps.

- Recommend a Camp: Suggest a suitable camp based on the child’s interests.
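The three steps above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not the code from the repository: `call_llm` is a hypothetical stand-in for whatever model client you use, the `Camp` fields are invented for the example, and the extraction step is assumed to return JSON. Step 2 is written as ordinary Python here, since matching an age and a date against a list needs no model call.

```python
import json
from dataclasses import dataclass
from datetime import date
from typing import Callable, List

# Hypothetical signature: takes a prompt string, returns the model's text reply.
LLMCall = Callable[[str], str]


@dataclass
class Camp:
    # Illustrative fields only; a real camp catalog will look different.
    name: str
    min_age: int
    max_age: int
    start: date
    end: date
    theme: str


def extract_request(message: str, call_llm: LLMCall) -> dict:
    """Step 1: ask the model only for the structured facts."""
    prompt = (
        "Extract the child's age, requested camp date (YYYY-MM-DD), and "
        "interests from the message below. Reply with JSON using the keys "
        '"age", "date", and "interests".\n\n' + message
    )
    return json.loads(call_llm(prompt))


def find_possible_camps(age: int, day: date, camps: List[Camp]) -> List[Camp]:
    """Step 2: plain filtering on age range and date range."""
    return [
        c for c in camps
        if c.min_age <= age <= c.max_age and c.start <= day <= c.end
    ]


def recommend_camp(interests: str, candidates: List[Camp], call_llm: LLMCall) -> str:
    """Step 3: ask the model to choose among camps that already fit."""
    if not candidates:
        return "No camp available"
    options = "\n".join(f"- {c.name}: {c.theme}" for c in candidates)
    prompt = (
        f"A child is interested in: {interests}\n"
        "Choose the best match from these camps and reply with its name only:\n"
        f"{options}"
    )
    return call_llm(prompt).strip()


def recommend(message: str, camps: List[Camp], call_llm: LLMCall) -> str:
    """Run the three steps in sequence."""
    request = extract_request(message, call_llm)
    day = date.fromisoformat(request["date"])
    candidates = find_possible_camps(int(request["age"]), day, camps)
    return recommend_camp(request["interests"], candidates, call_llm)
```

Because each step has its own inputs and outputs, each one can be checked against its own test cases, and a failure points to a specific step rather than to one large prompt.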

Parallel Execution

For particularly lengthy prompts, decomposing them into parallel tasks can significantly reduce execution time. For example, a prompt initially taking 43 seconds can be broken into three parallel parts, reducing the total execution time to under 10 seconds without sacrificing accuracy.
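Here is a minimal sketch of running independent sub-prompts in parallel, using Python's standard `ThreadPoolExecutor`. The names `run_parallel` and `slow_fake_llm` are hypothetical and not taken from the repository; `slow_fake_llm` only sleeps to simulate a model call, so the timings it produces say nothing about real workloads.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_parallel(
    prompts: List[str],
    call_llm: Callable[[str], str],
    max_workers: int = 3,
) -> List[str]:
    """Send each sub-prompt on its own thread; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(call_llm, prompts))


def slow_fake_llm(prompt: str) -> str:
    """Stand-in for a model call: sleeps briefly to simulate network latency."""
    time.sleep(0.2)
    return f"answer to: {prompt}"
```

Calling `run_parallel(["part 1", "part 2", "part 3"], slow_fake_llm)` takes roughly as long as one call rather than three. Threads suit this case because the work is waiting on a network response, not computing locally. This only helps when the parts do not depend on each other's output.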

Conclusion

Prompt decomposition is a powerful technique to overcome common challenges in generative AI projects. By breaking down complex tasks, teams can improve accuracy, manage costs and latency, and gain better control and metrics, leading to more scalable and reliable solutions.

Ready to Build?

For those ready to dive in, full code examples are available in the GitHub repository linked below. The repository includes a Jupyter Notebook (Prompt_Decomposition.ipynb) with two examples: one focused on accuracy and the other on latency. An updated evaluation function for multithreaded calls to Amazon Bedrock is also included.

Starting with Evaluation

Automated evaluation is crucial for assessing generative AI performance. Begin with a gold standard set of input/output pairs created by humans to serve as a benchmark. Avoid using generative AI to create this set, as it may introduce inaccuracies.

The evaluation function compares the correct and generated answers, scoring them similarly to how a teacher would grade student work. Here’s a sample evaluation prompt:

```python
test_prompt_template_system = """You are a detail-oriented teacher.
You are grading an exam, looking at a correct answer and a student
submitted answer. Your goal is to score the student answer based
on how close it is to the correct answer. This is a pass/fail test.
If the two answers are basically the same, the score should be 100.
Minor things like punctuation, capitalization, or spelling should
not impact the score.
If the two answers are different, then the score should be 0.
Please use your score in a 'score' XML tag, and any reasoning
in a 'reason' XML tag.
"""
```
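Once the grader replies, the score and reasoning have to be pulled out of its text and rolled up into a single number. The sketch below shows one way to do that, assuming the model answers with literal `<score>` and `<reason>` tags as the prompt requests. The function names `parse_grade` and `accuracy` are hypothetical, and treating an unparseable reply as a failure is my own choice for the example.

```python
import re
from typing import Iterable, Optional, Tuple


def parse_grade(reply: str) -> Tuple[Optional[int], str]:
    """Pull the <score> and <reason> values out of the grader's reply."""
    score_match = re.search(r"<score>\s*(\d+)\s*</score>", reply)
    reason_match = re.search(r"<reason>(.*?)</reason>", reply, re.DOTALL)
    score = int(score_match.group(1)) if score_match else None
    reason = reason_match.group(1).strip() if reason_match else ""
    return score, reason


def accuracy(scores: Iterable[Optional[int]]) -> float:
    """Percentage of test cases that passed (score of 100).

    A missing score (None) counts as a fail.
    """
    scores = list(scores)
    if not scores:
        return 0.0
    passed = sum(1 for s in scores if s == 100)
    return 100.0 * passed / len(scores)
```

Running every gold-standard pair through the grader and feeding the parsed scores to `accuracy` gives one percentage you can track before and after each prompt change.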

Task-Based Decomposition Example

For a summer camp recommendation system, we decompose the task into steps that focus on age, date, and interests, allowing for accurate recommendations based on predefined test cases.

Volume-Based Decomposition Use Case

To handle long prompts efficiently, such as analyzing an entire novel, we break the task into smaller, parallel parts, significantly improving execution time and accuracy.
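The first step in volume-based decomposition is splitting the long input into pieces. Here is a minimal sketch, assuming the text separates paragraphs with blank lines and that a character budget is a reasonable proxy for prompt size. `split_into_chunks` is a hypothetical helper, and the repository may split differently (by chapter, for instance).

```python
from typing import List


def split_into_chunks(text: str, max_chars: int) -> List[str]:
    """Group paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars is kept whole rather than
    being cut mid-sentence, so a chunk can exceed the budget in that case.
    """
    chunks: List[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if current and len(candidate) > max_chars:
            # Adding this paragraph would overflow: close the current chunk.
            chunks.append(current)
            current = paragraph
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent as its own prompt in parallel, with the partial answers combined in a final step. Splitting on paragraph boundaries keeps sentences intact, but a question whose answer spans two chunks may need overlapping chunks or a combining prompt that sees all partial answers.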

Prompt Decomposition

Creating a flowchart for your task and selecting the best tools for each step can greatly enhance your generative AI workflows. Explore the full code in the GitHub repository, and feel free to comment with questions or share your own experiences. Let’s build something amazing by breaking it down into manageable pieces!