A Strategic Approach to Governing Enterprise AI Systems

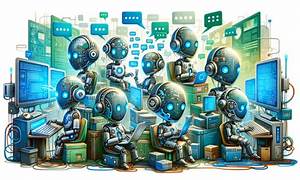

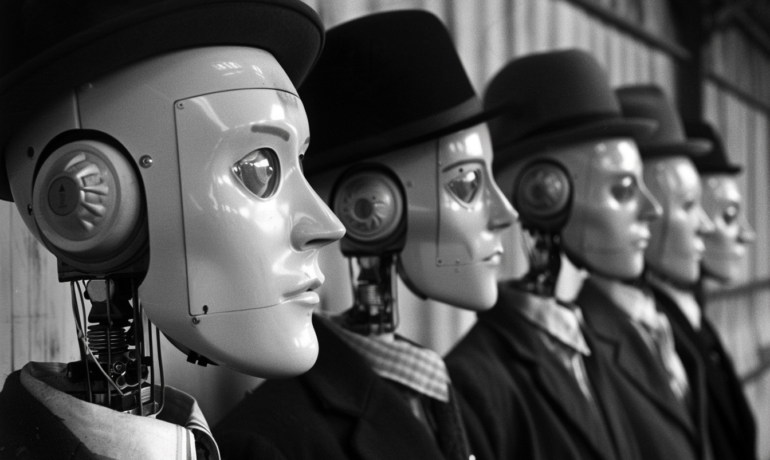

The Imperative of AI Governance in Modern Enterprises

Effective data governance is widely acknowledged as a critical component of deploying enterprise AI applications. However, translating governance principles into actionable strategies remains a complex challenge. This article presents a structured approach to AI governance, offering foundational principles that organizations can adapt to their needs. While not exhaustive, this framework provides a starting point for managing AI systems responsibly.

Defining Data Governance in the AI Era

At its core, data governance encompasses the policies and processes that dictate how organizations manage data, ensuring proper storage, access, and usage. Two key roles facilitate governance:

Traditional data systems operate within deterministic governance frameworks, where structured schemas and well-defined hierarchies enable clear rule enforcement. However, AI introduces non-deterministic challenges (unstructured data, probabilistic decision-making, and evolving models) that require a more adaptive governance approach.

Core Principles for Effective AI Governance

To navigate these complexities, organizations should adopt the following best practices:

Multi-Agent Architectures: A Governance Enabler

Modern AI applications should embrace agent-based architectures, where multiple AI models collaborate to accomplish tasks. This approach draws from decades of distributed systems and microservices best practices, ensuring scalability and maintainability. Key developments facilitating this shift include:

By treating AI agents as modular components, organizations can apply service-oriented governance principles, improving oversight and adaptability.

Deterministic vs. Non-Deterministic Governance Models

Traditional (Deterministic) Governance

AI (Non-Deterministic) Governance

Interestingly, human governance has long managed non-deterministic actors (people), offering valuable lessons for AI oversight.
Legal systems, for instance, incorporate checks and balances, acknowledging human fallibility while maintaining societal stability.

Mitigating AI Hallucinations Through Specialization

Large language models (LLMs) are prone to hallucinations: generating plausible but incorrect responses. Mitigation strategies include:

This mirrors real-world expertise: just as a medical specialist provides domain-specific advice, AI agents should operate within bounded competencies.

Adversarial Validation for AI Governance

Inspired by Generative Adversarial Networks (GANs), AI governance can employ:

This adversarial dynamic improves quality over time, much like auditing processes in human systems.

Knowledge Management: The Backbone of AI Governance

Enterprise knowledge is often fragmented, residing in:

To govern this effectively, organizations should:

Ethics, Safety, and Responsible AI Deployment

AI ethics remains a nuanced challenge due to:

Best practices include:

Conclusion: Toward Responsible and Scalable AI Governance

AI governance demands a multi-layered approach, blending:

✔ Technical safeguards (specialized agents, adversarial validation).
✔ Process rigor (knowledge certification, human oversight).
✔ Ethical foresight (bias mitigation, risk-aware automation).

By learning from both software engineering and human governance paradigms, enterprises can build AI systems that are effective, accountable, and aligned with organizational values. The path forward requires continuous refinement, but with strategic governance, AI can drive innovation while minimizing unintended consequences.
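
As an illustration of two of the technical safeguards named in this article, specialized agents with bounded competencies and adversarial validation, here is a minimal Python sketch. It is not an implementation the article prescribes: the `Agent`, `Verdict`, `route`, and `governed_answer` names, the stub agents, and the citation-based reviewer rule are all hypothetical, and the reviewer loop is only a loose analogy to GANs (a second component challenges the first), not an actual GAN.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Optional


@dataclass(frozen=True)
class Agent:
    """A specialized agent with an explicitly declared competency (hypothetical)."""
    name: str
    topics: FrozenSet[str]          # the only topics this agent may answer
    answer: Callable[[str], str]    # stand-in for a call to a model


@dataclass(frozen=True)
class Verdict:
    """Outcome of the reviewer's check on a draft answer."""
    approved: bool
    reason: str


Reviewer = Callable[[str, str], Verdict]


def route(topic: str, agents: List[Agent]) -> Optional[Agent]:
    """Return the first agent whose declared competency covers the topic."""
    for agent in agents:
        if topic in agent.topics:
            return agent
    return None


def governed_answer(topic: str, question: str, agents: List[Agent],
                    reviewer: Reviewer, max_attempts: int = 2) -> str:
    """Answer only within a bounded competency, and only if a reviewer approves."""
    agent = route(topic, agents)
    if agent is None:
        # No specialist covers the topic: escalate instead of guessing.
        return f"ESCALATE: no agent covers topic '{topic}'"
    verdict = Verdict(False, "no attempt made")
    for _ in range(max_attempts):
        draft = agent.answer(question)
        verdict = reviewer(question, draft)
        if verdict.approved:
            return draft
    # The reviewer never approved: hand off to a human.
    return f"ESCALATE: reviewer rejected draft ({verdict.reason})"


def citation_reviewer(question: str, draft: str) -> Verdict:
    """Assumed review rule: a draft must cite a source to be approved."""
    if "[source:" in draft:
        return Verdict(True, "cites a source")
    return Verdict(False, "missing source citation")


# Stub agents with canned answers, standing in for real model calls.
AGENTS = [
    Agent("hr", frozenset({"hr"}),
          lambda q: "See the leave policy [source: HR-HANDBOOK]"),
    Agent("finance", frozenset({"finance"}),
          lambda q: "Budgets are probably fine"),
]

if __name__ == "__main__":
    print(governed_answer("hr", "How much leave do I get?", AGENTS, citation_reviewer))
    print(governed_answer("finance", "Is the budget approved?", AGENTS, citation_reviewer))
    print(governed_answer("legal", "Can we sign this?", AGENTS, citation_reviewer))
```

The design choice worth noting is that both failure paths escalate rather than answer: an out-of-scope topic never reaches a model, and a rejected draft never reaches the user. In a real deployment the reviewer would typically be a separate model or rule set, and the escalation string would be replaced by a hand-off to a human.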