Building a trusted AI system starts with ensuring transparency in how decisions are made. Explainable AI is vital not only for addressing trust issues within organizations but also for navigating regulatory challenges.

According to research from Forrester, many business leaders express concerns over AI, particularly generative AI, which surged in popularity following the 2022 release of ChatGPT by OpenAI.

“AI faces a trust issue,” explained Forrester analyst Brandon Purcell, underscoring the need for explainability to foster accountability. He highlighted that explainability helps stakeholders understand how AI systems generate their outputs. “Explainability builds trust,” Purcell stated at the Forrester Technology and Innovation Summit in Austin, Texas. “When employees trust AI systems, they’re more inclined to use them.”

Implementing explainable AI does more than encourage usage within an organization; according to Purcell, it also helps mitigate regulatory risk.

Explainability is crucial for compliance, especially under regulations like the EU AI Act. Forrester analyst Alla Valente emphasized the importance of integrating accountability, trust, and security into AI efforts. “Don’t wait for regulators to set standards—ensure you’re already meeting them,” she advised at the summit.

Purcell noted that explainable AI varies depending on whether the AI model is predictive, generative, or agentic.

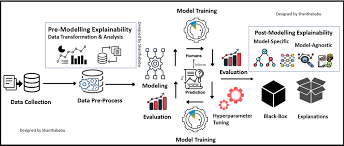

Building an Explainable AI System

AI explainability encompasses several key elements, including reproducibility, observability, transparency, interpretability, and traceability.

For predictive models, transparency and interpretability are paramount. Transparency involves using “glass-box modeling,” where users can see how the model analyzed the data and arrived at its predictions. This approach is likely to be a regulatory requirement, especially for high-risk applications.
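To make "glass-box" concrete, here is a minimal sketch of one common glass-box form: an additive linear scorer, whose prediction is an intercept plus a weighted sum of inputs. Because each feature's contribution is just its weight times its value, a user can see exactly how a prediction was reached. The class name `LinearScorer` and the feature names are hypothetical illustrations, not a specific product or regulatory standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Glass-box model: prediction = intercept + sum(weight * feature value)."""
    intercept: float
    weights: dict[str, float]

    def explain(self, features: dict[str, float]) -> dict[str, float]:
        # Each feature's contribution is weight * value, so the reasoning is fully visible.
        return {name: w * features[name] for name, w in self.weights.items()}

    def predict(self, features: dict[str, float]) -> float:
        # The prediction is exactly the intercept plus the per-feature contributions.
        return self.intercept + sum(self.explain(features).values())


# Hypothetical applicant scored by a hypothetical model.
scorer = LinearScorer(intercept=1.0, weights={"income": 0.5, "debt_ratio": -2.0})
applicant = {"income": 4.0, "debt_ratio": 0.25}
print(scorer.explain(applicant))  # {'income': 2.0, 'debt_ratio': -0.5}
print(scorer.predict(applicant))  # 2.5
```

The same `explain` output that justifies a prediction can be shown to a reviewer or auditor, which is the practical appeal of glass-box models over opaque ones.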

Interpretability, the second element, is useful for lower-risk cases such as fraud detection or explaining loan decisions. Techniques like partial dependence plots show how changing a single input affects a predictive model's output, averaged over the data.
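The idea behind a partial dependence plot can be sketched in a few lines: for each candidate value of one feature, set that feature to the value in every row of the dataset, run the model, and average the predictions. The sketch below assumes the model is any Python callable that maps a dict of features to a number; `partial_dependence` and `toy_model` are hypothetical names. In practice, libraries such as scikit-learn provide this via `sklearn.inspection.partial_dependence`.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, float]


def partial_dependence(
    model: Callable[[Row], float],
    data: Sequence[Row],
    feature: str,
    grid: Sequence[float],
) -> List[Tuple[float, float]]:
    """Return (grid value, average prediction) pairs for one feature."""
    curve = []
    for value in grid:
        # Force the chosen feature to `value` in every row; other features keep their observed values.
        modified = [{**row, feature: value} for row in data]
        # The mean prediction over the dataset is the partial dependence at this grid point.
        curve.append((value, mean(model(r) for r in modified)))
    return curve


def toy_model(r: Row) -> float:
    # Hypothetical model with an interaction between x and y.
    return 2 * r["x"] + r["x"] * r["y"]


data = [{"x": 1.0, "y": 0.0}, {"x": 3.0, "y": 2.0}]
print(partial_dependence(toy_model, data, "x", [0.0, 1.0, 2.0]))
# [(0.0, 0.0), (1.0, 3.0), (2.0, 6.0)]
```

Plotting the returned pairs gives the partial dependence curve: a rising line here tells a reviewer that, on average, larger `x` pushes the prediction up.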

“With predictive AI, explainability focuses on the model itself,” Purcell noted. “It’s one area where you can open the hood and examine how it works.”

In contrast, generative AI models are often more opaque, making explainability harder. Businesses can address this by documenting the entire system, a process known as traceability. For those using models from vendors like Google or OpenAI, tools such as transparency indexes and model cards (which detail a model's use case, limitations, and performance) are valuable resources.
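As a rough illustration of traceability, the sketch below pairs a minimal model card (holding the use case, limitations, and performance fields the paragraph mentions) with an append-only log that ties each generated output to the model and prompt that produced it. The schema, class names, and example values are hypothetical assumptions, not a vendor's model-card format or a standard.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelCard:
    # Hypothetical minimal schema mirroring the fields named in the text.
    model_name: str
    use_case: str
    limitations: list[str]
    performance: dict[str, float]


@dataclass
class TraceLog:
    """Append-only record linking each output to the model and input that produced it."""
    card: ModelCard
    entries: list[dict] = field(default_factory=list)

    def record(self, prompt: str, output: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model": self.card.model_name,
            "prompt": prompt,
            "output": output,
        }
        self.entries.append(entry)
        return entry

    def export(self) -> str:
        # Serialize the card together with the trace so reviewers see both in one document.
        return json.dumps({"model_card": asdict(self.card), "trace": self.entries}, indent=2)


card = ModelCard("vendor-llm-v1", "drafting support emails", ["may state incorrect facts"], {"accuracy": 0.9})
log = TraceLog(card)
log.record("Summarize ticket 17", "Customer reports a login error.")
print(log.export())
```

Keeping the card and the trace together means that any output can be traced back to a documented model version with known limitations.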

Lastly, for agentic AI systems, which autonomously pursue goals, reproducibility is key. Businesses must ensure that the model’s outputs can be consistently replicated with similar inputs before deployment. These systems, like self-driving cars, will require extensive testing in controlled environments before being trusted in the real world.
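A basic pre-deployment reproducibility check can be sketched as follows: run the system several times on identical inputs, including any random seed, and require every run to produce the same trajectory. This covers only the simplest case (identical rather than merely similar inputs), and `hypothetical_agent` is a stand-in whose only randomness comes from a seeded generator, not a real agent framework.

```python
import random
from typing import Callable, List


def hypothetical_agent(goal: str, seed: int) -> List[str]:
    # Stand-in agent: all randomness flows through a generator seeded by the caller.
    rng = random.Random(seed)
    steps = ["search", "plan", "act", "verify"]
    rng.shuffle(steps)
    return [f"{goal}:{step}" for step in steps]


def is_reproducible(
    agent: Callable[[str, int], List[str]], goal: str, seed: int, runs: int = 5
) -> bool:
    """Run the agent `runs` times on identical inputs; True only if all trajectories match."""
    baseline = agent(goal, seed)
    return all(agent(goal, seed) == baseline for _ in range(runs - 1))


print(is_reproducible(hypothetical_agent, "book_flight", seed=42))  # True
```

A real agent that calls external services or unseeded sampling would typically fail this check until those sources of variation are pinned down or mocked, which is part of why controlled test environments matter.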

“Agentic systems will need to rack up millions of virtual miles before we let them loose,” Purcell concluded.
