OpenAI’s GPT-4o: Advancing the Frontier of AI

OpenAI’s GPT-4o builds upon the foundation of its predecessors with significant enhancements, including improved multimodal capabilities and faster performance.

Evolution of ChatGPT and Its Underlying Models

Since the launch of ChatGPT in late 2022, both the chatbot interface and its underlying models have seen several major updates. GPT-4 launched in March 2023. Its successor, GPT-4o, was released in May 2024, followed by GPT-4o mini in July 2024.

GPT-4 and GPT-4o (with “o” standing for “omni”) are advanced generative AI models developed for the ChatGPT interface. Both models generate natural-sounding text in response to user prompts and can engage in interactive, back-and-forth conversations, retaining memory and context to inform future responses.

TechTarget Editorial tested these models by using them within ChatGPT, reviewing OpenAI’s informational materials and technical documentation, and analyzing user reviews on Reddit, tech blogs, and the OpenAI developer forum.

Differences Between the GPTs

While GPT-4o and GPT-4 share similarities, including vision and audio capabilities, a 128,000-token context window, and a knowledge cutoff in late 2023, they also differ significantly in multimodal capabilities, performance, efficiency, pricing, and language support.
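To make the shared 128,000-token context window concrete, here is a minimal sketch of a pre-flight check on prompt size. The four-characters-per-token ratio is only a rough rule of thumb for English text and is an assumption, as are the function names and the output reserve; a real tokenizer would give exact counts.

```python
CONTEXT_WINDOW_TOKENS = 128_000   # context window quoted in the article
ASSUMED_CHARS_PER_TOKEN = 4       # assumption: rough average for English text


def rough_token_estimate(text: str) -> int:
    """Estimate the token count from character length (hypothetical helper)."""
    # Ceiling division, so a partial token still counts as one token.
    return -(-len(text) // ASSUMED_CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Check whether the prompt plus a reserve for the reply fits the window."""
    return rough_token_estimate(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    prompt = "Summarize the following report. " * 1_000
    print(rough_token_estimate(prompt), fits_in_context(prompt))
```

Because the estimate is approximate, a margin such as `reserved_for_output` helps avoid requests that land just over the limit.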

Introduction of GPT-4o Mini

On July 18, 2024, OpenAI introduced GPT-4o mini, a smaller, cost-efficient model designed to replace GPT-3.5. GPT-4o mini outperforms GPT-3.5 while being more affordable. Aimed at developers who want to build AI applications without the compute costs of larger models, GPT-4o mini is positioned as a competitor to other small language models, such as Anthropic’s Claude Haiku.

All users on ChatGPT Free, Plus, and Team plans received access to GPT-4o mini at launch, with ChatGPT Enterprise users expected to gain access shortly afterward. The model supports text and vision, and OpenAI plans to add support for other multimodal inputs like video and audio.

Multimodality

Multimodal AI models process multiple data types such as text, images, and audio. Both GPT-4 and GPT-4o support multimodality in the ChatGPT interface, allowing users to create and upload images and use voice chat. However, their approaches to multimodality differ significantly.

GPT-4 is primarily a text model. To handle non-text data, it relies on other OpenAI models, such as DALL-E for image generation and Whisper for speech recognition. In contrast, GPT-4o was designed for multimodality from the ground up, with all inputs and outputs processed by a single neural network. This design makes GPT-4o faster for tasks involving multiple data types, such as image analysis.
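For developers, the single-model design means one request can carry both text and an image. The sketch below only builds such a request body and sends nothing over the network. It follows the message layout that OpenAI documents for vision input in its Chat Completions API, as best understood here; the function name and the example URL are hypothetical.

```python
import json


def build_image_question(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions-style body with one text part and one image part.

    Hypothetical helper for illustration; it does not call any API.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user message can mix content parts of different types.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_image_question(
        "What is shown in this picture?",
        "https://example.com/photo.png",  # placeholder URL
    )
    print(json.dumps(body, indent=2))
```

Keeping payload construction separate from the network call makes the structure easy to inspect and test before any tokens are billed.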

Controversy Over GPT-4o’s Voice Capabilities

During the GPT-4o launch demo, a voice called Sky, which sounded similar to Scarlett Johansson’s AI character in the film “Her,” sparked controversy. Johansson, who had declined a previous request to use her voice, announced legal action. In response, OpenAI paused the use of Sky’s voice, highlighting ethical concerns over voice likenesses and artists’ rights in the AI era.

Performance and Efficiency

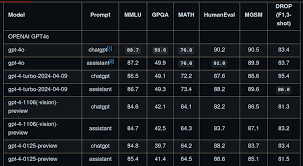

GPT-4o is designed to be quicker and more efficient than GPT-4. OpenAI claims GPT-4o is twice as fast as the most recent version of GPT-4. In TechTarget Editorial’s tests, GPT-4o generally responded faster than GPT-4, although not quite at double the speed. OpenAI’s testing indicates GPT-4o outperforms GPT-4 on major benchmarks, including math, language comprehension, and vision understanding.

Pricing

GPT-4o’s improved efficiency translates to lower costs. For API users, GPT-4o is billed per million input tokens and per million output tokens, at lower rates than GPT-4. GPT-4o mini is even cheaper, at $0.15 per million input tokens and $0.60 per million output tokens.
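Per-million-token pricing is easier to reason about with a quick calculation. The following sketch uses only the GPT-4o mini rates quoted above; the function name and the sample token counts are illustrative assumptions, and other models’ rates would need to be passed in explicitly.

```python
# GPT-4o mini rates quoted in the article, in US dollars per million tokens.
GPT_4O_MINI_INPUT_USD_PER_MILLION = 0.15
GPT_4O_MINI_OUTPUT_USD_PER_MILLION = 0.60


def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_rate: float = GPT_4O_MINI_INPUT_USD_PER_MILLION,
    output_rate: float = GPT_4O_MINI_OUTPUT_USD_PER_MILLION,
) -> float:
    """Estimate the cost of one request from its token counts (hypothetical helper)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Input and output tokens are billed at separate per-million rates.
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # Example: a request with 2,000 input tokens and 500 output tokens.
    print(f"${estimate_cost_usd(2_000, 500):.6f}")
```

Since output tokens cost four times as much as input tokens at these rates, long responses dominate the bill more quickly than long prompts.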

GPT-4o will power the free version of ChatGPT, offering multimodality and higher-quality text responses to free users. GPT-4 remains available only to paying customers on plans starting at $20 per month.

Language Support

GPT-4o offers better support for non-English languages compared to GPT-4, particularly for languages that don’t use Western alphabets. This improvement addresses longstanding issues in natural language processing, making GPT-4o more effective for global applications.

Is GPT-4o Better Than GPT-4?

In most cases, GPT-4o is superior to GPT-4, with improved speed, lower costs, and multimodal capabilities. However, some users may still prefer GPT-4 for its stability and familiarity, especially in critical applications. Transitioning to GPT-4o may involve significant changes for systems tightly integrated with GPT-4.

What Does GPT-4o Mean for ChatGPT Users?

GPT-4o’s introduction brings significant changes, including the availability of multimodal capabilities for all users. While these advancements may make the Plus subscription less appealing, paid plans still offer benefits like higher usage caps and faster response times.

As the AI community looks forward to GPT-5, expected later this summer, the introduction of GPT-4o sets a new standard for AI capabilities, offering powerful tools for users and developers alike.