Last week, Databricks unveiled Databricks LakeFlow, a new tool designed to unify all aspects of data engineering, from data ingestion and transformation to orchestration.

What is Databricks LakeFlow?

According to Databricks, LakeFlow simplifies the creation and operation of production-grade data pipelines, making it easier for data teams to handle complex data engineering tasks. The stated goal is to meet the growing demand for reliable data and AI by replacing a patchwork of separate tools with a single, streamlined workflow.

The Current State of Data Engineering

Data engineering is crucial for democratizing data and AI within businesses, yet it remains a challenging field. Data teams must often deal with:

- Siloed Systems: Ingesting data from various isolated systems such as databases and enterprise applications through complex and often fragile connectors.

- Data Preparation: Managing intricate data preparation logic, where failures or latency spikes can disrupt operations and dissatisfy customers.

- Disparate Tools: Deploying pipelines and monitoring data quality typically require multiple fragmented tools, leading to low data quality, reliability issues, high costs, and a growing backlog of work.

How LakeFlow Addresses These Challenges

LakeFlow offers a unified experience for all aspects of data engineering, simplifying the entire process:

- Ingestion at Scale: LakeFlow allows data teams to easily ingest data from traditional databases like MySQL, Postgres, and Oracle, as well as enterprise applications such as Salesforce, Dynamics, SharePoint, Workday, NetSuite, and Google Analytics.

- Automation: It automates the deployment, operation, and monitoring of production pipelines with built-in support for CI/CD and advanced workflows that include triggering, branching, and conditional execution.
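
To make "triggering, branching, and conditional execution" concrete, here is a minimal sketch in plain Python. It does not use any LakeFlow or Databricks API; the `Task` class, the `run_workflow` function, and the four task functions are hypothetical names invented for illustration. Calling `run_workflow` stands in for a trigger, and the optional `condition` on a task stands in for a branch that runs only when its predicate holds.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Task:
    """One step in a toy workflow (hypothetical; not a LakeFlow type)."""
    name: str
    action: Callable[[Dict], None]
    # Optional predicate: the task runs only if it returns True
    # for the shared context. None means "always run".
    condition: Optional[Callable[[Dict], bool]] = None


def run_workflow(tasks: List[Task], context: Dict) -> List[str]:
    """Run tasks in order, skipping any whose condition is not met.

    Returns the names of the tasks that actually ran.
    """
    executed = []
    for task in tasks:
        if task.condition is not None and not task.condition(context):
            continue  # branch not taken
        task.action(context)
        executed.append(task.name)
    return executed


def ingest(ctx: Dict) -> None:
    # Stand-in for pulling rows from a source system.
    ctx["rows"] = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}]


def validate(ctx: Dict) -> None:
    # Collect rows that are missing a required value.
    ctx["bad_rows"] = [r for r in ctx["rows"] if r["amount"] is None]


def alert(ctx: Dict) -> None:
    ctx["alert"] = f"{len(ctx['bad_rows'])} row(s) failed validation"


def transform(ctx: Dict) -> None:
    # Sum only the rows that have a value.
    ctx["total"] = sum(r["amount"] for r in ctx["rows"] if r["amount"] is not None)


tasks = [
    Task("ingest", ingest),
    Task("validate", validate),
    # Conditional step: runs only when validation found bad rows.
    Task("alert", alert, condition=lambda ctx: len(ctx["bad_rows"]) > 0),
    Task("transform", transform),
]

context: Dict = {}
executed = run_workflow(tasks, context)
print(executed)
```

In this toy run the sample data contains one row with a missing amount, so the `alert` step executes. With clean data it would be skipped while the remaining steps still run. A production orchestrator adds scheduling, retries, and monitoring on top of this basic idea.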

Key Features of LakeFlow

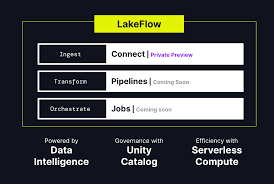

LakeFlow comprises three main components: LakeFlow Connect, LakeFlow Pipelines, and LakeFlow Jobs.

- LakeFlow Connect: Incorporating capabilities from Arcion, which Databricks acquired last year, LakeFlow Connect offers simple and scalable data ingestion through native connectors that are integrated with Unity Catalog for data governance.

- LakeFlow Pipelines: Built on Databricks’ Delta Live Tables technology, LakeFlow Pipelines enables data teams to implement data transformation and ETL in SQL or Python for automated, real-time data pipelines.

- LakeFlow Jobs: This component provides automated orchestration, data health monitoring, and delivery, covering everything from scheduling notebooks and SQL queries to machine learning training and automatic dashboard updates.

Availability

LakeFlow is entering preview soon, starting with LakeFlow Connect. Customers can register to join the waitlist today.