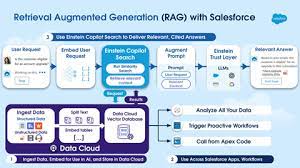

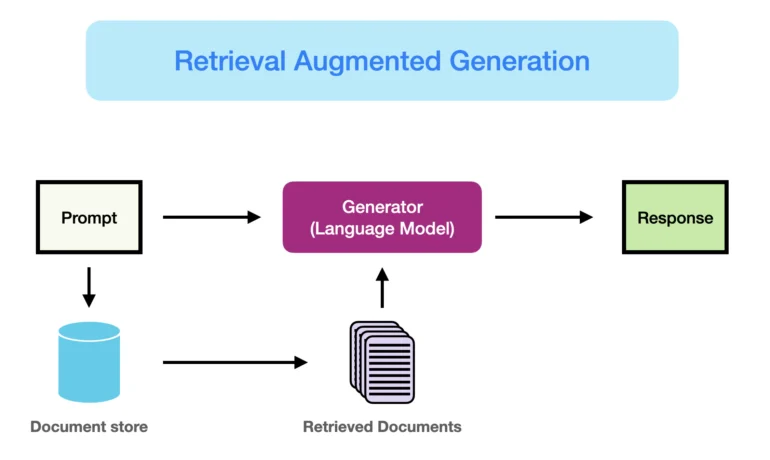

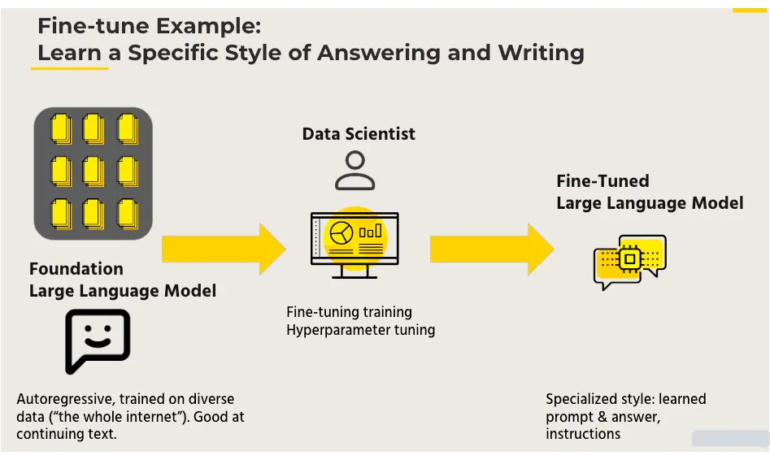

Salesforce has unveiled the groundbreaking Einstein 1 Platform, which it positions as a transformative force in enterprise AI. By integrating data, AI, and CRM, the platform is designed to boost productivity and build trusted customer experiences. It meets the demands of a new AI era by managing large volumes of disconnected data, offering flexibility in AI model selection, and integrating with existing workflows while prioritizing customer trust. Einstein 1 is a game changer from Salesforce.

What is the Salesforce Einstein 1 Platform? Einstein 1 combines a mix of artificial intelligence tools, and it mirrors the way the core Salesforce platform is built, with both standard and custom components. Out-of-the-box AI features include sales email generation in Sales Cloud and service replies in Service Cloud. The platform consolidates data, AI, CRM, development, and security into a single, unified platform. This gives IT professionals, administrators, and developers an extensible AI foundation for rapid app development and automation.

To streamline change and release management, DevOps Center provides centralized oversight of project work at every stage of the application lifecycle management process, supporting secure testing with data and deployment of AI apps. Salesforce also offers customizable security and privacy add-ons. These include data monitoring and masking, backup, and support for complying with evolving privacy and encryption regulations. Grounded in the Einstein 1 Platform, Salesforce AI uses customer data to deliver trusted, customizable predictive and generative experiences tailored to diverse business needs.

What are the Einstein platform products? Commerce Cloud Einstein is a generative AI tool for delivering personalized commerce experiences across the entire buyer's journey. It can automatically generate recommendations, content, and communications based on real-time data from Data Cloud.

Einstein 1 is a comprehensive solution for organizations seeking a unified 360-degree view of their customers, and Silverline expertise can help maximize its AI potential and scalability. Einstein 1 Data Cloud addresses data integration challenges by letting users connect any data source. The result is a unified customer profile enriched with AI, automation, and analytics. Data Cloud unifies and harmonizes customer data, enterprise content, telemetry data, Slack conversations, and other structured and unstructured data into a single view of the customer.

Einstein 1 Data Cloud is natively integrated with the Einstein 1 Platform, allowing companies to unlock siloed data and scale in entirely new ways. The platform supports thousands of metadata-enabled objects per customer. In addition, re-engineering Marketing Cloud and Commerce Cloud onto the Einstein 1 Platform lets them seamlessly incorporate massive data volumes. Salesforce offers Data Cloud at no cost to customers on Enterprise Edition or above, underscoring its commitment to supporting businesses at various stages of maturity.

Einstein Copilot Search and the Data Cloud Vector Database extend Einstein 1 further. The former improves AI search, and the latter unifies structured and unstructured data to inform workflows and automation. Einstein 1 also introduces generative AI-powered conversational assistants that operate within the secure Einstein Trust Layer, boosting productivity while protecting data privacy. Businesses are encouraged to adopt Einstein 1 as a strategic step toward becoming AI-centric, using its unified data approach to train AI models effectively for informed decision-making.

The Data Cloud Vector Database unifies structured and unstructured business data to enrich AI prompts and streamline workflows. Generative AI affects each business differently, augmenting processes to improve efficiency and productivity across sales, service, and field service teams. By addressing fragmented customer data, Einstein 1 offers a unified view for effective AI model training and decision-making. Salesforce's continued development of the platform gives businesses access to cutting-edge AI technologies, positioning Einstein 1 as a key tool for staying ahead in an AI-centric landscape.

Ready to explore Data Cloud for Einstein 1? Limited access is available for $0, giving businesses an exclusive opportunity to try this transformative solution.

Einstein GPT extends conversational AI across Marketing Cloud and Commerce Cloud, with the Data Cloud Vector Database playing a pivotal role in unifying data for Einstein 1 users. Einstein now offers generative AI-powered conversational assistance through two capabilities: Einstein Copilot and Einstein Copilot Studio. Both operate within the Einstein Trust Layer, a secure AI architecture built natively into the Einstein 1 Platform. The Trust Layer lets you use generative AI while preserving data privacy and security standards.

Einstein Copilot is an out-of-the-box conversational AI assistant built into the user experience of every Salesforce application. It drives productivity by assisting users within their flow of work. Users can ask questions in natural language and receive relevant, trustworthy answers grounded in secure, proprietary company data from Data Cloud.

Because the Data Cloud Vector Database simplifies data integration, it can enrich AI prompts without costly updates to the underlying AI models. Integrated with the Einstein Trust Layer, Data Cloud provides secure data access and visualization, enabling businesses to fully harness generative AI. Together, Einstein 1 and Data Cloud offer a solution for organizations seeking comprehensive customer insights, with Silverline expertise to guide them in getting the most out of AI.

The Einstein 1 Platform securely integrates data and connects Salesforce products, empowering customer-centric businesses with AI-driven applications. Data Cloud for Einstein 1 supports AI assistants and enhances customer experiences, driving productivity and reducing operational costs. Generative AI augments existing processes, particularly in sales, service, and customer support, and Einstein 1 provides tools to streamline these operations. The platform's impact shows in increased productivity and better customer experiences, and its ongoing evolution helps keep businesses at the forefront of AI technology.

Ready to embrace AI-driven productivity? Explore Data Cloud for Einstein 1 and transform your business operations today.