Supporting the development of AI design patterns that demonstrate trustworthiness not only enhances user experiences but also serves as an enabling tool for informing more effective policy and compliance measures.

By prototyping patterns, teams can effectively communicate complex policies, illustrating how they could function within industries and for users. This approach also facilitates the testing of patterns, enabling teams to swiftly identify trade-offs and challenge assumptions, thereby accelerating the establishment of industry standards for best practices. Ultimately, this iterative process leads to the creation of better policies and services that yield superior outcomes for both individuals and organizations.

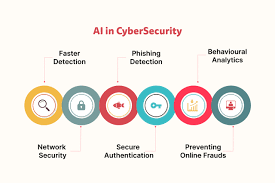

Patterns for AI Security

For instance, consider the pattern of “watermarking,” mandated by China’s Cyberspace Administration and poised to be adopted by the USA and EU. Through exploration of this pattern, the team at IF highlighted the inherent challenges associated with implementing watermarking for users and businesses.

Another design pattern is the AI query router. A user enters a query, and that query is sent to a router, which is a classifier that categorizes the input.

A recognized query is routed to a small language model, which tends to be more accurate, more responsive, and less expensive to operate.

If the query is not recognized, a large language model handles it. LLMs are much more expensive to operate, but they successfully return answers to a larger variety of queries.

In this way, an AI product can balance cost, performance, and user experience.
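The routing flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the keyword table stands in for a trained classifier, and `classify`, `small_model`, `large_model`, `route`, and the intent names are all hypothetical placeholders rather than part of any real library.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RoutedAnswer:
    model: str             # which backend handled the query: "small" or "large"
    intent: Optional[str]  # recognized category, or None if unrecognized
    text: str              # the (stubbed) model response


# Hypothetical keyword table standing in for a trained classifier.
INTENT_KEYWORDS = {
    "order_status": {"order", "tracking", "delivery"},
    "password_reset": {"password", "reset", "login"},
}


def classify(query: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the query, else None."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return None


def small_model(query: str, intent: str) -> str:
    # Placeholder for a call to a small, task-specific language model.
    return f"[small model, intent={intent}] answer to: {query}"


def large_model(query: str) -> str:
    # Placeholder for a call to a general-purpose large language model.
    return f"[large model] answer to: {query}"


def route(
    query: str,
    small: Callable[[str, str], str] = small_model,
    large: Callable[[str], str] = large_model,
) -> RoutedAnswer:
    """Send recognized queries to the small model and everything else to the LLM."""
    intent = classify(query)
    if intent is not None:
        return RoutedAnswer("small", intent, small(query, intent))
    return RoutedAnswer("large", None, large(query))


if __name__ == "__main__":
    for q in ("Where is my order?", "Explain the history of the Silk Road"):
        result = route(q)
        print(f"{result.model:5} | {result.intent} | {q}")
```

In a real product the classifier would more likely be a trained model than a keyword lookup, and the two stub functions would wrap actual model calls. The shape of the decision stays the same: classify first, then pay for the large model only when the query falls outside the recognized categories.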

Moreover, investing in trustworthy solutions not only addresses immediate challenges but also positions businesses for long-term success. As reliance on AI systems becomes ubiquitous, the complexities of trust, collaboration, and robustness will only intensify. Stakeholders in both the private and public sectors increasingly expect organizations to deliver responsible solutions that prioritize user value without compromising on privacy. This is particularly evident among Gen Z individuals, who demand technology that understands and anticipates their needs while upholding privacy standards. Gen Alpha will likely be even more so inclined.

Organizations that recognize the significance of trustworthiness and proactively invest in differentiating their products and services accordingly stand to gain a competitive advantage in the evolving landscape. By prioritizing trustworthiness, businesses can not only meet the expectations of their stakeholders but also foster lasting relationships built on transparency, reliability, and integrity.

We all anchor to some tried and tested methods, approaches, and patterns when building something new. This is certainly true in software engineering; for generative AI, and artificial intelligence more broadly, it may not yet be the case. With emerging technologies such as generative AI, we lack well-documented patterns to ground our solutions.

Here are a handful of approaches and patterns for generative AI, based on an evaluation of many production implementations of LLMs. The goal of these patterns is to help mitigate and overcome some of the challenges of generative AI implementations, such as cost, latency, and hallucinations.

List of Patterns

- Layered Caching Strategy Leading To Fine-Tuning

- Multiplexing AI Agents For A Panel Of Experts

- Fine-Tuning LLMs For Multiple Tasks

- Blending Rules Based & Generative

- Utilizing Knowledge Graphs with LLMs

- Swarm Of Generative AI Agents

- Modular Monolith LLM Approach With Composability

- Approach To Memory Cognition For LLMs

- Red & Blue Team Dual-Model Evaluation