Unveiling the Vision Transformer: A Leap in Video Generation

The closest open-source model to SORA is Latte, which uses the same Vision Transformer architecture. So, what makes the Vision Transformer so outstanding, and how does it differ from previous methods? You can Train Your Own SORA Model.

Latte hasn’t open-sourced its text-to-video training code. We’ve replicated this code from the paper and made it available for anyone to use in training their own SORA alternative model. Let’s discuss how effective our training was.

From 3D U-Net to Vision Transformer

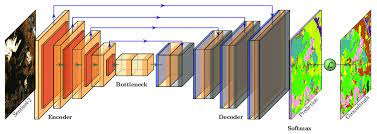

Image generation has advanced significantly, with the U-Net model structure being the most commonly used:

- 2D U-Net Architecture: U-Net compresses and shrinks the input image, then gradually decodes and enlarges it, forming a U shape. Early video generation models extended the U-Net structure to support video by adding a time dimension, thus converting 2D height and width to 3D:

- 3D U-Net: Integrating a transformer within this time dimension allows the model to learn what the image should look like at a given time point (e.g., the nth frame). A 3D U-Net generates 16 images given a prompt, but the transformer’s function is limited within the U-Net, leading to poor consistency between consecutive frames and insufficient learning capacity for larger movements and motions.

If you’re confused about the network structures, remember the key principle of deep learning: “Just Add More Layers!”

Vision Transformer: A Game Changer

In 3D U-Net, the transformer can only function within the U-Net, limiting its view. The Vision Transformer, however, enables transformers to globally manage video generation.

- Global Dominance: The Vision Transformer treats a video as a sequence, similar to language models. Each data block in the sequence could be a small piece of an image, encoded into a sequence of tokens, much like how a language model tokenizer works.

- Simple and Brute-Force Design: This approach aligns with OpenAI’s style — simple and operable model structures make it easier to scale models with larger training data. Instead of competing in model structures, OpenAI focuses on data, leveraging petabytes of data and tens of thousands of GPUs.

- Enhanced Capabilities: Compared to 3D U-Net, Vision Transformer significantly enhances the model’s ability to learn motion patterns, handle greater movement amplitude, and generate longer video lengths.

Training Your Open-Source SORA Alternative with Latte

Latte uses the video slicing sequence and Vision Transformer method discussed. While Latte hasn’t open-sourced its text-to-video model training code, we’ve replicated it here: GitHub Repo. Training involves three steps:

- Download and train the model, install the environment.

- Prepare training videos.

- Run the training:

./run_img_t2v_train.sh.

For more details, see the GitHub repo.

They’ve also made improvements to the training process:

- Added support for gradient accumulation to reduce memory requirements.

- Included validation samples during training to verify the process.

- Added support for wandb.

- Included support for classifier-free guidance training.

Model Performance

The official Latte video shows impressive performance, especially in handling significant motion. However, our own tests indicate that while Latte performs well, it isn’t the top-performing model. Other open-source models have shown better performance.

- Scaling Law: Latte’s larger scale requires more and higher-quality training data.

- Pretrained Image Model: The performance of a video model relies heavily on its pretrained image model, which in Latte’s case, needs further strengthening.

We will continue to share information on models with better performance, so stay tuned to Tectonic’s Insights.

Hardware Requirements

Due to its large scale, training Latte requires an A100 or H100 with 80GB of memory.

🔔🔔 Follow us on LinkedIn 🔔🔔